Transforming AI through Function Calling

Function calling is a powerful capability that lets AI language models interact with external tools. Instead of answering only in free text, the model generates a structured JSON object that names the function to call and supplies its arguments, which an application can then execute. However, existing evaluation methods often fail to reflect real-world interactions. They focus mainly on the tool-call messages themselves rather than on the broader human-AI conversation in which those calls take place.
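To make the "structured JSON object" idea concrete, here is a minimal sketch of the application side of function calling. It assumes a hypothetical `get_weather` tool described in a JSON-Schema style similar to what many function-calling APIs accept. It is not any specific vendor's API, and the tool itself is a stub. The model's output is a JSON string naming the function and its arguments; the application parses it, checks required arguments, and runs the matching local function.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical tool definition in a JSON-Schema style (illustrative, not a real API).
GET_WEATHER_SCHEMA = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}


def get_weather(city: str, unit: str = "celsius") -> str:
    # Stub implementation; a real tool would call an external weather service.
    return f"22 degrees {unit} in {city}"


# Registry mapping tool names to (schema, implementation).
TOOLS: Dict[str, Tuple[dict, Callable[..., Any]]] = {
    "get_weather": (GET_WEATHER_SCHEMA, get_weather),
}


def dispatch(model_output: str) -> Any:
    """Parse a model's JSON tool call and run the matching local function."""
    call = json.loads(model_output)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    schema, fn = TOOLS[name]
    # Reject calls that omit arguments the schema marks as required.
    missing = [p for p in schema["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return fn(**args)


print(dispatch('{"name": "get_weather", "arguments": {"city": "Seoul"}}'))
# → 22 degrees celsius in Seoul
```

Validating against the schema before executing keeps a malformed model output from reaching the tool. Note that this sketch covers only the tool-call message, which is exactly the narrow slice that the evaluations criticized above tend to measure.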

Addressing Key Challenges

Tool use in conversation involves more than executing commands. A model must also decide when a tool is needed, ask for missing information, and explain tool results to the user in natural language. Meeting this demand requires function-calling evaluation frameworks that measure technical accuracy and conversational quality together.

Recent Developments in Evaluation

Recent research on how language models use tools has produced benchmarks such as APIBench, GPT4Tools, RestGPT, and ToolBench, which evaluate how effectively models use tools. Later work such as MetaTool and the Berkeley Function Calling Leaderboard (BFCL) added tests of tool awareness and function relevance detection, that is, recognizing whether any available function actually fits a request. However, most of these methods still do not assess how models interact with users over the course of a multi-turn conversation.

Introducing FunctionChat-Bench

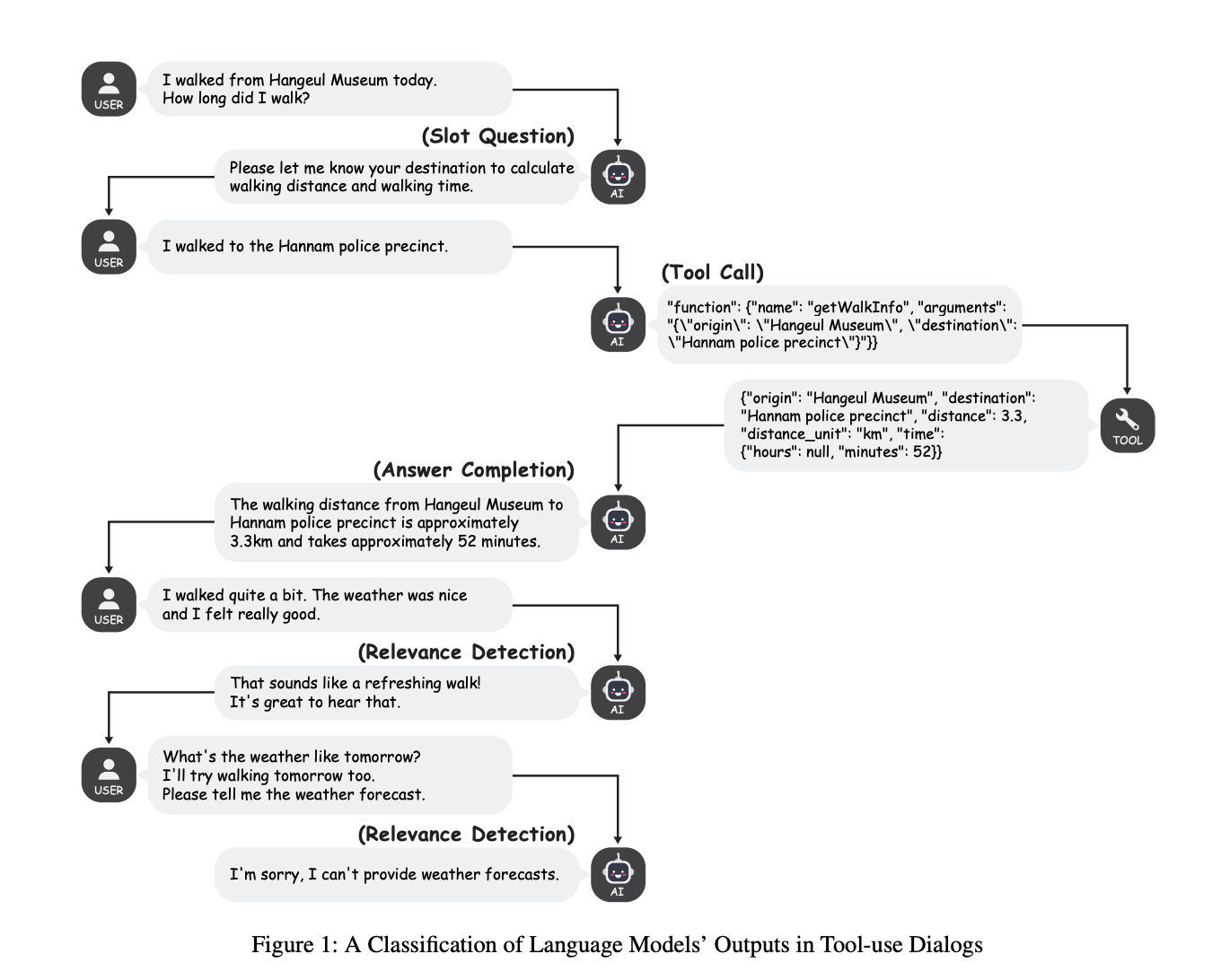

Researchers from Kakao Corp. have released FunctionChat-Bench, a benchmark for evaluating models’ function-calling abilities across a range of scenarios. It consists of 700 evaluation items together with automated evaluation programs. By evaluating single-turn and multi-turn dialogues separately, it challenges the assumption that high performance on isolated tool calls implies strong interactive skill.

Evaluation Framework

FunctionChat-Bench uses a two-part evaluation framework consisting of:

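- Single Call Dataset: Each item is a single user request that contains all the information needed to invoke a tool, so the model should produce the correct function call directly.

- Dialog Dataset: Simulates more complex multi-turn interactions, in which the model must handle successive user inputs and ask follow-up questions when information is missing.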
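The difference between the two datasets can be sketched as data structures. The class and field names below are hypothetical and do not reflect FunctionChat-Bench's actual file format. The `single_call_correct` check is a deliberately strict exact-match simplification for illustration; it is not necessarily how the benchmark's automated programs score outputs.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class ToolCall:
    name: str
    arguments: Dict[str, Any]


@dataclass
class SingleCallItem:
    # A self-contained user request and the candidate functions offered to the model.
    user_request: str
    candidate_tools: List[str]
    expected_call: ToolCall


@dataclass
class DialogTurn:
    role: str  # "user", "assistant", or "tool"
    content: str = ""
    tool_call: Optional[ToolCall] = None


@dataclass
class DialogItem:
    # Conversation so far, plus the expected next assistant turn: either a
    # tool call or a text reply such as a follow-up question for missing details.
    history: List[DialogTurn] = field(default_factory=list)
    expected_next: Optional[DialogTurn] = None


def single_call_correct(item: SingleCallItem, predicted: Optional[ToolCall]) -> bool:
    """Strict exact match: same function name and identical argument values."""
    return (
        predicted is not None
        and predicted.name == item.expected_call.name
        and predicted.arguments == item.expected_call.arguments
    )


# Hypothetical dialog item whose correct next step is a follow-up question,
# because the user has not said where or for how many people.
booking = DialogItem(
    history=[DialogTurn("user", "Book a table for tonight.")],
    expected_next=DialogTurn("assistant", "Which restaurant, and for how many people?"),
)
```

For example, given `SingleCallItem("What's the weather in Seoul?", ["get_weather", "get_time"], ToolCall("get_weather", {"city": "Seoul"}))`, a prediction of `ToolCall("get_time", {"city": "Seoul"})` fails the check. The `booking` item shows why a single-call check alone is not enough: there, the right answer is not a tool call at all.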

Insights from Experimental Results

Results from FunctionChat-Bench offer useful insights into models’ function-calling abilities. For example, the Gemini model's accuracy improved as more candidate functions were provided, while GPT-4-turbo showed a large accuracy gap between settings in which the candidate functions were chosen at random and settings in which they were closely related to the target function. The dialog dataset also supports more detailed analyses, covering both the conversational replies a model produces and the relevance of its tool calls across multi-turn interactions.
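Findings like these come from breaking accuracy down by evaluation setting rather than reporting a single number. The sketch below groups per-item outcomes by candidate count and candidate type. The `"random"` and `"close"` labels and the `Result` record are hypothetical, and the toy outcomes are made up for illustration; they are not FunctionChat-Bench results.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Result(NamedTuple):
    num_candidates: int  # how many functions were offered to the model
    candidate_type: str  # hypothetical labels, e.g. "random" or "close"
    correct: bool


def accuracy_by_setting(results: Iterable[Result]) -> Dict[Tuple[int, str], float]:
    """Group per-item outcomes by (candidate count, candidate type) and compute accuracy."""
    totals: Dict[Tuple[int, str], List[int]] = defaultdict(lambda: [0, 0])
    for r in results:
        key = (r.num_candidates, r.candidate_type)
        totals[key][0] += int(r.correct)  # number correct
        totals[key][1] += 1               # number attempted
    return {key: hits / n for key, (hits, n) in sorted(totals.items())}


# Toy outcomes for illustration only; these are not benchmark results.
toy = [
    Result(4, "random", True), Result(4, "random", True),
    Result(4, "close", True), Result(4, "close", False),
]
print(accuracy_by_setting(toy))
# → {(4, 'close'): 0.5, (4, 'random'): 1.0}
```

Comparing the `"random"` and `"close"` entries at the same candidate count isolates how much harder near-duplicate functions make selection, which is the kind of gap described above.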

Future Directions in AI Research

This research proposes a new way to evaluate AI systems, focusing specifically on their function-calling capabilities. While it sets a new standard, it also highlights the need for future studies of complex interactive AI systems.

Get Involved

To dive deeper into this research, see the paper and the GitHub page.

Enhance Your Business with AI

Stay competitive by using benchmarks like FunctionChat-Bench to judge whether a function-calling model is ready for your use case. Explore how AI can redefine your operations:

- Identify Automation Opportunities: Target customer interaction points that could benefit from AI.

- Define KPIs: Make sure your AI initiatives have a measurable impact.

- Select an AI Solution: Choose tools that meet your specific needs.

- Implement Gradually: Begin with pilot programs, gather feedback, and expand strategically.

Connect for More Insights

For advice on AI KPI management, contact us at hello@itinai.com. For ongoing insights, follow us on Telegram or Twitter.

Discover how AI can transform your sales processes and customer engagement at itinai.com.