Understanding Transformer-Based Language Models

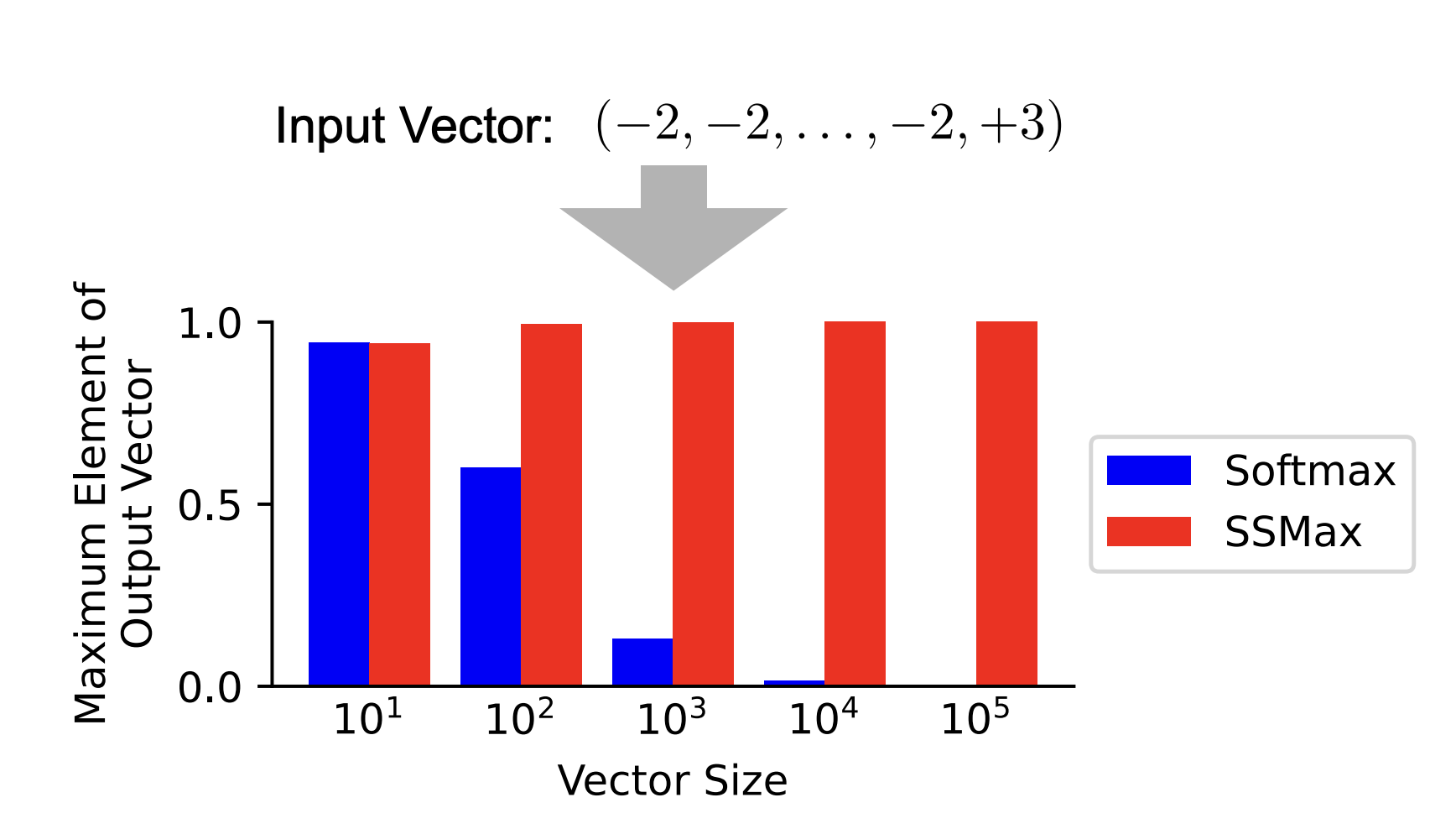

Transformer-based language models process text by modeling the relationships between all tokens in the input rather than reading strictly in sequence. Their attention mechanism gives each token a weight that reflects how relevant it is to the token being processed. However, these models struggle with long texts because of the Softmax function that turns attention scores into weights. The weights must sum to 1, so as the number of tokens grows, the probability mass is spread over more positions and even the most relevant token receives a smaller share. This problem, known as attention fading, causes the model to lose focus on key information in long inputs.
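To make attention fading concrete, here is a minimal Python sketch under an assumed toy setup (not taken from the paper): one key token has a logit of 5, every other token has a logit of 0, and we measure how much Softmax weight the key token keeps as the number of tokens n grows. The helper names `softmax` and `key_token_weight` are hypothetical.

```python
import math


def softmax(logits):
    """Standard Softmax, shifted by the max logit for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def key_token_weight(n, key_logit=5.0, other_logit=0.0):
    """Weight on one 'key' token among n tokens whose other logits are equal.

    Toy setup chosen for illustration only.
    """
    logits = [key_logit] + [other_logit] * (n - 1)
    return softmax(logits)[0]


for n in (10, 100, 1_000, 10_000):
    print(f"n={n:>6}: weight on key token = {key_token_weight(n):.3f}")
```

Because the logits stay fixed while the Softmax denominator grows with n, the key token's weight drops from roughly 0.94 at n = 10 to roughly 0.015 at n = 10,000, even though nothing about the key token itself changed.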

Challenges with Current Methods

To improve how these models handle longer texts, current methods include:

- Positional encoding

- Sparse attention

- Extended training on longer texts

- Enhanced attention mechanisms

However, these methods tend to scale poorly and demand significant computational resources, which makes them inefficient for processing long inputs.

Introducing Scalable-Softmax (SSMax)

A researcher from The University of Tokyo has proposed Scalable-Softmax (SSMax), a modification of the Softmax function designed to keep attention focused on important tokens as the input grows. SSMax scales the attention scores by a factor that increases logarithmically with the input size, so that key information remains prominent even in long contexts. This lets the model concentrate attention on relevant elements when they stand out, while still distributing it more broadly when no single element dominates.
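The article does not spell out the formula. A hedged reading, assuming the definition used in the SSMax paper, is that each logit is multiplied by s log n, where n is the input size and s is a scaling parameter (described in the paper as learnable). The toy case below is our own illustration, not the paper's: one key logit z_1 exceeds the other n - 1 equal logits z_0 by a gap Δ = z_1 - z_0 > 0.

```latex
\begin{aligned}
\mathrm{Softmax}(z)_i &= \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}}, \\
\mathrm{SSMax}(z)_i &= \frac{n^{s z_i}}{\sum_{j=1}^{n} n^{s z_j}}
  = \frac{e^{(s \log n)\, z_i}}{\sum_{j=1}^{n} e^{(s \log n)\, z_j}}, \\
\mathrm{Softmax}(z)_1 &= \frac{1}{1 + (n-1)\, e^{-\Delta}}
  \xrightarrow{\;n \to \infty\;} 0, \\
\mathrm{SSMax}(z)_1 &= \frac{1}{1 + (n-1)\, n^{-s\Delta}}
  \xrightarrow{\;n \to \infty\;}
  \begin{cases}
    1, & s\Delta > 1, \\
    1/2, & s\Delta = 1, \\
    0, & s\Delta < 1.
  \end{cases}
\end{aligned}
```

In this toy case, plain Softmax lets the key token's weight vanish for any fixed gap as n grows, whereas SSMax keeps it near 1 when the gap is large enough (sΔ > 1) and spreads attention out when it is not, which matches the behavior described above.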

Benefits of SSMax

SSMax can be integrated into existing models with minimal changes, since it requires only one additional multiplication in the attention computation. The researcher evaluated its effectiveness in experiments focusing on:

- Training efficiency

- Long-context generalization

- Key information retrieval

- Attention allocation

The reported results show that SSMax consistently improved performance across the tested configurations, improving both training efficiency and the retrieval of key information in long contexts.
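The "simple multiplication" that makes SSMax easy to integrate can be illustrated with a minimal, hypothetical single-query attention sketch in pure Python. It assumes SSMax is Softmax applied to logits scaled by s·log(n), with n taken as the number of keys the query attends to and s fixed at 1 rather than learned. The names `ssmax`, `attention_weights`, and `toy_keys` are illustrative, not the paper's code.

```python
import math


def softmax(logits):
    """Standard Softmax, shifted by the max logit for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ssmax(logits, s=1.0):
    """Scalable-Softmax sketch: Softmax of the logits scaled by s * log(n).

    Assumption: n is the number of logits (keys visible to the query) and
    s is a fixed scalar here; in a trained model it would be learned.
    """
    n = len(logits)
    scale = s * math.log(n)  # the single extra multiplication
    return softmax([scale * z for z in logits])


def attention_weights(query, keys, use_ssmax=False, s=1.0):
    """Attention weights of one query over n keys (scaled dot product)."""
    d = len(query)
    logits = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # Multiplying these logits by s * log(n) is equivalent to scaling the query.
    return ssmax(logits, s) if use_ssmax else softmax(logits)


def toy_keys(n, d=4):
    """Hypothetical keys: key 0 matches the query, the other n - 1 are orthogonal to it."""
    match = [2.0] + [0.0] * (d - 1)
    distractor = [0.0, 1.0] + [0.0] * (d - 2)
    return [match] + [distractor] * (n - 1)


query = [2.0, 0.0, 0.0, 0.0]
for n in (10, 1_000, 100_000):
    keys = toy_keys(n)
    plain = attention_weights(query, keys)[0]
    scaled = attention_weights(query, keys, use_ssmax=True)[0]
    print(f"n={n:>7}: Softmax weight {plain:.3f}, SSMax weight {scaled:.3f}")
```

In this toy setup, the plain Softmax weight on the matching key falls from about 0.45 at n = 10 to below 0.01 at n = 1,000, while the SSMax weight stays above 0.9 throughout. Because scaling the logits by s·log(n) is the same as scaling the query vector, an existing attention layer needs only that one multiplication to switch over.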

Conclusion

In summary, SSMax strengthens transformer attention by counteracting attention fading, which improves performance on long-context tasks. Because it needs only a small change to the attention computation, it is a strong alternative to Softmax for both new and existing models. Future work could further optimize SSMax for efficiency and for real-world applications.
