FocusLLM: A Scalable AI Framework for Efficient Long-Context Processing in Language Models

Practical Solutions and Value

Enabling large language models (LLMs) to handle long contexts effectively is crucial for applications such as document summarization and question answering. However, the cost of standard transformer self-attention grows quadratically with sequence length. As a result, extending the context window brings high training costs and a risk of information loss, and it depends on high-quality long-text datasets that are difficult to obtain.

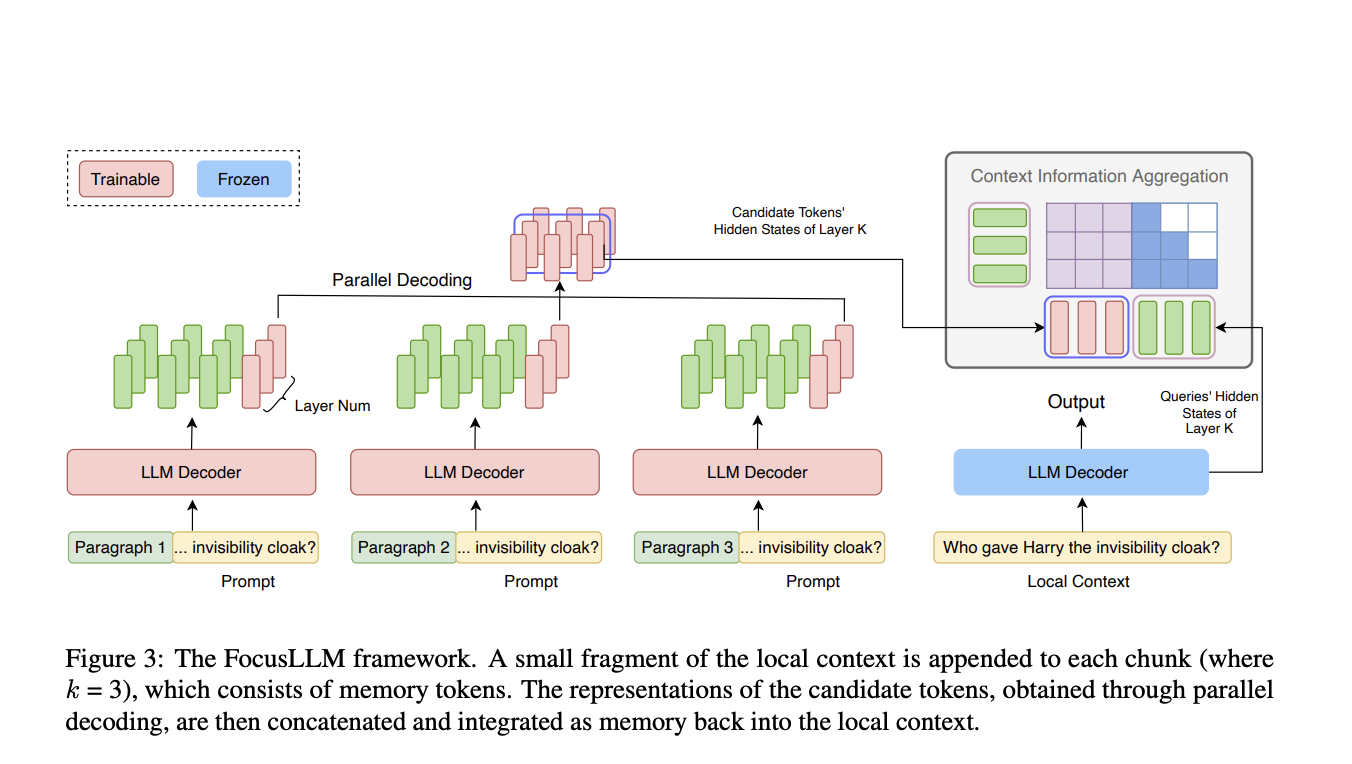

FocusLLM is a framework that extends the context length of LLMs using a parallel decoding strategy. It divides a long text into manageable chunks, extracts the essential information from each chunk, and integrates that information into the context. FocusLLM achieves superior performance on downstream tasks and maintains low perplexity even on sequences of up to 400K tokens. Its design is also highly training-efficient, enabling long-context processing at low computational and memory cost.
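The chunk-and-aggregate idea can be illustrated with a small Python sketch. It is a simplified illustration under stated assumptions, not FocusLLM's actual implementation. The names `split_into_chunks`, `build_focused_context`, and `toy_extract` are hypothetical. In FocusLLM the language model itself extracts information from each chunk, whereas here a toy function picks a single token per chunk. Each chunk is processed together with the local context (the most recent tokens) independently of the other chunks, and the extracted results are placed in front of the local context.

```python
from typing import Callable, List, Sequence

Token = int
# Hypothetical signature: (chunk, local_context) -> one extracted token.
Extractor = Callable[[List[Token], List[Token]], Token]


def split_into_chunks(tokens: Sequence[Token], chunk_size: int) -> List[List[Token]]:
    """Split a token sequence into consecutive chunks of at most chunk_size tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [list(tokens[i:i + chunk_size]) for i in range(0, len(tokens), chunk_size)]


def build_focused_context(
    tokens: Sequence[Token], chunk_size: int, local_size: int, extract: Extractor
) -> List[Token]:
    """Compress a long sequence into one extracted token per chunk plus the local context."""
    # Keep the most recent local_size tokens verbatim as the local context.
    local = list(tokens[-local_size:]) if local_size > 0 else []
    # Everything before the local context is the long "memory" to be chunked.
    memory = tokens[: len(tokens) - len(local)]
    chunks = split_into_chunks(memory, chunk_size)
    # Each chunk sees only itself plus the local context, so these calls are
    # independent; a real system would batch them into one parallel forward pass.
    candidates = [extract(chunk, local) for chunk in chunks]
    # Aggregate: the extracted items are placed in front of the local context.
    return candidates + local


def toy_extract(chunk: List[Token], local: List[Token]) -> Token:
    """Toy stand-in for the model: pick the chunk token closest to the last local token."""
    if not local:
        return max(chunk)
    return min(chunk, key=lambda t: abs(t - local[-1]))


print(build_focused_context(list(range(10)), chunk_size=3, local_size=2, extract=toy_extract))
# → [2, 5, 7, 8, 9]
```

Because every chunk is processed separately, attention cost in this sketch depends on the number of chunks rather than the full length. With n tokens, chunk size c, and local context size l, the sketch needs on the order of (n/c)·(c+l)² pairwise attention scores instead of n² for full attention. For fixed c and l, that cost grows linearly in n.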

Value Proposition

FocusLLM outperforms other long-context methods on tasks such as question answering and long-text comprehension. It achieves superior results on the LongBench and ∞-Bench benchmarks while maintaining low perplexity on very long sequences. This makes it a scalable way to extend LLMs and a valuable tool for long-context applications.
