Practical Solutions for Deploying Large Language Models (LLMs)

Addressing Latency with Weight-Only Quantization

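Large Language Model (LLM) inference is often limited by memory bandwidth rather than compute, especially at small batch sizes, because generating each token requires reading the model's weights from GPU memory. Weight-only quantization compresses LLM parameters to lower precision while leaving activations in higher precision. Less data then moves per token, which improves latency and reduces GPU memory requirements.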
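The article does not name a specific quantization scheme. As a minimal sketch of what weight-only quantization means, the Python below applies one common variant (uniform, asymmetric, group-wise) to a short list of weights. Each group of weights becomes 4-bit integer codes plus a per-group scale and offset, and dequantization approximately reverses the mapping. The function names and the group size of 4 are illustrative assumptions, not FLUTE's API.

```python
# Group-wise, weight-only uniform quantization (illustrative sketch).
# Assumptions: min/max (asymmetric) scaling, one (scale, offset) pair per group.

def quantize_groupwise(weights, bits=4, group_size=4):
    """Map each group of float weights to integer codes in [0, 2**bits - 1]."""
    levels = (1 << bits) - 1  # 15 for 4-bit codes
    codes, scales, zeros = [], [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels if hi > lo else 1.0
        codes.extend(round((w - lo) / scale) for w in group)
        scales.append(scale)
        zeros.append(lo)
    return codes, scales, zeros

def dequantize_groupwise(codes, scales, zeros, group_size=4):
    """Recover approximate weights: offset + code * scale for each group."""
    return [zeros[i // group_size] + c * scales[i // group_size]
            for i, c in enumerate(codes)]

weights = [0.12, -0.50, 0.33, 0.90, -1.20, 0.05, 0.40, 0.75]
codes, scales, zeros = quantize_groupwise(weights)
approx = dequantize_groupwise(codes, scales, zeros)
print(codes)
print([round(a, 3) for a in approx])
```

Only the 4-bit codes and a few per-group parameters need to be stored and read, instead of one 16-bit value per weight; the rounding error per weight is at most half of its group's scale.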

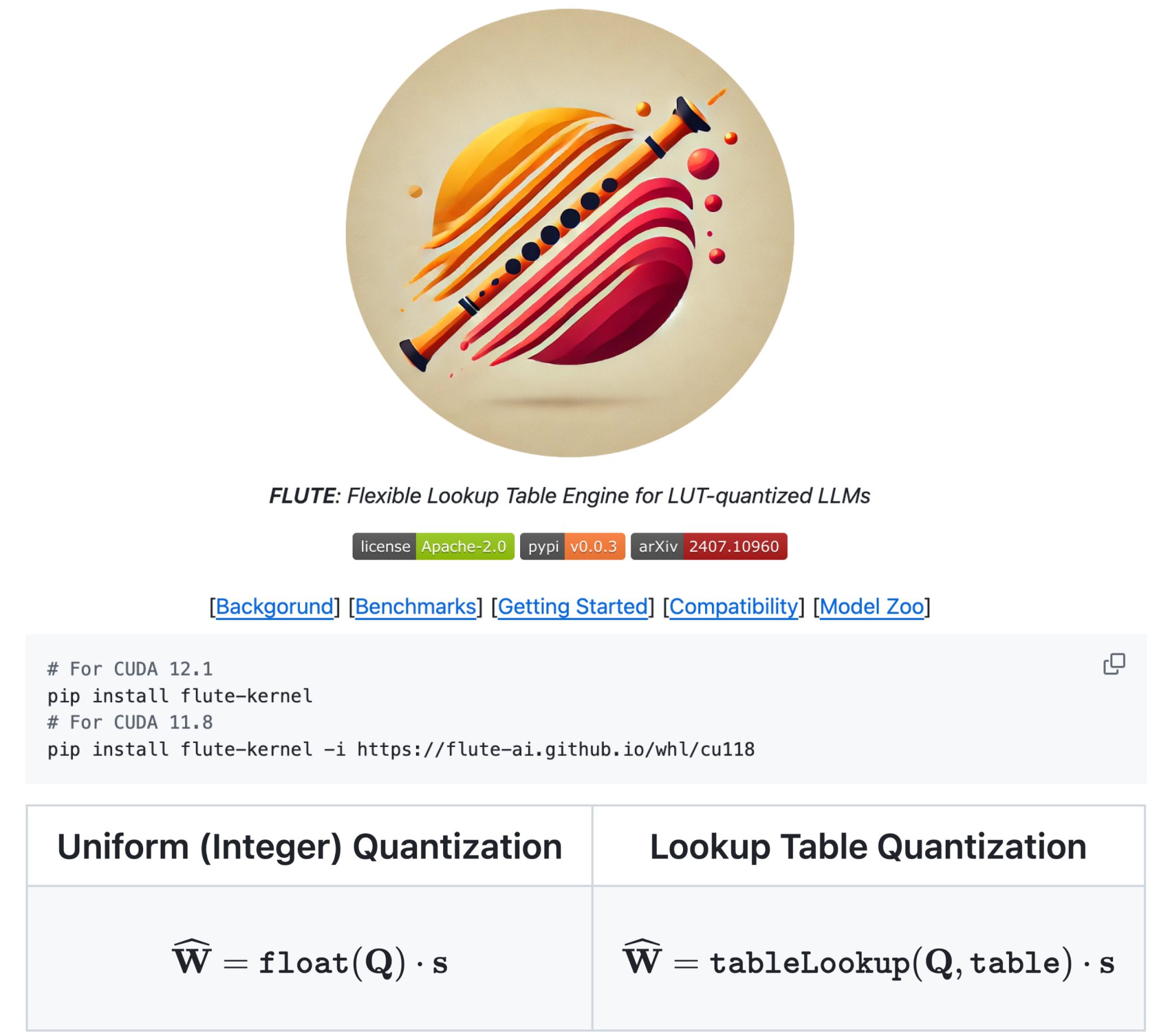

Flexible Lookup-Table Engine (FLUTE)

FLUTE, developed by researchers from several institutions, introduces a new approach for deploying weight-quantized LLMs, with a focus on low-bit and non-uniform (lookup-table) quantization. It handles the complexities these settings introduce, improving efficiency and performance in scenarios where traditional methods fall short.
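In non-uniform, lookup-table quantization, each weight is stored as a short integer index into a small table of arbitrary (not evenly spaced) values, so dequantization becomes a table lookup followed by a multiply by a scale. A minimal sketch in plain Python, assuming a hypothetical 8-entry (3-bit) table and a single absmax scale per group; the table values and function names are illustrative and are not taken from FLUTE.

```python
# Non-uniform (lookup-table) quantization of one group of weights.
# Hypothetical 3-bit table: 2**3 = 8 values with uneven spacing.
TABLE = [-1.0, -0.6, -0.3, -0.1, 0.1, 0.3, 0.6, 1.0]

def quantize_lut(weights, table=TABLE):
    """Store each weight as the index of the nearest table value after absmax scaling."""
    scale = max(abs(w) for w in weights) or 1.0  # one scale for the whole group
    codes = [min(range(len(table)), key=lambda i: abs(w / scale - table[i]))
             for w in weights]
    return codes, scale

def dequantize_lut(codes, scale, table=TABLE):
    """Dequantization is a table lookup followed by a multiply by the group scale."""
    return [table[c] * scale for c in codes]

weights = [0.9, -0.25, 0.05, -0.8, 0.5]
codes, scale = quantize_lut(weights)
print(codes)
print(dequantize_lut(codes, scale))
```

Because the table can hold any values, it can place more levels where weights are dense; the cost is that a kernel must perform a lookup for every weight, which is the work FLUTE's strategies aim to make cheap.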

Key Strategies of FLUTE

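- Offline Matrix Restructuring: FLUTE restructures the quantized weight matrix offline, ahead of inference, so that weights with non-standard bit widths (such as 3-bit) can be loaded and dequantized efficiently at runtime.

- Vectorized Lookup in Shared Memory: FLUTE keeps the lookup table in GPU shared memory and vectorizes the lookups for efficient dequantization; it also duplicates the table to reduce shared-memory bank conflicts.

- Stream-K Workload Partitioning: FLUTE uses Stream-K decomposition to distribute work evenly across the GPU's streaming multiprocessors (SMs). This improves utilization in low-bit, low-batch scenarios, where the matrix multiplication produces too few output tiles to keep every SM busy.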
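Two of the strategies above can be sketched in plain Python. The first part shows one way to vectorize a lookup: precompute a table of value pairs (2^(2b) entries for b-bit codes) so that two codes are dequantized with a single read. It does not model shared-memory banks or table duplication. The second part shows Stream-K-style partitioning, which splits the total number of inner-loop (k) iterations, rather than whole output tiles, evenly among SMs. The 2-bit table, the function names, and the tile and SM counts are hypothetical.

```python
from itertools import product

# --- Vectorized (pair) lookup: hypothetical 2-bit table with 4 values ---
BITS = 2
TABLE = [-1.0, -0.5, 0.5, 1.0]

def build_pair_table(table):
    """All (first, second) value pairs: 2**(2*BITS) = 16 entries for 2-bit codes."""
    return list(product(table, repeat=2))

def lookup_pairs(codes, pair_table, bits=BITS):
    """Dequantize two codes per lookup by packing them into one pair index."""
    out = []
    for i in range(0, len(codes), 2):
        index = (codes[i] << bits) | codes[i + 1]  # first code in the high bits
        out.extend(pair_table[index])
    return out

# --- Stream-K-style partitioning of the k-iteration space across SMs ---
def stream_k_partition(num_tiles, iters_per_tile, num_sms):
    """Give each SM an (almost) equal share of all tile k-iterations.

    Returns one list per SM of (tile, k_start, k_end) ranges. A tile whose
    range is split between SMs would need its partial sums combined.
    """
    total = num_tiles * iters_per_tile
    base, extra = divmod(total, num_sms)
    work, start = [], 0
    for sm in range(num_sms):
        end = start + base + (1 if sm < extra else 0)
        ranges, it = [], start
        while it < end:
            tile, k0 = divmod(it, iters_per_tile)
            k1 = min(iters_per_tile, k0 + (end - it))
            ranges.append((tile, k0, k1))
            it += k1 - k0
        work.append(ranges)
        start = end
    return work

pairs = build_pair_table(TABLE)
values = lookup_pairs([3, 0, 1, 2], pairs)
plan = stream_k_partition(num_tiles=3, iters_per_tile=4, num_sms=4)
print(values)
for sm, ranges in enumerate(plan):
    print(f"SM{sm}: {ranges}")
```

With 3 output tiles and 4 SMs, assigning whole tiles would leave one SM idle. The Stream-K-style split gives every SM three k-iterations, at the cost of combining partial results for tiles that are shared between SMs.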

Performance and Advantages of FLUTE

FLUTE performs well in LLM deployment across a range of quantization settings and batch sizes, and it compares favorably with specialized kernels. It also supports experiments with different bit widths and group sizes, making it a versatile and efficient option for deploying quantized LLMs.
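To make the bit-width and group-size settings concrete, the arithmetic below estimates weight storage per parameter and for a whole model. It assumes one 16-bit scale per group, ignores the lookup table itself (it holds only 2^bits values), and treats every weight as quantized; the 8-billion-parameter count is only an example size.

```python
# Storage cost of group-wise quantized weights (illustrative arithmetic).
# Assumption: one 16-bit scale per group; lookup-table storage is ignored.

def effective_bits(bits, group_size, scale_bits=16):
    """Bits stored per weight: its code plus its share of the group's scale."""
    return bits + scale_bits / group_size

def weight_gigabytes(num_params, bits_per_weight):
    """Weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

NUM_PARAMS = 8e9  # example: an 8-billion-parameter model
print(f"16-bit baseline: {weight_gigabytes(NUM_PARAMS, 16):.2f} GB")
for bits in (2, 3, 4):
    for group_size in (32, 64, 128):
        eff = effective_bits(bits, group_size)
        print(f"{bits}-bit, group {group_size:>3}: {eff:.3f} bits/weight, "
              f"{weight_gigabytes(NUM_PARAMS, eff):.2f} GB")
```

Smaller groups track the weight distribution more closely but spend more bits on scales; for example, 4-bit codes with groups of 128 cost 4.125 bits per weight under these assumptions.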

Accelerate LLM Inference with FLUTE

FLUTE is a CUDA kernel designed to accelerate LLM inference through fused quantized matrix multiplications, in which dequantization and matrix multiplication happen in a single kernel. Its performance is evaluated through kernel-level benchmarks and end-to-end experiments on state-of-the-art LLMs such as LLaMA-3 and Gemma-2. Its flexibility and performance make FLUTE a promising solution for accelerating LLM inference with advanced quantization techniques.
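The fused approach means each weight is dequantized inside the matrix multiplication loop instead of in a separate pass that writes out a full-precision matrix. A minimal sketch in plain Python, assuming lookup-table codes with one scale per group of columns; the table, function names, and shapes are hypothetical. A real CUDA kernel would also tile the work across threads and Tensor Cores, which this sketch does not attempt.

```python
# Fused lookup-table dequantization + matrix-vector product (illustrative sketch).
# Hypothetical 3-bit table; FLUTE's actual tables, layouts and API are not shown here.
TABLE = [-1.0, -0.6, -0.3, -0.1, 0.1, 0.3, 0.6, 1.0]

def fused_lut_matvec(codes, scales, x, group_size, table=TABLE):
    """Compute y = W @ x with W[r][c] = table[codes[r][c]] * scales[r][c // group_size].

    Each weight is dequantized inside the dot-product loop, so a full-precision
    copy of W is never built.
    """
    y = []
    for row_codes, row_scales in zip(codes, scales):
        acc = 0.0
        for c, (code, xc) in enumerate(zip(row_codes, x)):
            acc += table[code] * row_scales[c // group_size] * xc
        y.append(acc)
    return y

def unfused_matvec(codes, scales, x, group_size, table=TABLE):
    """Reference: dequantize the whole matrix first, then multiply."""
    w = [[table[code] * row_scales[c // group_size] for c, code in enumerate(row_codes)]
         for row_codes, row_scales in zip(codes, scales)]
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

codes = [[7, 0, 4, 3], [2, 5, 6, 1]]   # 3-bit codes, 2 rows x 4 columns
scales = [[0.5, 2.0], [1.0, 0.25]]     # one scale per group of 2 columns
x = [1.0, 2.0, 3.0, 4.0]
print(fused_lut_matvec(codes, scales, x, group_size=2))
print(unfused_matvec(codes, scales, x, group_size=2))
```

Both functions compute the same result; on a GPU, the fused ordering is what lets a kernel read only the low-bit codes and scales from memory, which is where the latency benefit of weight-only quantization comes from.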

Evolve with AI Using FLUTE

If you want to evolve your company with AI and stay competitive, FLUTE offers a practical way to accelerate LLM inference through advanced quantization techniques, lowering serving latency and GPU memory requirements.
