Fine-Tuning Llama 3.2 3B Instruct for Python Code

Overview

In this guide, we’ll show you how to fine-tune the Llama 3.2 3B Instruct model on a curated dataset of Python code using Unsloth. The steps cover installing dependencies, loading the dataset and a 4-bit quantized model, attaching LoRA adapters, training with SFTTrainer, and saving the result.

Installing Required Dependencies

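To get started, install the following libraries:

- Unsloth: A library for faster, more memory-efficient fine-tuning.

- Transformers: Hugging Face's library of pre-trained models, tokenizers, and training utilities.

- xFormers: Memory-efficient attention kernels that reduce GPU memory usage.

- TRL: Hugging Face's training library, which provides SFTTrainer.

- Datasets: Hugging Face's dataset library, which provides load_dataset.

Together, these cover everything the rest of this guide uses.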
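After installing, you can quickly confirm that the packages are visible to the current Python environment by querying their installed versions. The helper below is a hypothetical sketch that uses only the standard library. The distribution names (unsloth, transformers, xformers, trl, datasets) are assumed to match the PyPI packages you installed.

```python
from importlib.metadata import PackageNotFoundError, version

# Hypothetical helper (not part of Unsloth): report installed package versions.
def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None  # not installed in this environment
    return result


# Assumed PyPI distribution names for the libraries used in this guide.
REQUIRED = ["unsloth", "transformers", "xformers", "trl", "datasets"]

if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

Any package reported as NOT INSTALLED needs to be installed before you continue.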

Essential Imports

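Next, import the required classes and functions from the libraries:

- FastLanguageModel (from unsloth): For loading the model and tokenizer and attaching LoRA adapters.

- SFTTrainer (from trl): For supervised fine-tuning of the model.

- load_dataset (from datasets): For loading your dataset from the Hugging Face Hub.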

These imports set the stage for fine-tuning.

Loading the Python Code Dataset

Load your dataset. In this guide, training examples are limited to a maximum sequence length of 2048 tokens:

```python
from datasets import load_dataset

dataset = load_dataset("user/Llama-3.2-Python-Alpaca-143k", split="train")
```

Replace user with your Hugging Face username, and make sure the dataset is saved under that account so it can be loaded by name.
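Many Unsloth examples train on a single text column, so Alpaca-style records are first rendered into one prompt string per example. The sketch below assumes that this dataset uses the standard Alpaca columns instruction, input, and output. The dataset's name suggests this, but the guide does not confirm it. format_alpaca and ALPACA_TEMPLATE are hypothetical names, and the template is the widely used Alpaca prompt. An end-of-sequence token is appended so that the model learns where a response ends.

```python
# Hypothetical template in the widely used Alpaca prompt style.
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n{output}"
)


def format_alpaca(batch, eos_token):
    """Render a batch of Alpaca-style columns into a list of training strings.

    Assumes `batch` maps "instruction", "input", and "output" to equal-length
    lists, which is the shape Dataset.map(..., batched=True) passes in.
    """
    texts = []
    for instruction, inp, out in zip(batch["instruction"], batch["input"], batch["output"]):
        prompt = ALPACA_TEMPLATE.format(instruction=instruction, input=inp, output=out)
        # Append EOS so generation learns to stop after the response.
        texts.append(prompt + eos_token)
    return {"text": texts}
```

Once the tokenizer is loaded, you could apply this with `dataset = dataset.map(lambda batch: format_alpaca(batch, tokenizer.eos_token), batched=True)`, which adds a text column to the dataset.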

Initializing the Llama 3.2 3B Model

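Load the model in a memory-efficient 4-bit format, with the maximum sequence length set to 2048 tokens:

```python
from unsloth import FastLanguageModel
model, tokenizer = FastLanguageModel.from_pretrained(
    "unsloth/Llama-3.2-3B-Instruct-bnb-4bit", max_seq_length=2048, load_in_4bit=True)
```

Loading pre-quantized 4-bit weights substantially reduces the memory taken by the model weights compared with 16-bit weights. This leaves more GPU memory for longer training sequences.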
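A back-of-the-envelope estimate of weight memory shows why 4-bit loading matters. The sketch below is a rough calculation, not a measurement. It assumes roughly 3.2 billion parameters (an approximation for Llama 3.2 3B) and ignores quantization metadata, activations, optimizer state, and any layers kept in higher precision.

```python
# Rough estimate of weight memory only; ignores activations, optimizer state,
# quantization scales, and layers kept in higher precision.
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 3.2e9  # assumed, approximate parameter count for Llama 3.2 3B

if __name__ == "__main__":
    for bits in (16, 4):
        print(f"{bits}-bit weights: ~{weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

Under these assumptions, the weights take about 6.4 GB at 16 bits and about 1.6 GB at 4 bits.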

Configuring LoRA with Unsloth

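Attach Low-Rank Adaptation (LoRA) adapters. Only a small set of added low-rank weights is trained, while the 4-bit base model stays frozen:

```python
model = FastLanguageModel.get_peft_model(
    model, r=16, lora_alpha=16,  # adapter rank and scaling numerator
    use_gradient_checkpointing="unsloth",  # Unsloth's memory-saving checkpointing
)
```

With `r=16` and `lora_alpha=16`, each adapted layer learns a rank-16 update scaled by `lora_alpha / r = 1`. Training only the adapters keeps memory use low. Unsloth's gradient checkpointing reduces activation memory further, which makes training on longer contexts feasible.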
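To see how few parameters LoRA actually trains, you can count them. For a linear layer with input size d_in and output size d_out, LoRA adds an r × d_in matrix A and a d_out × r matrix B, which gives r(d_in + d_out) trainable parameters. The sketch below applies this formula to all seven attention and MLP projections in every layer. The layer sizes (hidden size 3072, MLP size 8192, key/value projection size 1024, 28 layers) are assumed from the published Llama 3.2 3B configuration and should be checked against the model's config.json. Both helper functions are hypothetical.

```python
# Hypothetical helpers: count the trainable parameters LoRA adds.
# Layer sizes used below are assumed from the published Llama 3.2 3B config.

def lora_params_for_linear(d_in: int, d_out: int, r: int) -> int:
    """LoRA adds A (r x d_in) and B (d_out x r); the original weight stays frozen."""
    return r * d_in + d_out * r


def lora_param_count(hidden: int, intermediate: int, kv_dim: int,
                     n_layers: int, r: int) -> int:
    """Total LoRA parameters when all seven attention/MLP projections are adapted."""
    projections = [
        (hidden, hidden),        # q_proj
        (hidden, kv_dim),        # k_proj
        (hidden, kv_dim),        # v_proj
        (hidden, hidden),        # o_proj
        (hidden, intermediate),  # gate_proj
        (hidden, intermediate),  # up_proj
        (intermediate, hidden),  # down_proj
    ]
    per_layer = sum(lora_params_for_linear(i, o, r) for i, o in projections)
    return per_layer * n_layers


if __name__ == "__main__":
    total = lora_param_count(hidden=3072, intermediate=8192, kv_dim=1024, n_layers=28, r=16)
    print(f"{total:,} trainable LoRA parameters")
```

Under these assumptions, the count is 24,313,856, well under 1% of the roughly 3.2 billion parameters in the base model.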

Mounting Google Drive

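Enable access to your Google Drive (this works only in Google Colab):

```python
from google.colab import drive

drive.mount("/content/drive")
```

Once mounted, your Drive is available under /content/drive/MyDrive. Training outputs saved there persist after the Colab session ends.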
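If the notebook might also run outside Colab, where no Drive is mounted, a small helper can fall back to a local folder. This is a hypothetical sketch: choose_output_dir is not part of any library. The default /content/drive/MyDrive is where Colab exposes your Drive files after `drive.mount("/content/drive")`.

```python
from pathlib import Path


# Hypothetical helper: prefer the mounted Google Drive, else use a local folder.
def choose_output_dir(name: str,
                      drive_root: str = "/content/drive/MyDrive",
                      local_root: str = ".") -> Path:
    """Return (and create) an output directory for training artifacts."""
    drive_dir = Path(drive_root)
    base = drive_dir if drive_dir.is_dir() else Path(local_root)  # Drive only if mounted
    out = base / name
    out.mkdir(parents=True, exist_ok=True)
    return out
```

For example, `choose_output_dir("lora_model")` returns a folder on Drive when Drive is mounted, and ./lora_model otherwise.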

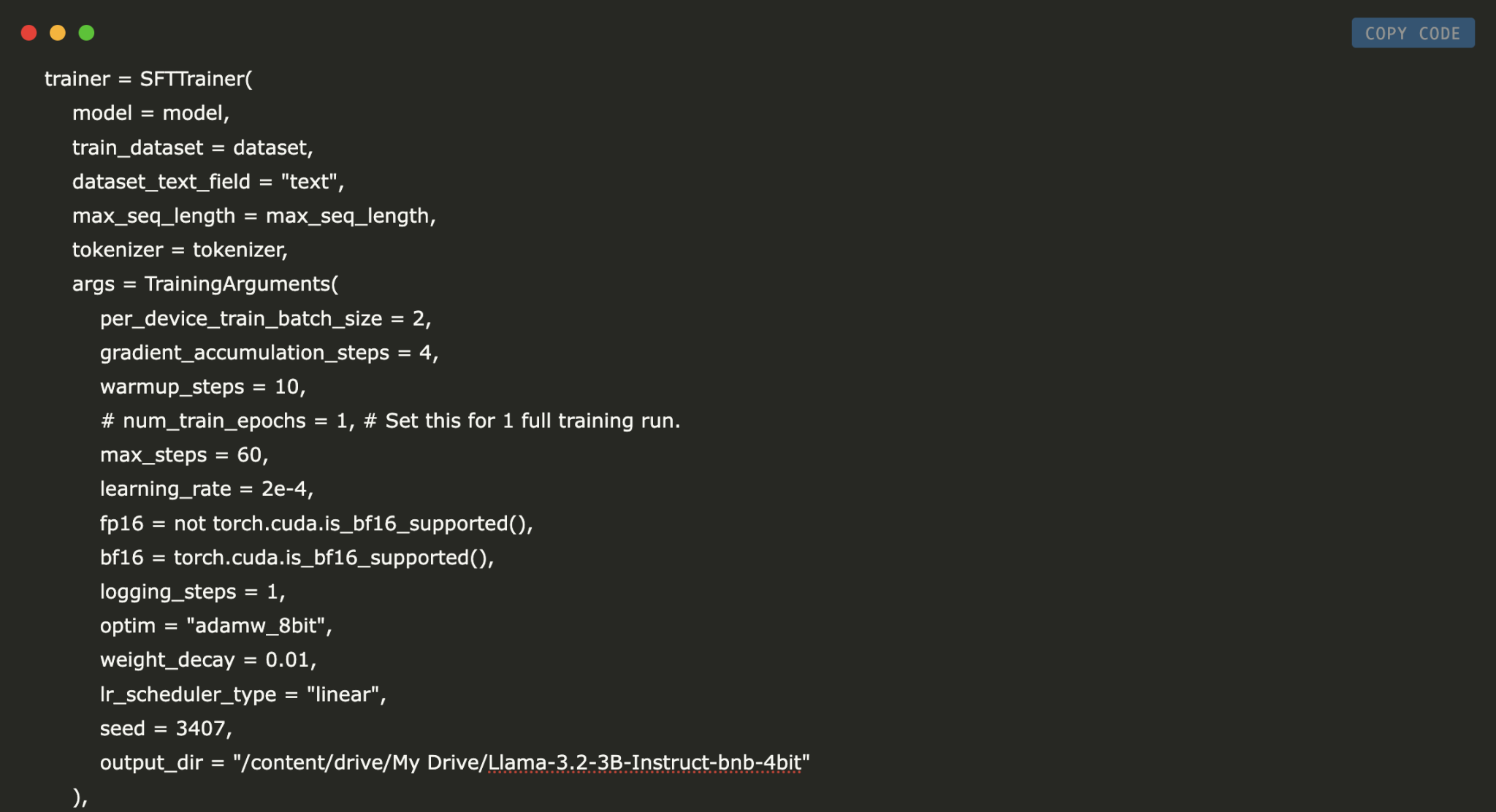

Setting Up and Running the Training Loop

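Create a trainer, passing training parameters such as batch size and learning rate through a TrainingArguments object (the values below are illustrative):

```python
from transformers import TrainingArguments
from trl import SFTTrainer
args = TrainingArguments(output_dir="outputs", per_device_train_batch_size=2, learning_rate=2e-4)
trainer = SFTTrainer(model=model, train_dataset=dataset, args=args)
```

Recent TRL versions read training examples from the dataset's "text" column by default, so the dataset should provide one. Then, start the training process with: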

```python
trainer.train()
```

This fine-tunes the LoRA adapters on your dataset while the base model weights stay frozen.
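Batch size directly affects how long training takes. Roughly, the number of optimizer steps per epoch is the dataset size divided by the effective batch size, which is the per-device batch size × gradient accumulation steps (`gradient_accumulation_steps` in TrainingArguments) × the number of devices. The helper below is hypothetical, and its result should be treated as an estimate. The 143,000-example figure is an assumption inferred from the dataset name and should be checked against `len(dataset)`.

```python
import math


# Hypothetical helper: rough number of optimizer steps in one epoch.
def steps_per_epoch(n_examples: int, per_device_batch: int,
                    grad_accum: int = 1, n_devices: int = 1) -> int:
    """Dataset size divided by the effective batch size, rounded up."""
    effective_batch = per_device_batch * grad_accum * n_devices
    return math.ceil(n_examples / effective_batch)


if __name__ == "__main__":
    # 143,000 is assumed from the dataset name; check len(dataset) in practice.
    print(steps_per_epoch(143_000, per_device_batch=2, grad_accum=4))
```

Here the effective batch size is 2 × 4 = 8, which gives 17,875 steps per epoch.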

Saving the Fine-Tuned Model

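Once training is complete, save the LoRA adapters and the tokenizer:

```python
model.save_pretrained("lora_model")      # stores only the LoRA adapter weights and config
tokenizer.save_pretrained("lora_model")
```

Because only the adapters are saved, the folder is much smaller than the full model. Loading it on top of the same base model lets you reuse the fine-tuned model without retraining.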
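Before reusing the folder, you can sanity-check that it contains an adapter. This hypothetical sketch assumes PEFT's default file names: adapter_config.json, plus adapter_model.safetensors or the older adapter_model.bin.

```python
from pathlib import Path

# Hypothetical check: does a folder look like a saved PEFT/LoRA adapter?
# File names assume PEFT's defaults (safetensors, or the older .bin format).
ADAPTER_CONFIG = "adapter_config.json"
ADAPTER_WEIGHTS = ("adapter_model.safetensors", "adapter_model.bin")


def looks_like_lora_adapter(folder: str) -> bool:
    """True if the folder has an adapter config and at least one weights file."""
    path = Path(folder)
    has_config = (path / ADAPTER_CONFIG).is_file()
    has_weights = any((path / name).is_file() for name in ADAPTER_WEIGHTS)
    return has_config and has_weights
```

After saving, `looks_like_lora_adapter("lora_model")` should return True.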

Conclusion

This tutorial demonstrated how to fine-tune the Llama 3.2 3B Instruct model for Python code using Unsloth and LoRA. Because only small LoRA adapters are trained on top of a 4-bit base model, the process fits in modest GPU memory while adapting a compact model to coding tasks. Unsloth reduces memory usage, and Hugging Face tools simplify dataset handling and training.
