Enhancing Efficiency and Performance with Binarized Large Language Models

Addressing Challenges with Quantization

Transformer-based LLMs such as ChatGPT and LLaMA perform well across many domain-specific tasks, but their size imposes heavy computational and storage costs. Quantization addresses this by representing each parameter with fewer bits (for example, storing 16-bit floating-point weights as 4-bit or 1-bit values), which shrinks storage and speeds up computation. Extreme quantization, down to 1 bit per weight, maximizes efficiency but reduces accuracy; partial binarization methods limit that loss by keeping key parameters at full precision.
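To make the trade-off concrete, here is a minimal sketch of sign-based binarization and the raw storage arithmetic behind it. The mean-absolute-value scale, the example weights, and the 7-billion-parameter count are illustrative assumptions, not figures taken from the paper.

```python
def binarize(weights):
    """Map each weight to +1/-1 and return one full-precision scale.

    Using the mean absolute value as the scale is an assumed, common choice.
    """
    signs = [1 if w >= 0 else -1 for w in weights]
    scale = sum(abs(w) for w in weights) / len(weights)
    return signs, scale


def storage_gb(num_params, bits_per_param):
    """Raw weight storage in gigabytes (10**9 bytes), ignoring metadata."""
    return num_params * bits_per_param / 8 / 1e9


weights = [0.42, -0.17, 0.03, -0.88]          # hypothetical weights
signs, scale = binarize(weights)
approx = [scale * s for s in signs]           # dequantized approximation

fp16_gb = storage_gb(7_000_000_000, 16)       # 16 bits per weight
binary_gb = storage_gb(7_000_000_000, 1)      # 1 bit per weight
print(signs, scale, fp16_gb, binary_gb)
```

The 16x reduction is an upper bound on the savings: any scales or modules kept at full precision add to the binary model's footprint. The gap between `weights` and `approx` is the information that binarization discards, which is where the accuracy loss comes from.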

Introducing Fully Binarized Large Language Models (FBI-LLM)

Researchers from Mohamed bin Zayed University of AI and Carnegie Mellon University introduce FBI-LLM, which trains large-scale binary language models from scratch and achieves performance competitive with full-precision models. The framework uses autoregressive distillation while keeping model dimensions and training data equivalent to those of regular LLM pretraining, so the remaining performance gap to full-precision models is small.

Optimizing Neural Network Binarization

Neural network binarization significantly improves efficiency and reduces storage, but often at the cost of accuracy. Techniques like BinaryConnect and Binarized Neural Networks (BNN) use stochastic methods and clipping functions to train binary models. Recent approaches like BitNet and OneBit employ quantization-aware training for better performance.
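The clipping idea used when training binary networks can be sketched in a few lines. The forward pass uses the sign of a full-precision "latent" weight, while the backward pass lets the gradient through unchanged only when the latent weight lies inside a clipping range. The one-weight model, the squared-error loss, and the learning rate below are hypothetical choices made for illustration.

```python
def sign(x):
    """Binarize a value to +1.0 or -1.0."""
    return 1.0 if x >= 0 else -1.0


def clipped_grad(latent_w, upstream_grad, clip=1.0):
    """Treat sign() as the identity in the backward pass, but block the
    gradient when the latent weight is outside [-clip, clip]."""
    return upstream_grad if abs(latent_w) <= clip else 0.0


def train_step(latent_w, x, target, lr=0.1):
    """One SGD step on a single-weight model with squared-error loss."""
    w_b = sign(latent_w)                 # forward pass uses the binary weight
    pred = w_b * x
    grad_pred = 2.0 * (pred - target)    # derivative of (pred - target)**2
    grad_wb = grad_pred * x              # gradient w.r.t. the binary weight
    grad_latent = clipped_grad(latent_w, grad_wb)
    return latent_w - lr * grad_latent   # update the full-precision copy


w = 0.05                                 # hypothetical initial latent weight
for _ in range(3):
    w = train_step(w, x=1.0, target=-1.0)
print(w, sign(w))
```

Only the latent weight is updated; the binary weight changes when the latent value crosses zero. Once the binary prediction matches the target, the gradient vanishes and the latent weight stops moving.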

FBI-LLM Methodology

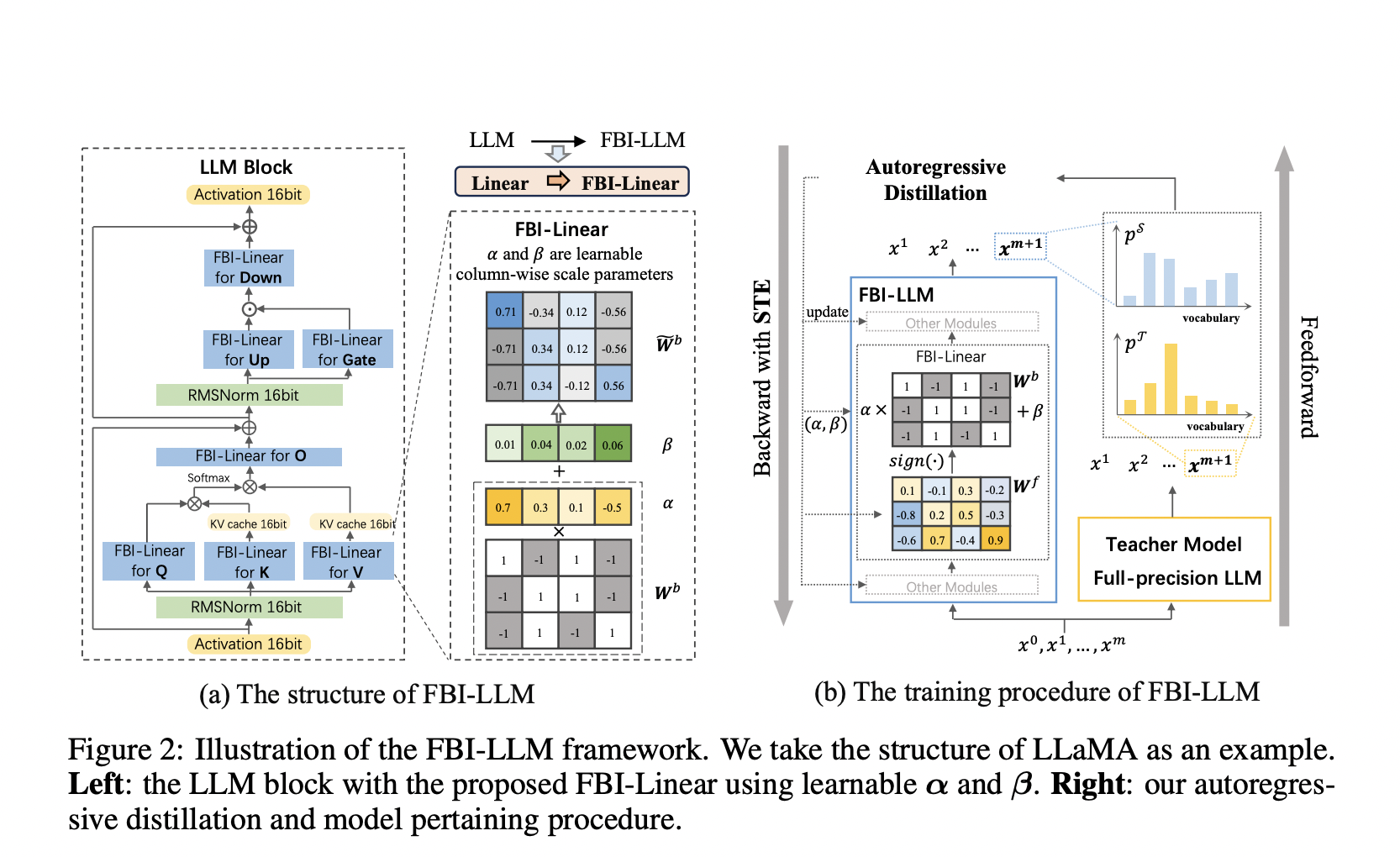

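FBI-LLM modifies a standard LLM by replacing its linear modules with FBI-linear layers, while keeping components such as the embedding and layer norm modules at full precision to preserve semantic information and activation scaling. Training uses autoregressive distillation: a full-precision teacher model guides the binarized student through a cross-entropy loss between their next-token predictions. Because the sign function has zero gradient almost everywhere, the Straight-Through Estimator (STE) is used to pass gradients through the binarization step.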
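The two pieces described above, a binarized linear layer and a teacher-student cross-entropy, can be sketched in plain Python. This is an illustrative reading rather than the authors' implementation: the per-column scale `alpha` and shift `beta`, the function names, and the toy tensors are all assumptions, and a single layer stands in for the whole student network.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized vector."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def fbi_linear(x, latent_w, alpha, beta):
    """y_j = sum_i x_i * (alpha_j * sign(W_ij) + beta_j).

    latent_w is indexed [input i][output j]; alpha and beta are assumed
    full-precision, per-output-column scale and shift parameters.
    """
    out = []
    for j in range(len(alpha)):
        acc = 0.0
        for i, xi in enumerate(x):
            w_b = 1.0 if latent_w[i][j] >= 0 else -1.0
            acc += xi * (alpha[j] * w_b + beta[j])
        out.append(acc)
    return out


def distill_loss(teacher_logits, student_logits):
    """Mean over token positions of the cross-entropy between the teacher's
    and the student's next-token distributions."""
    total = 0.0
    for t_row, s_row in zip(teacher_logits, student_logits):
        log_p_t = log_softmax(t_row)
        log_p_s = log_softmax(s_row)
        total -= sum(math.exp(lt) * ls for lt, ls in zip(log_p_t, log_p_s))
    return total / len(teacher_logits)


# Toy setup: 2 token positions, hidden size 2, vocabulary size 2.
hidden = [[1.0, 2.0], [0.5, -1.0]]
latent_w = [[0.3, -0.2], [-0.1, 0.5]]
alpha = [0.5, 0.25]
beta = [0.0, 0.1]

student_logits = [fbi_linear(h, latent_w, alpha, beta) for h in hidden]
teacher_logits = [[-1.0, 1.0], [0.5, -0.5]]   # stand-in for a real teacher
loss = distill_loss(teacher_logits, student_logits)
print(student_logits, loss)
```

The loss is smallest when the student's distribution matches the teacher's at every position, so minimizing it pulls the binarized model toward the full-precision model's predictions rather than toward one-hot token labels.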

Experimental Results

Experimental results show that FBI-LLM outperforms existing baselines across different model sizes, achieving competitive zero-shot accuracy and perplexity while offering substantial compression relative to full-precision LLMs.

Challenges and Considerations

Binarization unavoidably leads to performance degradation compared to full-precision models, and the distillation process adds computational overhead. Ethical concerns surrounding pretrained LLMs persist even after binarization.
