The FalconMamba 7B: Revolutionizing AI with Practical Solutions and Unmatched Value

Introduction

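FalconMamba 7B is a 7-billion-parameter language model from the Technology Innovation Institute (TII), built on the Mamba state space architecture rather than on attention. It avoids the memory and latency growth that attention-based models incur on long contexts, and it is accessible to researchers and developers globally.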

Key Features

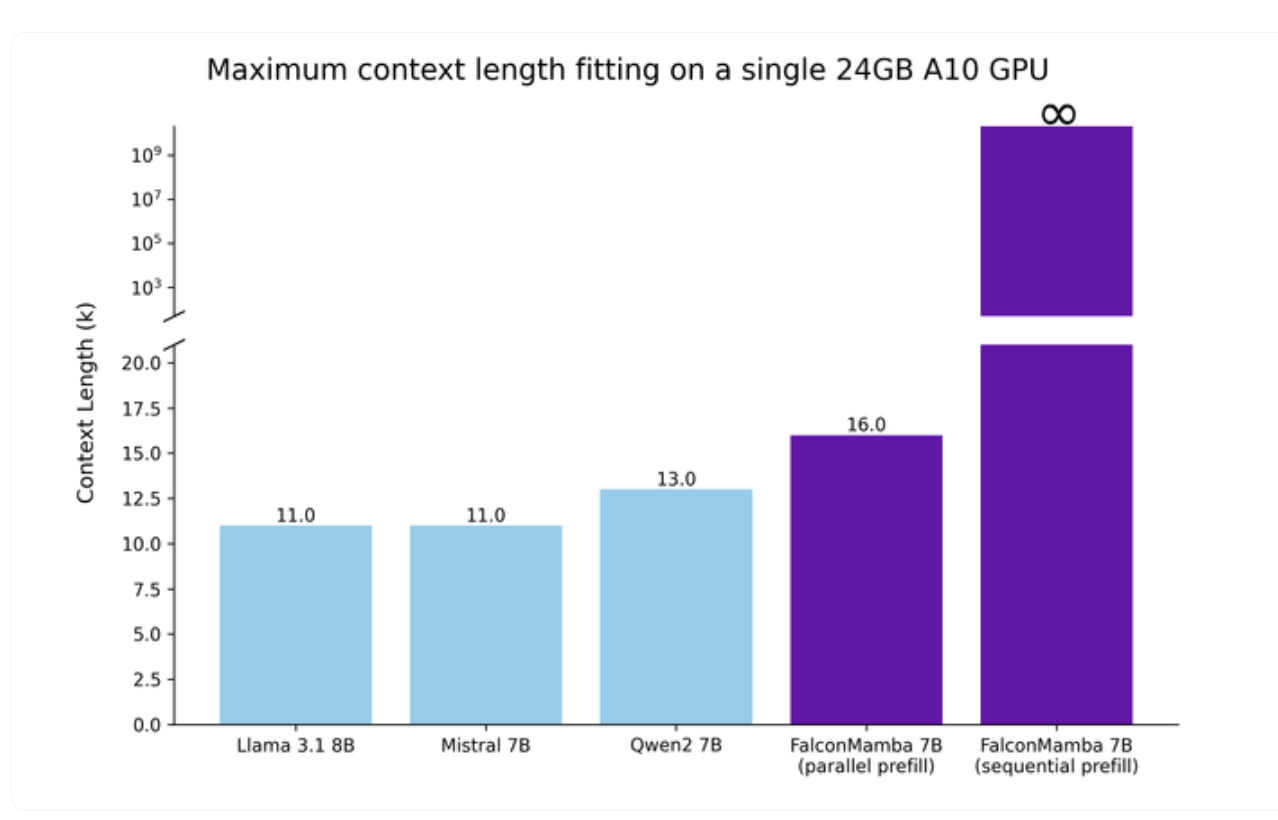

The architecture processes very long sequences without any increase in memory use, so the model fits on a single A10 24GB GPU.

Generating each new token takes a constant amount of time regardless of context size, because the model does not need to attend to all previous tokens.
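To make the constant per-token cost concrete, here is a minimal toy sketch in plain Python. It is not FalconMamba's actual layer: the state size, the `decay` and `gain` values, and both function names are hypothetical, chosen only to contrast a fixed-size recurrent state with an attention-style cache that grows by one entry per token.

```python
STATE_SIZE = 4  # hypothetical toy state size, not the real model's


def recurrent_decode(tokens, decay=0.9, gain=0.1):
    """Process tokens with a fixed-size state; work per token is constant."""
    state = [0.0] * STATE_SIZE
    work = 0
    for x in tokens:
        # The new state depends only on the old state and the current input.
        state = [decay * s + gain * x for s in state]
        work += STATE_SIZE  # one update per state entry
    return len(state), work


def attention_decode(tokens):
    """Process tokens with a growing cache; each token reads the whole cache."""
    cache = []
    work = 0
    for x in tokens:
        cache.append(x)
        work += len(cache)  # the new token looks at every cached token
    return len(cache), work


for n in (10, 1000):
    toks = [1.0] * n
    print(n, recurrent_decode(toks), attention_decode(toks))
```

In this toy, the recurrent state stays at 4 entries however many tokens arrive, while the attention-style cache holds one entry per token. For 1000 tokens the attention-style loop does 500500 units of work against 4000 for the recurrent loop.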

It handles long inputs efficiently and supports bitsandbytes quantization to fit within smaller GPU memory budgets.
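A rough back-of-the-envelope calculation shows why quantization matters on a 24 GB card. The sketch below assumes a nominal parameter count of exactly 7 billion (the real count may differ slightly) and counts weights only; activations, quantization metadata, and framework overhead are ignored. The function name `weight_memory_gb` is illustrative.

```python
NOMINAL_PARAMS = 7_000_000_000  # assumption: "7B" taken as exactly 7e9 parameters
GPU_MEMORY_GB = 24              # the A10 card mentioned in the text


def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


for label, bits in (("16-bit", 16), ("8-bit", 8), ("4-bit", 4)):
    gb = weight_memory_gb(NOMINAL_PARAMS, bits)
    print(f"{label}: about {gb:.1f} GB of weights, "
          f"{GPU_MEMORY_GB - gb:.1f} GB left on a {GPU_MEMORY_GB} GB card")
```

Under these assumptions the weights take about 14 GB at 16-bit precision, 7 GB at 8-bit, and 3.5 GB at 4-bit, which is why lower-precision loading opens the door to smaller GPUs.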

Performance and Benchmarks

In evaluations, FalconMamba 7B performed strongly, particularly on tasks that require long-sequence processing.

It outperformed other state-of-the-art models on benchmarks such as MATH, MMLU, IFEval, and BBH.

Practical Applications

The model suits applications that process long inputs. It is compatible with the Hugging Face transformers library, which makes it accessible to academic researchers and industry professionals alike.

An instruction-tuned version follows instructions more precisely and effectively, and inference can be accelerated with torch.compile.
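As a rough illustration of the transformers workflow, the sketch below loads the model and optionally wraps it with `torch.compile`. Several things here are assumptions rather than details from this article: the Hub identifier `tiiuae/falcon-mamba-7b` (an instruction-tuned checkpoint would be passed in the same way), the helper names `load_falcon_mamba` and `generate_text`, the bfloat16 precision, and the availability of a CUDA GPU and a recent transformers release. Whether compilation actually speeds up generation depends on the installed versions.

```python
def load_falcon_mamba(model_id="tiiuae/falcon-mamba-7b", compile_model=False):
    """Load tokenizer and model; model_id is an assumed Hub identifier."""
    # Imports are deferred so the heavy dependencies load only when called.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    model = model.to("cuda")  # assumes a CUDA GPU with enough memory
    if compile_model:
        # Optional: ask PyTorch to compile the model for faster inference.
        model = torch.compile(model)
    return tokenizer, model


def generate_text(tokenizer, model, prompt, max_new_tokens=30):
    """Generate a continuation of the prompt and return it as a string."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example usage (downloads the weights, so it is left commented out):
# tokenizer, model = load_falcon_mamba(compile_model=True)
# print(generate_text(tokenizer, model, "Question: What is a state space model?\nAnswer:"))
```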

Conclusion

FalconMamba 7B combines an attention-free architecture, strong benchmark performance, and broad accessibility, which positions it to make a substantial impact on long-context workloads across many sectors.
