Practical Solutions and Value of In-Context Reinforcement Learning in Large Language Models

Key Highlights:

– Large language models (LLMs) can learn in context across domains such as translation and reinforcement learning.

– Understanding how LLMs implement reinforcement learning remains a challenge.

– Sparse autoencoders help analyze the internal representations behind LLMs’ learning processes.

– Researchers focus on mechanisms behind LLMs’ reinforcement learning abilities.
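
To make the sparse autoencoder idea concrete, here is a minimal NumPy sketch of the forward pass and loss. It is an illustration under stated assumptions, not the researchers' implementation: the layer sizes, the L1 coefficient, the function names, and the random stand-in "activations" are all hypothetical.

```python
import numpy as np

def init_sae(d_model, d_latent, seed=0):
    """Randomly initialise a toy sparse autoencoder (sizes are hypothetical)."""
    rng = np.random.default_rng(seed)
    return {
        "W_enc": rng.normal(0.0, 0.1, size=(d_model, d_latent)),
        "b_enc": np.zeros(d_latent),
        "W_dec": rng.normal(0.0, 0.1, size=(d_latent, d_model)),
        "b_dec": np.zeros(d_model),
    }

def sae_forward(params, x):
    """Encode activations into non-negative latents, then reconstruct them."""
    pre = (x - params["b_dec"]) @ params["W_enc"] + params["b_enc"]
    latents = np.maximum(0.0, pre)  # ReLU keeps latents non-negative
    recon = latents @ params["W_dec"] + params["b_dec"]
    return latents, recon

def sae_loss(params, x, l1_coeff=1e-3):
    """Reconstruction error plus an L1 penalty that encourages sparse latents."""
    latents, recon = sae_forward(params, x)
    mse = np.mean(np.sum((x - recon) ** 2, axis=1))
    sparsity = np.mean(np.sum(np.abs(latents), axis=1))
    return mse + l1_coeff * sparsity

# Stand-in for a batch of model activations (random numbers, not real LLM data).
activations = np.random.default_rng(1).normal(size=(8, 16))
params = init_sae(d_model=16, d_latent=64)
latents, recon = sae_forward(params, activations)
loss = sae_loss(params, activations)
```

In practice the parameters would be trained by gradient descent on this loss, and the learned latents would then be inspected for features that track quantities of interest.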

Value Proposition:

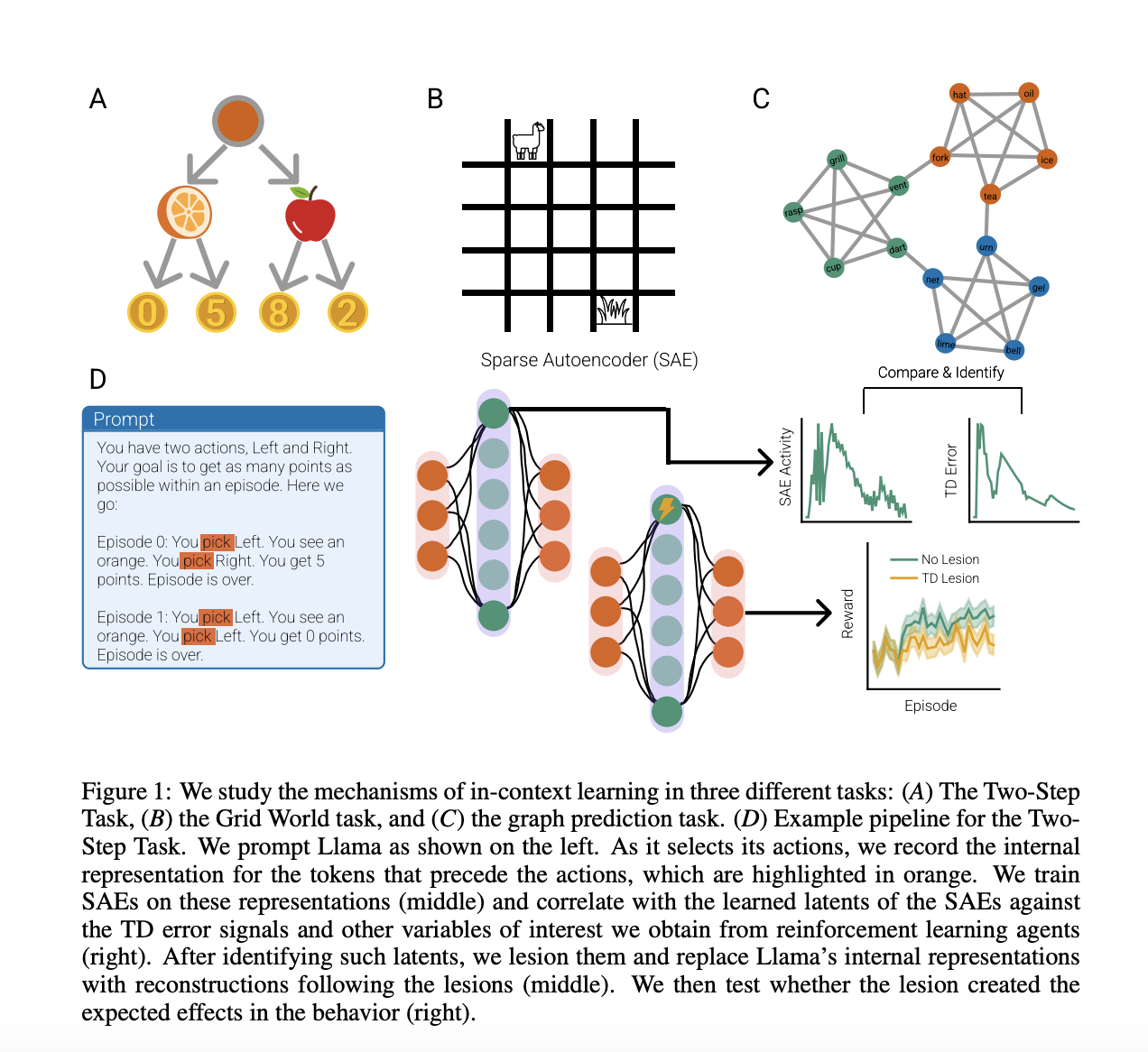

– Researchers use sparse autoencoders to study how LLMs learn reinforcement learning.

– Analyzing Llama 3 70B yields insights into LLMs’ reinforcement learning mechanisms.

– Llama can learn complex tasks such as grid navigation through reinforcement learning.

– The Successor Representation concept is used to show that Llama can learn structural knowledge.
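
For readers unfamiliar with the Successor Representation (SR), the sketch below computes it in closed form for a small grid. It assumes a uniform random-walk policy, a 3x3 grid, and a discount factor of 0.9; these choices and the function names are illustrative and are not taken from the study.

```python
import numpy as np

def random_walk_transitions(n_rows, n_cols):
    """Row-stochastic transition matrix for a uniform random walk on a grid."""
    n = n_rows * n_cols
    T = np.zeros((n, n))
    for r in range(n_rows):
        for c in range(n_cols):
            s = r * n_cols + c
            # Keep only the up/down/left/right moves that stay on the grid.
            neighbours = [
                (r + dr, c + dc)
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))
                if 0 <= r + dr < n_rows and 0 <= c + dc < n_cols
            ]
            for nr, nc in neighbours:
                T[s, nr * n_cols + nc] = 1.0 / len(neighbours)
    return T

def successor_representation(T, gamma):
    """Closed-form SR: M = (I - gamma * T)^-1, the discounted expected future state visits."""
    return np.linalg.inv(np.eye(T.shape[0]) - gamma * T)

gamma = 0.9  # assumed discount factor
T = random_walk_transitions(3, 3)
M = successor_representation(T, gamma)
```

Entry `M[s, s2]` is the discounted number of times state `s2` is expected to be visited when starting from `s`, so each row summarizes the grid's structure from that state's point of view.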

Practical Applications:

– Training sparse autoencoders on model activations helps explain how LLMs carry out reinforcement learning.

– Analyzing Llama’s performance in various tasks provides valuable insights.

– Linking LLM learning mechanisms to biological agents’ computations enhances understanding.

– Collaboration opportunities for promoting AI solutions to a wide audience are available.

Get in Touch:

– For AI KPI management advice, contact us at hello@itinai.com.

– Stay updated on leveraging AI by following us on Telegram @itinainews or Twitter @itinaicom.