The Guardian has conducted an investigation into the use of AI and complex algorithms in the UK’s public sector decision-making processes. The findings reveal a chaotic and unsupervised application of these technologies across multiple departments, leading to discriminatory outcomes. Examples include an algorithm that incorrectly suspended benefits and facial recognition technology with higher error rates when identifying Black faces. The report warns of potential scandals and emphasizes the need for transparency and understanding of these algorithms to prevent unjust outcomes. The article also gives global examples of algorithmic bias and stresses the importance of thorough and transparent implementation of AI in public services.

Evidence of AI Misuse Unearthed in the UK Public Sector

An investigation by The Guardian has revealed the widespread and unsupervised use of AI and complex algorithms in various public sector decision-making processes in the UK. This chaotic application of advanced technologies across multiple departments has raised concerns about discriminatory outcomes.

Examples of AI Misuse in the UK

The investigation has highlighted several instances of AI tools leading to unjust outcomes:

- The Department for Work and Pensions (DWP) used an algorithm that resulted in individuals losing their benefits incorrectly.

- The Metropolitan Police’s facial recognition tool had a higher error rate when identifying Black faces than white faces.

- The Home Office used an algorithm that disproportionately targeted individuals from specific nationalities in its efforts to uncover fraudulent marriages for benefits and tax breaks.

These examples demonstrate how AI can perpetuate biases already present in the data it is built on, leading to discriminatory results.

Risks for the Public Sector

The unchecked use of AI in government departments and police forces raises concerns about accountability, transparency, and bias. Automated processes that lack clear explanations, avenues for appeal, and transparency can lead to unjust outcomes, as seen in the Department for Work and Pensions (DWP) case and in the Robodebt scandal in Australia.

The corporate world has also seen AI-driven tools fail: Amazon’s AI recruitment tool, for example, displayed bias against female candidates. These examples highlight the importance of careful and transparent implementation of AI to mitigate the risks of bias and discrimination.

Examples of Algorithmic Bias to Be Wary Of

As AI and algorithmic decision-making become more integrated into societal processes, alarming examples of bias and discrimination are emerging:

- In the US healthcare system, a widely used algorithm exhibited racial bias, resulting in less care being provided to Black patients with the same level of need as their white counterparts.

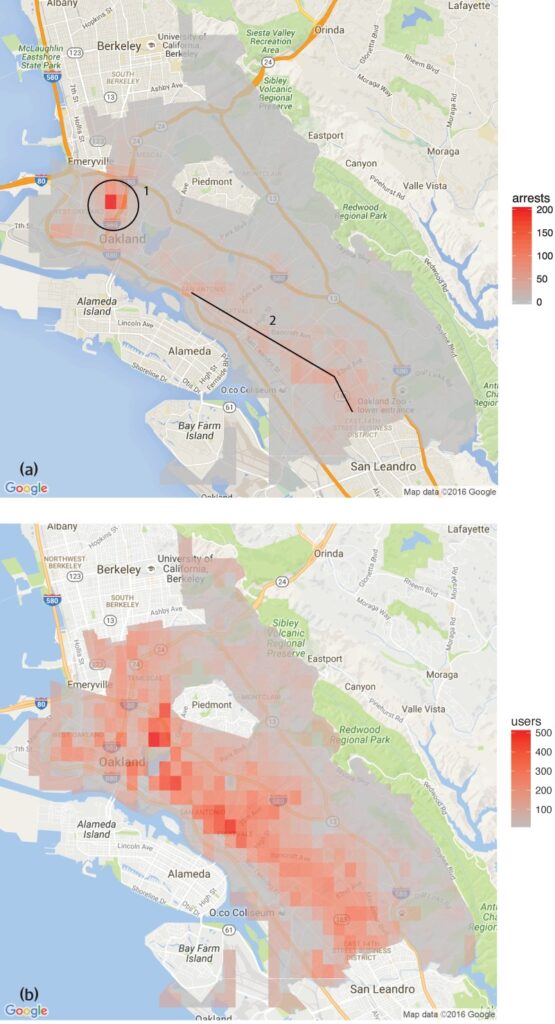

- Predictive policing and judicial sentencing tools have been found to be biased against African-American defendants, raising concerns about the fairness and impartiality of AI-assisted decision-making.

- In São Paulo, Brazil, the Smart Sampa project, which integrates AI and surveillance, risks exacerbating structural racism and inequality by misidentifying darker skin tones and disproportionately targeting the Black community.

- The Dutch government’s use of the System Risk Indication (SyRI) algorithm to detect potential welfare fraud raised concerns about human rights implications and the lack of transparency and accountability in algorithmic decision-making.

Transparent and Fair Testing of AI Tools

To ensure accountability, prevent discrimination, and maintain public trust, it is crucial to implement transparent and fair testing protocols for AI tools. Transparent testing gives a comprehensive understanding of how algorithms function and helps identify potential biases or discriminatory practices. Fair testing ensures that tools are evaluated across diverse scenarios and demographics to avoid marginalizing any particular group.
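As a rough illustration of what evaluating a tool "across demographics" can look like in practice, the sketch below compares error rates between groups. It is a minimal example under stated assumptions, not a description of any audit used by the bodies named in this article: the record format, the group labels, the function names `error_rates_by_group` and `max_gap`, and the sample data are all hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return false positive and false negative rates for each group.

    Each record is a hypothetical (group, actual, predicted) tuple,
    where actual and predicted are booleans.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, actual, predicted in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            if not predicted:
                c["fn"] += 1  # real case the tool missed
        else:
            c["neg"] += 1
            if predicted:
                c["fp"] += 1  # person wrongly flagged

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in one metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Made-up audit sample: (group, actual outcome, tool's decision).
records = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", False, True), ("group_a", False, False),
    ("group_a", True, True), ("group_a", True, True),
    ("group_b", False, True), ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True), ("group_b", True, False),
]

rates = error_rates_by_group(records)
for group, group_rates in sorted(rates.items()):
    print(group, group_rates)
print("false positive gap:", max_gap(rates, "false_positive_rate"))
```

A large gap between groups does not explain why a tool behaves differently, but it is the kind of signal that should trigger closer scrutiny before deployment. Which metric matters most, and what size of gap is acceptable, are policy choices this sketch does not settle.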

While comprehensive testing procedures may seem at odds with the efficiency promised by AI, it is essential to prioritize scrutiny and analysis to avoid unjust outcomes and loss of public trust.
