Practical Solutions and Value of EuroLLM Project

Creating Multilingual Language Models

The EuroLLM project aims to develop language models that understand and generate text in various European languages and other important languages like Arabic, Chinese, and Russian.

Data Collection and Filtering

Diverse datasets were collected and filtered to train EuroLLM models, ensuring quality and language coverage. This included web data, parallel data, code/math data, and high-quality data.

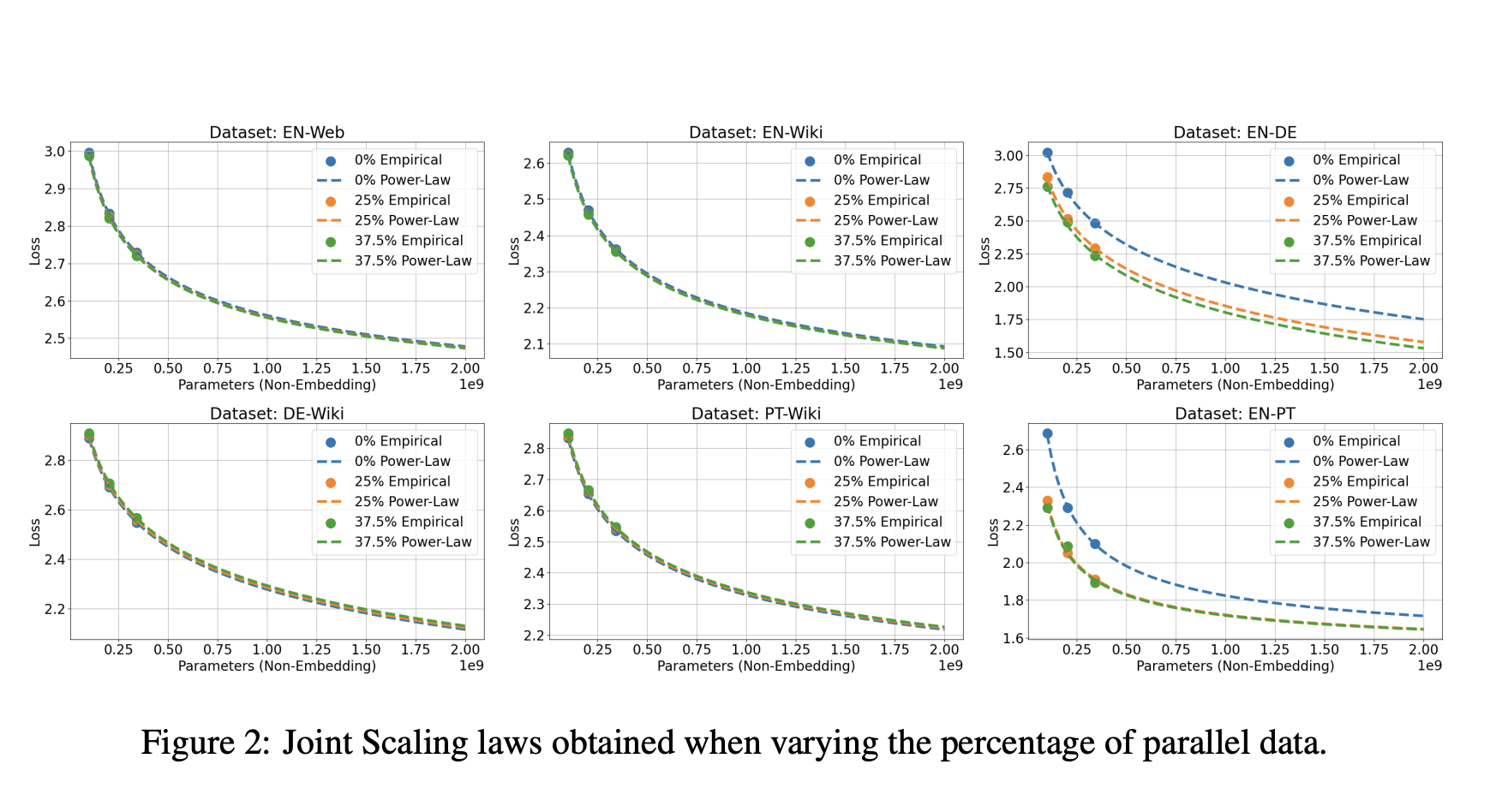

Data Mixture

The training corpus was balanced with data from different languages and domains, enhancing multilingual capabilities. English data was gradually reduced to improve cross-language alignment.

Tokenizer

A multilingual tokenizer with a large vocabulary was developed, enabling efficient handling of multiple languages and improving multilingual support.

Model Configuration

EuroLLM models use a specialized Transformer architecture with enhancements like grouped query attention and SwiGLU activation function for better results. They were pre-trained on a large dataset using advanced GPU technology.

Post-Training and Fine-Tuning

The models were fine-tuned on specific datasets to improve performance, including becoming instruction-following conversational models.

Results and Future Work

Evaluation results showed the effectiveness of EuroLLM models in understanding and generating text in multiple languages. Future work will focus on scaling up models and enhancing data quality for improved performance.