Introduction to Multimodal Foundation Models

Multimodal foundation models are becoming central to artificial intelligence because a single model can process several types of data, such as images, text, and audio, and apply them to a range of tasks. However, they still struggle to generalize across data types and tasks.

Challenges in Current Models

Many existing models are trained on limited datasets and a small set of data types, so their performance degrades when new types of data are added. This makes them hard to scale and their results inconsistent, which highlights the need for frameworks that can integrate different data types without losing performance.

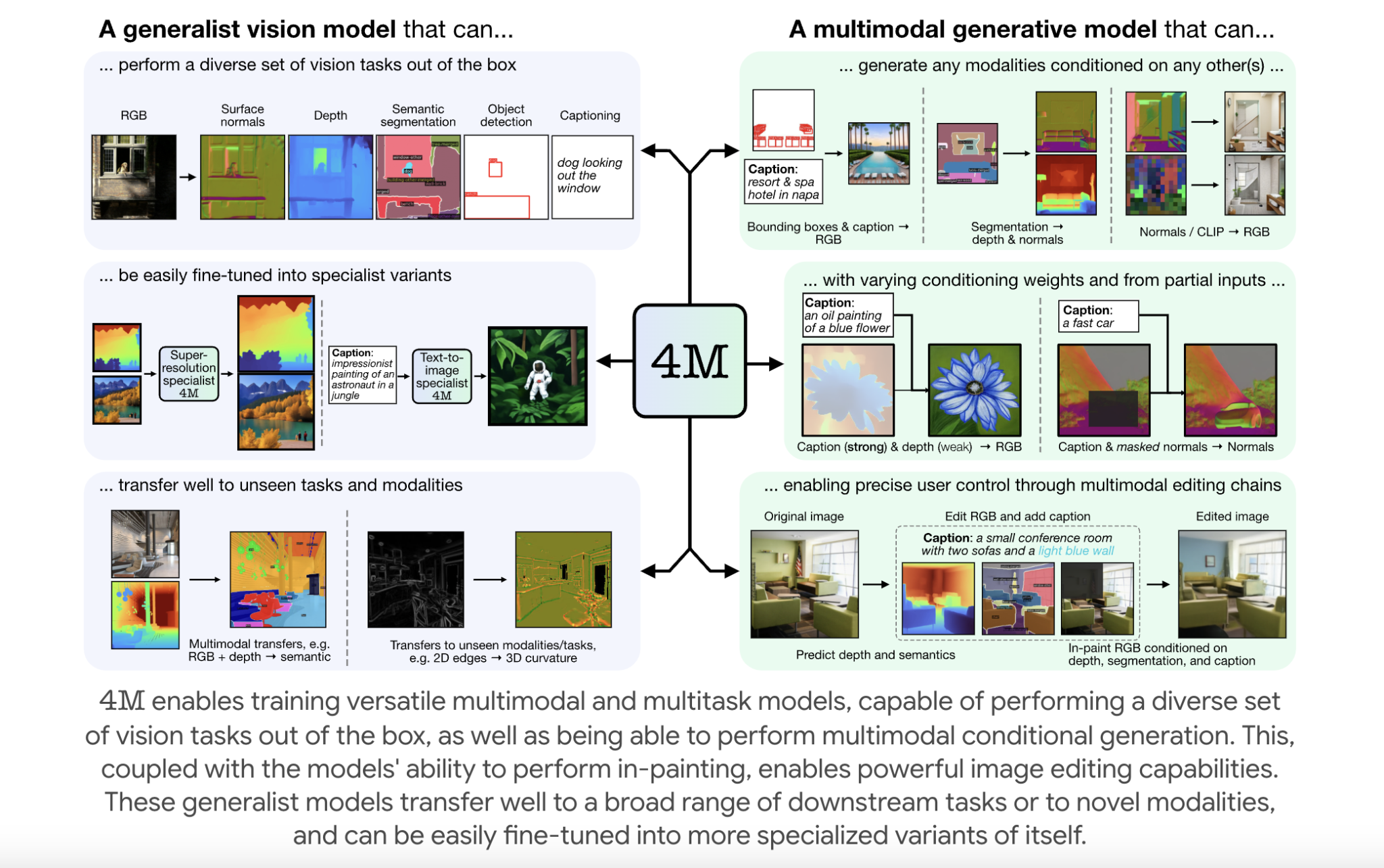

Introducing 4M Framework

Researchers at EPFL have developed 4M, an open-source framework for training adaptable and scalable multimodal models. Unlike traditional models that focus on a few tasks, 4M supports 21 different data types, which significantly expands what a single model can do.

Key Features of 4M

One of 4M’s main innovations is its discrete tokenization process, which turns various data types into a single sequence of tokens. This allows for efficient training using a Transformer-based architecture across multiple data types. The framework simplifies training and avoids task-specific components, balancing scalability and efficiency.
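To make the idea of a single token sequence concrete, here is a minimal sketch of one simple way to merge tokens from several data types into a shared ID space. It is not 4M's actual implementation: the vocabulary sizes, the modality names, and the helper functions `build_offsets` and `to_unified_sequence` are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical per-modality vocabulary sizes (illustrative, not 4M's real values).
VOCAB_SIZES: Dict[str, int] = {"rgb": 1024, "caption": 30000, "depth": 1024}


def build_offsets(vocab_sizes: Dict[str, int]) -> Tuple[Dict[str, int], int]:
    """Give each modality its own contiguous ID range in one shared vocabulary."""
    offsets: Dict[str, int] = {}
    total = 0
    for name, size in vocab_sizes.items():
        offsets[name] = total
        total += size
    return offsets, total


def to_unified_sequence(
    tokens_by_modality: Dict[str, List[int]],
    offsets: Dict[str, int],
    vocab_sizes: Dict[str, int],
) -> List[Tuple[str, int]]:
    """Concatenate per-modality tokens into one sequence of (modality, shared_id)."""
    sequence: List[Tuple[str, int]] = []
    for name, tokens in tokens_by_modality.items():
        for token in tokens:
            if not 0 <= token < vocab_sizes[name]:
                raise ValueError(f"token {token} is out of range for {name}")
            sequence.append((name, offsets[name] + token))
    return sequence


OFFSETS, TOTAL_VOCAB = build_offsets(VOCAB_SIZES)

# Pretend each modality was already tokenized into local IDs by its own tokenizer.
example = {"rgb": [5, 17], "caption": [101, 2023], "depth": [0]}
unified = to_unified_sequence(example, OFFSETS, VOCAB_SIZES)
print(unified)
```

Because every token ends up in the same ID space, a single Transformer can consume the whole sequence without a separate input head per data type.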

Technical Advantages

The 4M framework uses an encoder-decoder Transformer architecture designed for multimodal masked modeling. Each data type is first tokenized by an encoder suited to it, so that images, text, and metadata can all be fed to the same model.
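Masked modeling can be illustrated with a small sketch: from a sequence of tokens, a random subset is shown to the encoder and a disjoint subset is held out for the decoder to predict. The function `sample_masked_split`, its parameters, and the toy token list below are hypothetical, and the sampling strategy used in 4M itself may differ.

```python
import random
from typing import List, Optional, Tuple

Token = Tuple[str, int]  # (modality name, token id), purely illustrative


def sample_masked_split(
    sequence: List[Token],
    num_inputs: int,
    num_targets: int,
    seed: Optional[int] = None,
) -> Tuple[List[Tuple[int, Token]], List[Tuple[int, Token]]]:
    """Pick disjoint random positions: visible inputs and held-out targets."""
    if num_inputs + num_targets > len(sequence):
        raise ValueError("not enough tokens for the requested split")
    rng = random.Random(seed)
    positions = list(range(len(sequence)))
    rng.shuffle(positions)
    input_positions = sorted(positions[:num_inputs])
    target_positions = sorted(positions[num_inputs:num_inputs + num_targets])
    inputs = [(p, sequence[p]) for p in input_positions]
    targets = [(p, sequence[p]) for p in target_positions]
    return inputs, targets


# Toy sequence mixing three data types (token ids are made up).
tokens: List[Token] = [
    ("rgb", 5), ("rgb", 17), ("rgb", 42),
    ("caption", 101), ("caption", 2023),
    ("depth", 0), ("depth", 9),
]

visible, held_out = sample_masked_split(tokens, num_inputs=3, num_targets=2, seed=0)
print("encoder sees:    ", visible)
print("decoder predicts:", held_out)
```

Since inputs and targets are drawn from the whole sequence, a training step can ask the model to predict tokens of one data type from tokens of another.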

Fine-Grained Control and Scalability

4M also enables precise data generation by allowing users to condition outputs based on specific data types, such as human poses. Additionally, it supports cross-modal retrieval, letting users query one data type (like text) to find relevant information in another (like images).
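Cross-modal retrieval is commonly implemented by comparing embeddings from different data types in a shared vector space. The sketch below assumes such embeddings already exist; the file names, the vectors, and the functions `cosine_similarity` and `retrieve` are invented for illustration and do not come from the 4M codebase.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(
    query: List[float],
    gallery: Dict[str, List[float]],
    top_k: int = 1,
) -> List[Tuple[str, float]]:
    """Rank gallery items by similarity to the query and return the best top_k."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in gallery.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Made-up image embeddings and a made-up embedding of a text query.
IMAGE_EMBEDDINGS: Dict[str, List[float]] = {
    "beach.png": [0.9, 0.1, 0.0],
    "forest.png": [0.1, 0.9, 0.1],
    "city.png": [0.0, 0.2, 0.9],
}
TEXT_QUERY = [0.8, 0.2, 0.1]

best = retrieve(TEXT_QUERY, IMAGE_EMBEDDINGS, top_k=2)
for name, score in best:
    print(f"{name}: {score:.3f}")
```

The same ranking function works in either direction, for example using an image embedding as the query against a gallery of text embeddings.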

4M also scales well: it is trained on large datasets such as COYO700M and CC12M, covering more than 0.5 billion samples, with models of up to three billion parameters. This scale makes it well suited to complex multimodal tasks.

Performance Results

4M performs well across a range of tasks. Its semantic segmentation results match or exceed those of specialized models, even though it handles three times as many tasks. Its pretrained encoders also transfer well, maintaining high accuracy on both familiar and new tasks.

Applications

The framework’s versatility makes it suitable for fields like autonomous systems and healthcare, where integrating different types of data is essential.

Conclusion

The 4M framework represents a major advancement in multimodal AI. By addressing scalability and integration challenges, it opens new opportunities for flexible and efficient AI systems. Its open-source nature encourages collaboration and further innovation in the field.

