The Value of Vision-Language Models

Vision-Language Models in Practical Applications

Research on vision-language models (VLMs) is gaining momentum because of their potential in practical applications, such as assistive tools for visually impaired individuals.

Challenges in Model Evaluations

Current evaluations of VLMs do not yet fully account for two sources of complexity: scenes that contain multiple objects and diverse cultural contexts.

Practical Solutions and Value

Multi-Object Hallucination

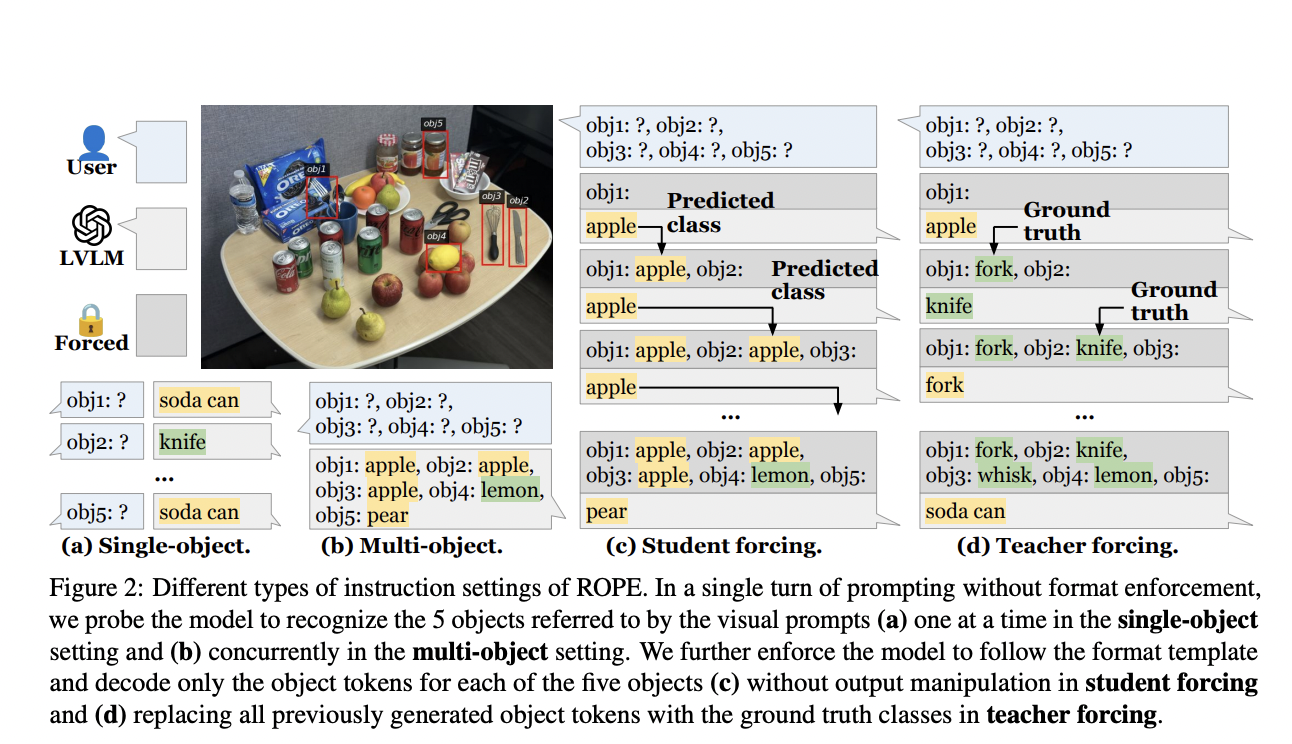

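ROPE Protocol: Introducing an automated evaluation protocol, Recognition-based Object Probing Evaluation (ROPE), that accounts for the distribution of object classes within an image and uses visual prompts to indicate which objects the model is asked about.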

Data Diversity: Ensuring balanced object distributions and diverse annotations in training datasets.
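
The two points above, evaluation that accounts for object class distributions and balanced training data, can be illustrated with a small Python sketch. It is not the ROPE implementation. The record format (`truth` and `predicted` lists per image), the function names, and the homogeneous/heterogeneous grouping are all assumptions made for illustration.

```python
from collections import Counter, defaultdict
from math import log


def normalized_entropy(labels):
    """Return class balance in [0, 1]; 1.0 means all classes are equally frequent."""
    counts = Counter(labels)
    if len(counts) < 2:
        return 0.0  # zero or one class: no balance to speak of
    total = sum(counts.values())
    entropy = -sum((c / total) * log(c / total) for c in counts.values())
    return entropy / log(len(counts))


def accuracy_by_distribution(samples):
    """Group per-object accuracy by whether the probed objects in an image
    share one class ("homogeneous") or mix classes ("heterogeneous")."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for sample in samples:
        truth, predicted = sample["truth"], sample["predicted"]
        group = "homogeneous" if len(set(truth)) == 1 else "heterogeneous"
        for t, p in zip(truth, predicted):
            totals[group] += 1
            hits[group] += int(t == p)
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical toy data: ground-truth and predicted classes for the
# objects probed in each image (assumed format, not from any benchmark).
samples = [
    {"truth": ["apple", "apple", "apple"], "predicted": ["apple", "apple", "apple"]},
    {"truth": ["apple", "knife", "cup"], "predicted": ["apple", "apple", "cup"]},
]

print(accuracy_by_distribution(samples))
print(normalized_entropy([t for s in samples for t in s["truth"]]))
```

Reporting accuracy separately per group makes it visible when a model does well on images with repeated classes but degrades on mixed scenes. The entropy score gives a quick check on whether a dataset's object classes are roughly balanced.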

Cultural Inclusivity in Vision-Language Models

User-Centered Surveys: Incorporating feedback from visually impaired individuals to determine caption preferences.

Cultural Annotations: Enhancing datasets with culture-specific annotations to improve the cultural competence of VLMs.

Conclusion

Integrating vision-language models into applications for visually impaired users holds great promise, but realizing it depends on addressing both technical and cultural challenges. By adopting comprehensive evaluation frameworks and building cultural inclusivity into model training and assessment, researchers and developers can create more reliable and user-friendly VLMs.
