Advancements in Language Models

Large Language Models (LLMs) have greatly improved how we process natural language. They excel in tasks like answering questions, summarizing information, and engaging in conversations. However, their increasing size and need for computational power reveal challenges in managing large amounts of information, especially for complex reasoning tasks.

Introducing Retrieval-Augmented Generation (RAG)

To tackle these challenges, Retrieval-Augmented Generation (RAG) combines retrieval systems with generative models. This approach allows models to access external knowledge, enhancing their performance in specific areas without needing extensive retraining. However, smaller models often struggle with reasoning in complex situations, limiting their effectiveness.
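As a rough illustration of the retrieve-then-generate idea, the sketch below ranks passages by word overlap with the question and assembles a prompt for a generator. It is a toy, not the retrieval method used in the study: the function names (`overlap_score`, `retrieve`, `build_prompt`) and the overlap scoring are assumptions made for this example, and a real system would typically use a dense or BM25 retriever.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, passage: str) -> int:
    """Toy relevance score: number of query tokens also found in the passage."""
    q = Counter(tokenize(query))
    p = Counter(tokenize(passage))
    return sum(min(q[t], p[t]) for t in q)


def retrieve(query: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages with the highest overlap score."""
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: List[str], k: int = 2) -> str:
    """Assemble retrieved passages and the question into a single prompt string."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
```

The prompt returned by `build_prompt` would then be passed to whichever generative model is in use; that call is left out because it depends on the model and serving stack.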

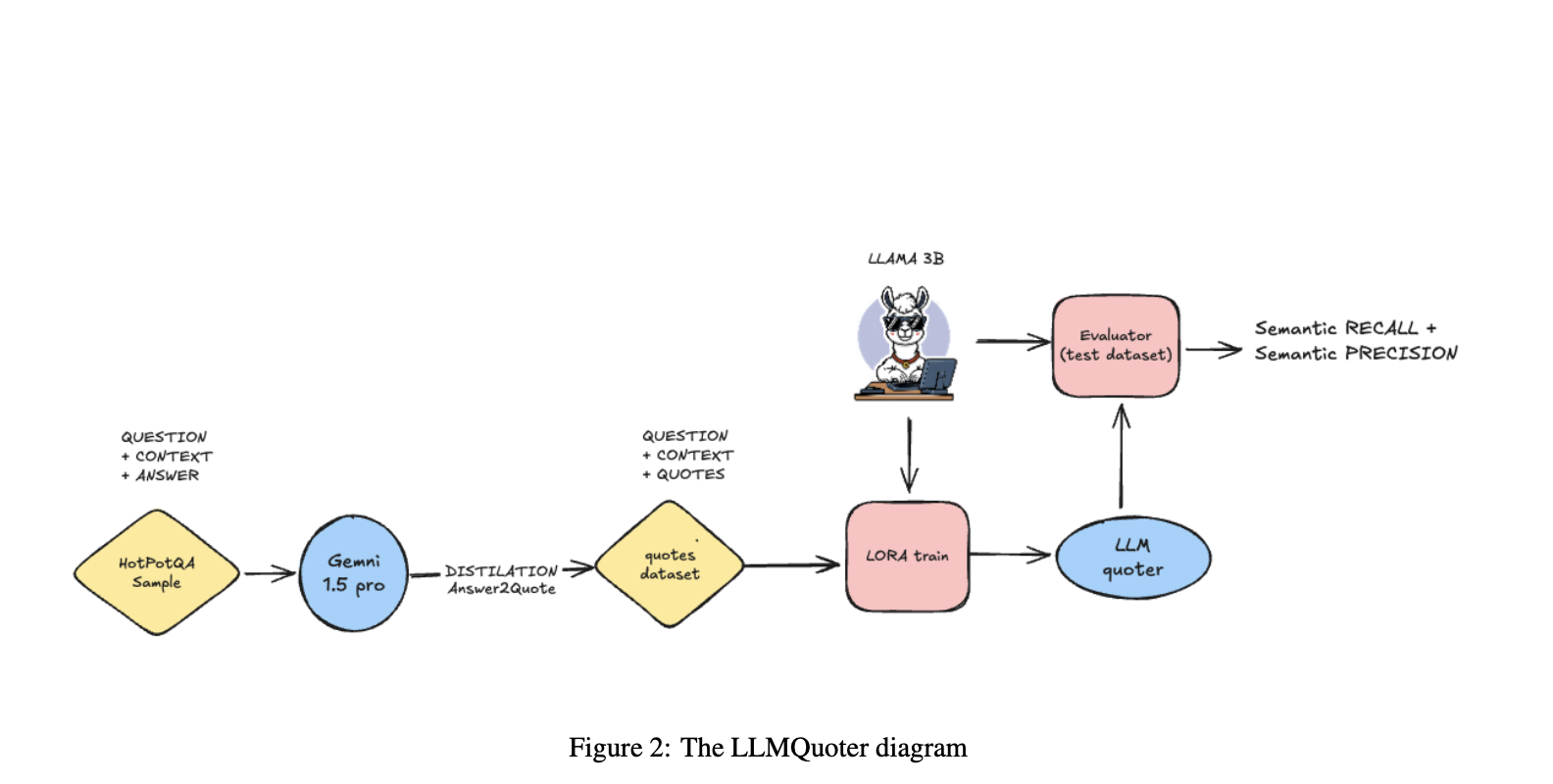

LLMQuoter: A Practical Solution

Researchers from TransLab at the University of Brasilia have developed LLMQuoter, a lightweight model that improves RAG by using a “quote-first-then-answer” strategy. Built on the LLaMA-3B architecture and fine-tuned with Low-Rank Adaptation (LoRA), LLMQuoter first identifies the key evidence in the retrieved context and only then passes it on for reasoning, which reduces cognitive load and increases accuracy. The model improves accuracy by more than 20 points over traditional methods while remaining resource-efficient.
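The two-stage flow can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the authors' implementation: `quoter` and `reasoner` are hypothetical callables standing in for the fine-tuned quote extractor and the downstream answering model, and the verbatim-match filter is a design choice added here to keep the evidence grounded in the source text.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures: both callables take a question plus some text.
Quoter = Callable[[str, str], List[str]]   # (question, context) -> quotes
Reasoner = Callable[[str, str], str]       # (question, evidence) -> answer


def quote_first_then_answer(
    question: str, context: str, quoter: Quoter, reasoner: Reasoner
) -> Tuple[str, List[str]]:
    """Extract quotes first, then answer using only the extracted quotes."""
    quotes = quoter(question, context)
    # Assumption for this sketch: drop any quote that is not a verbatim
    # substring of the context, so the reasoner never sees invented text.
    grounded = [q for q in quotes if q in context]
    evidence = "\n".join(grounded)
    return reasoner(question, evidence), grounded
```

Because the reasoner receives only the short evidence string rather than the full context, it has less text to sift through, which is the intuition behind the reduced cognitive load described above.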

Addressing Reasoning Challenges

Reasoning is a key challenge for LLMs. Large models may struggle with complex logical tasks, while smaller models face limitations in maintaining context. Techniques like split-step reasoning and task-specific fine-tuning help break down tasks into manageable parts, improving efficiency and accuracy. Frameworks like RAFT (Retrieval-Augmented Fine-Tuning) enhance context-aware responses, especially in specialized applications.

Knowledge Distillation for Efficiency

Knowledge distillation is crucial for making LLMs more efficient. It transfers skills from larger models to smaller ones, allowing them to perform complex tasks with less computational power. Techniques like rationale-based distillation improve the performance of compact models. Evaluations show that models trained to extract relevant quotes perform better than those processing full contexts.
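One way to picture distillation for quote extraction is as dataset construction: a larger teacher model labels each sample with supporting quotes, and the smaller student is fine-tuned on the resulting prompt and completion pairs. The sketch below covers only that data-building step and is an assumption-laden illustration: the `teacher` callable, its signature, the sample keys, and the prompt template are all hypothetical and are not taken from the study.

```python
from typing import Callable, Dict, List

# Hypothetical teacher: (question, context, answer) -> list of supporting quotes.
Teacher = Callable[[str, str, str], List[str]]


def build_distillation_examples(
    samples: List[Dict[str, str]], teacher: Teacher
) -> List[Dict[str, str]]:
    """Turn teacher-extracted quotes into fine-tuning pairs for a student model."""
    examples = []
    for s in samples:
        quotes = teacher(s["question"], s["context"], s["answer"])
        # Keep only quotes copied verbatim from the context (sketch assumption).
        quotes = [q for q in quotes if q in s["context"]]
        if not quotes:
            continue  # Skip samples where the teacher found no usable evidence.
        prompt = f"Context:\n{s['context']}\n\nQuestion: {s['question']}\nQuotes:"
        examples.append({"prompt": prompt, "completion": "\n".join(quotes)})
    return examples
```

The returned list could then be fed to a standard supervised fine-tuning routine; the training loop itself is omitted because it depends on the chosen framework.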

Significant Improvements with Quote Extraction

The study highlights the effectiveness of quote extraction in enhancing RAG systems. Fine-tuning a compact model with minimal resources led to notable improvements in recall, precision, and F1 scores. For example, using extracted quotes instead of the full context increased accuracy from 24.4% to 62.2% for the LLaMA 1B model, a gain of 37.8 percentage points. This “divide and conquer” strategy simplifies reasoning, allowing even less optimized models to perform well.
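To make the recall, precision, and F1 figures concrete, here is a simple token-overlap version of those metrics for extracted quotes versus reference quotes. This is a stand-in chosen for illustration and may differ from the evaluation actually used in the study; the function name `quote_prf` and the tokenization rule are assumptions.

```python
import re
from collections import Counter
from typing import List, Tuple


def tokens(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def quote_prf(predicted: List[str], gold: List[str]) -> Tuple[float, float, float]:
    """Token-level precision, recall, and F1 between predicted and gold quotes."""
    pred = Counter(t for q in predicted for t in tokens(q))
    ref = Counter(t for q in gold for t in tokens(q))
    overlap = sum((pred & ref).values())  # Multiset intersection of tokens.
    precision = overlap / sum(pred.values()) if pred else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1
```

Precision drops when the extractor quotes text that is not in the reference, and recall drops when it misses reference text, so F1 rewards quotes that are both relevant and complete.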

Future Research Directions

Future research may explore diverse datasets and incorporate reinforcement learning techniques to enhance scalability. Advancing prompt engineering can further improve quote extraction and reasoning processes. This approach also has potential applications in memory-augmented RAG systems, making high-performing NLP systems more scalable and efficient.

