Enhancing Language Models with RAG: Best Practices and Benchmarks

Challenges in RAG Techniques

Retrieval-augmented generation (RAG) techniques still struggle to integrate up-to-date information, reduce hallucinations, and improve response quality in large language models (LLMs). These difficulties hinder real-time applications in specialized domains such as medical diagnosis.

Current Methods and Limitations

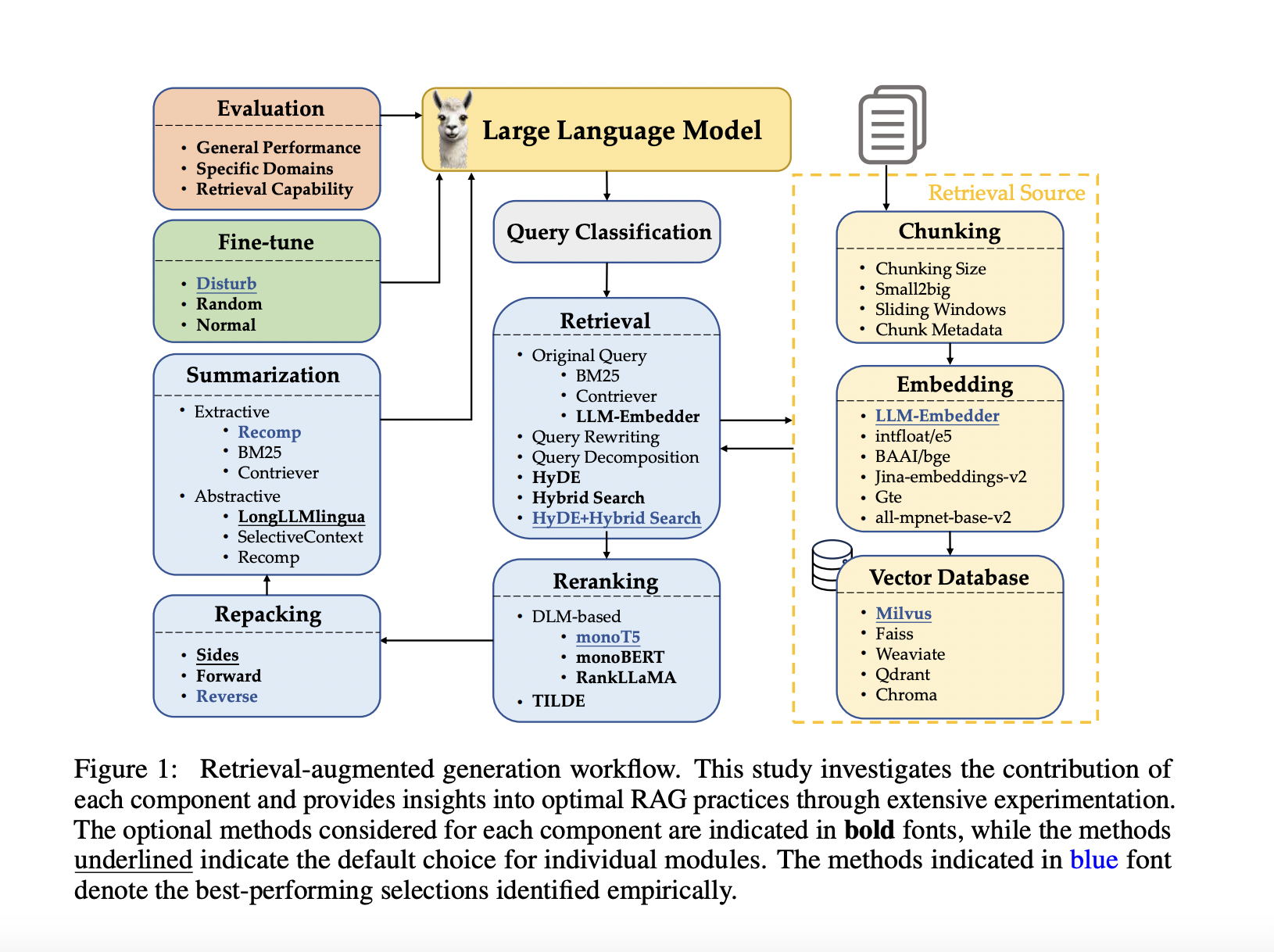

Current methods chain several steps: query classification, retrieval, reranking, repacking, and summarization. However, they are complex to implement, slow to respond, and struggle to balance performance against response time.
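The five stages named above can be sketched as a chain of small functions. This is a minimal, toy illustration and not the study's implementation: every function name, the keyword-overlap scoring, the "ends with a question mark" classifier, and the reversed repacking order are assumptions made for the example. A real system would replace each stage with a trained classifier, a dense or sparse retriever, a neural reranker, and an LLM-based summarizer.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def needs_retrieval(query: str) -> bool:
    """Query classification (toy heuristic): only questions trigger retrieval."""
    return query.strip().endswith("?")


def retrieve(query: str, corpus: list[Doc], k: int = 3) -> list[Doc]:
    """Retrieval: keep the k documents sharing the most tokens with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def rerank(query: str, docs: list[Doc]) -> list[Doc]:
    """Reranking: reorder candidates by Jaccard similarity to the query."""
    q = tokenize(query)

    def jaccard(doc: Doc) -> float:
        t = tokenize(doc.text)
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    return sorted(docs, key=jaccard, reverse=True)


def repack(docs: list[Doc]) -> list[Doc]:
    """Repacking: one possible ordering, placing the best document last."""
    return list(reversed(docs))


def summarize(docs: list[Doc], max_chars: int = 200) -> str:
    """Summarization (toy): concatenate the texts and truncate to a budget."""
    return " ".join(d.text for d in docs)[:max_chars]


def build_context(query: str, corpus: list[Doc]) -> str:
    """Run all five stages and return the context string for the generator."""
    if not needs_retrieval(query):
        return ""  # the model would answer from its own knowledge
    docs = retrieve(query, corpus)
    docs = rerank(query, docs)
    docs = repack(docs)
    return summarize(docs)
```

Each stage adds latency, which is why the trade-off between answer quality and response time depends on which stages are enabled and how heavy each one is.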

Optimizing RAG

The study adopted a three-step approach: compare candidate methods, evaluate their impact, and explore promising combinations. It also suggested strategies to enhance question-answering capabilities and accelerate content generation, such as integrating multimodal retrieval techniques using a “retrieval as generation” strategy.

Evaluation and Achievements

The evaluation used detailed experimental setups on datasets such as TREC DL 2019 and 2020. The study reports significant improvements across key performance metrics, covering both retrieval effectiveness and efficiency.
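The text above does not name the metrics used. As an illustration only, the sketch below assumes nDCG@10, a common effectiveness measure for collections with graded relevance judgments such as TREC DL. The function names and the example relevance grades are hypothetical.

```python
import math


def dcg_at_k(relevances: list[float], k: int) -> float:
    """Discounted cumulative gain over the first k relevance grades."""
    return sum(
        rel / math.log2(rank + 2)  # rank is 0-based, so the top result divides by log2(2) = 1
        for rank, rel in enumerate(relevances[:k])
    )


def ndcg_at_k(ranked: list[float], judged: list[float], k: int = 10) -> float:
    """Normalized DCG.

    ranked: grades of the returned documents, in the order the system ranked them.
    judged: grades of all judged documents for the query, used for the ideal ranking.
    """
    ideal_dcg = dcg_at_k(sorted(judged, reverse=True), k)
    if ideal_dcg == 0:
        return 0.0  # no relevant documents exist for this query
    return dcg_at_k(ranked, k) / ideal_dcg


# Hypothetical query: the system put a non-relevant document above a highly relevant one.
score = ndcg_at_k(ranked=[0, 3], judged=[3, 0], k=10)
```

A score of 1.0 means the system's ordering matches the ideal ordering within the top k. Efficiency would be measured separately, for example as average response time per query.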

Conclusion and Future Research

This research addresses the challenge of optimizing RAG techniques by proposing new combinations of methods and demonstrating improvements in performance metrics. The integration of multimodal retrieval techniques is presented as a notable direction for further work.
