Practical Solutions for LLMs

Fact-Checking for Accuracy

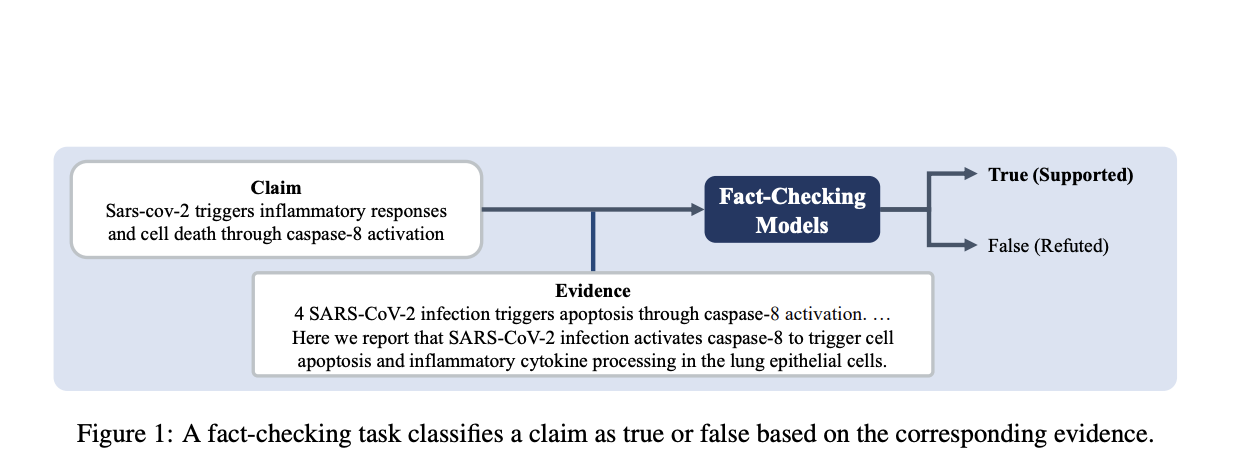

Fact-checking verifies the accuracy of LLM outputs, which matters most in fields such as journalism, law, and healthcare. It helps detect and reduce hallucinations, so that results can be trusted in high-stakes applications.

Parameter-Efficient Methods

Low-Rank Adaptation (LoRA) reduces the compute and memory needed for fine-tuning. It freezes the pretrained weights and trains only small low-rank matrices added to selected layers, rather than updating every parameter of the LLM.
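
As a rough illustration of the low-rank idea, the sketch below keeps one weight matrix frozen and adds a trainable product of two small matrices. The layer sizes, the rank, the scaling value `alpha`, and the function name `lora_forward` are assumptions chosen for the example, not values from the source, and no actual training loop is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes only (assumed, not from the source).
d_out, d_in, rank = 64, 64, 4
alpha = 8  # LoRA scaling hyperparameter (assumed value)

W = rng.normal(size=(d_out, d_in))        # pretrained weight, kept frozen
A = rng.normal(size=(rank, d_in)) * 0.01  # trainable, small random init
B = np.zeros((d_out, rank))               # trainable, zero init so the update starts at zero


def lora_forward(x):
    """Compute W x + (alpha / rank) * B (A x); only A and B would be trained."""
    return W @ x + (alpha / rank) * (B @ (A @ x))


x = rng.normal(size=d_in)
y = lora_forward(x)

full_params = W.size           # parameters touched by full fine-tuning of this layer
lora_params = A.size + B.size  # parameters trained under LoRA for this layer
print(f"full: {full_params}, LoRA: {lora_params}")
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the frozen one; fine-tuning would then update only `A` and `B`.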

Integration of LoRAs

Researchers have explored integrating multiple LoRAs, each trained for a distinct task or viewpoint, with the aim of giving LLMs a more holistic reasoning ability.
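
The text does not say how the LoRAs are combined. One simple possibility is a weighted sum of their low-rank updates applied to the same frozen weight, and the sketch below assumes that form purely for illustration. The three adapters, the mixing coefficients in `weights`, and the helper names `merged_delta` and `forward` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d, rank = 16, 2  # illustrative sizes (assumed)

W = rng.normal(size=(d, d))  # frozen base weight

# Three hypothetical task-specific LoRAs, each stored as a (B, A) pair.
loras = [
    (rng.normal(size=(d, rank)), rng.normal(size=(rank, d)))
    for _ in range(3)
]
weights = np.array([0.5, 0.3, 0.2])  # hypothetical mixing coefficients


def merged_delta(loras, weights):
    """Weighted sum of the low-rank updates B @ A from each LoRA."""
    return sum(w * (B @ A) for w, (B, A) in zip(weights, loras))


def forward(x):
    """Apply the frozen weight plus the combined LoRA update."""
    return (W + merged_delta(loras, weights)) @ x


x = rng.normal(size=d)
y = forward(x)
```

In this form each adapter contributes independently, so any interaction between them has to come from the mixing coefficients alone.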

Value of LoraMap

LoraMap goes beyond integrating LoRAs in parallel. It focuses on the relationships between them, which offers a more sophisticated and efficient way to adapt LLMs for intricate reasoning tasks.

Evolve Your Company with AI

Discover how AI can redefine your work processes: identify automation opportunities, define KPIs, select the right AI solutions, and implement them gradually for a measurable impact on business outcomes.

Connect with Us

For advice on AI KPI management and insights into leveraging AI, connect with us at hello@itinai.com or follow our Telegram channel and Twitter.