Introduction to Large Language Models (LLMs)

Large Language Models (LLMs) are essential tools in customer support, automated content creation, and data retrieval. However, their effectiveness can be limited by challenges in consistently following detailed instructions across multiple interactions, especially in high-stakes environments like financial services.

Challenges Faced by LLMs

LLMs often struggle with recalling instructions, which can lead to deviations from intended behaviors. They may also produce misleading information, known as hallucination, making them less reliable in scenarios that require precise decision-making.

Key Issues

Maintaining reasoning consistency in complex scenarios is a significant challenge. While LLMs perform well with simple queries, their performance declines in multi-turn conversations. Key issues include:

- Alignment Drift: Models may drift away from original instructions, leading to misinterpretations.

- Context Forgetfulness: Recent information may overshadow earlier details, resulting in critical constraints being overlooked.

Current Solutions and Their Limitations

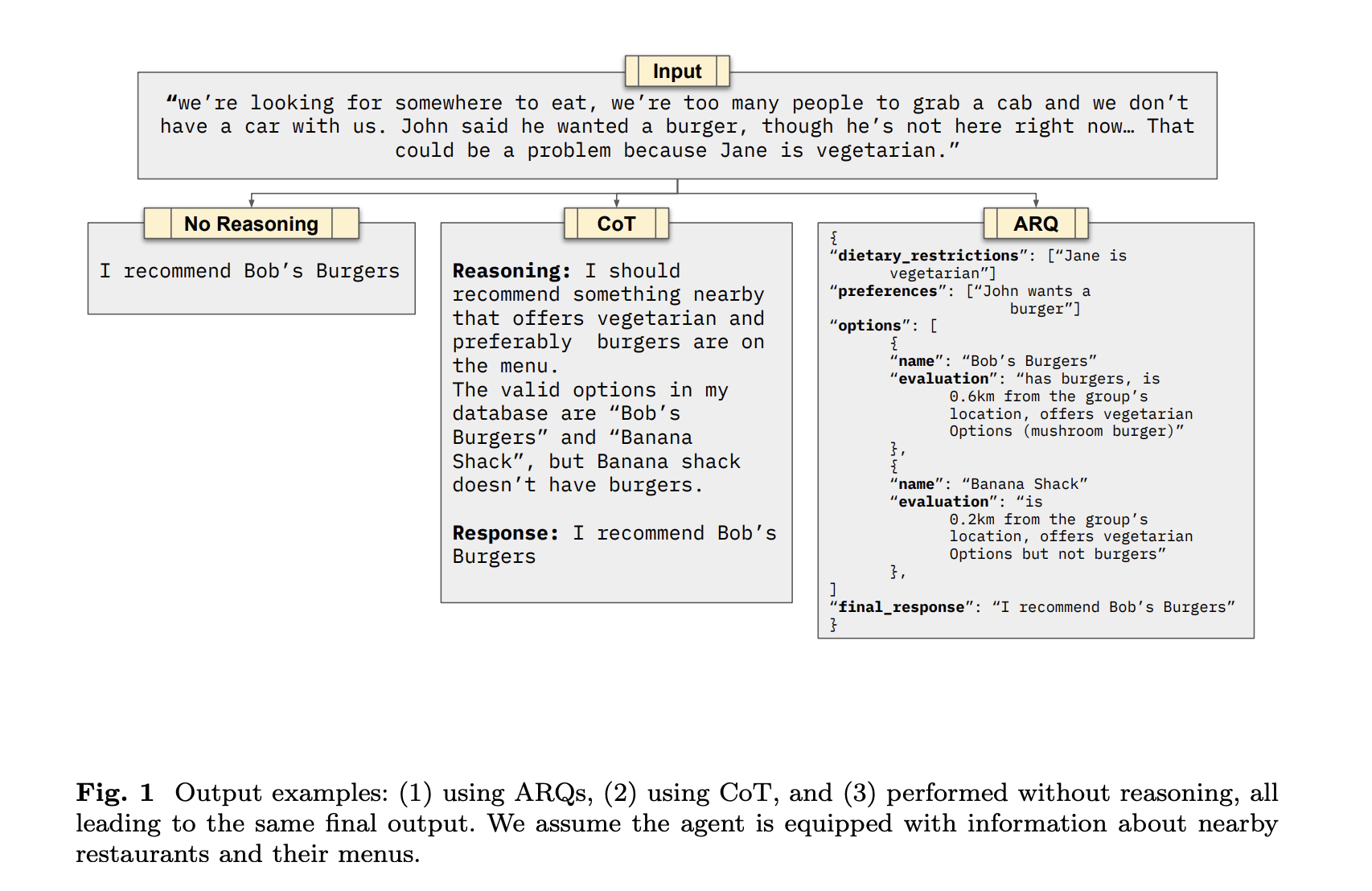

Various prompting techniques have been developed to improve instruction adherence, such as Chain-of-Thought (CoT) prompting and Chain-of-Verification. However, these methods often lack the necessary structure to enforce domain-specific constraints effectively.

Introducing Attentive Reasoning Queries (ARQs)

Researchers at Emcie Co Ltd. have developed Attentive Reasoning Queries (ARQs) to address these limitations. ARQs use a structured reasoning blueprint that guides LLMs through predefined queries, enhancing adherence to guidelines and minimizing errors.

ARQ Framework Overview

The ARQ framework consists of several stages:

- Targeted Queries: Structured queries remind the model of key constraints before generating responses.

- Step-by-Step Processing: The model processes a series of queries to reinforce task-specific reasoning.

- Verification Step: The model checks its response against predefined criteria to ensure correctness.

Performance Evaluation

In tests conducted within the Parlant framework, ARQs achieved a 90.2% success rate across 87 conversational scenarios, outperforming both CoT reasoning (86.1%) and direct response generation (81.5%). ARQs excelled in preventing guideline misapplication and reducing hallucination errors.

Key Takeaways

- ARQs improved instruction adherence, achieving a 90.2% success rate.

- They reduced hallucination errors by 23% compared to CoT.

- In guideline re-application scenarios, ARQs had a success rate of 92.19%.

- ARQs reduced token usage by 29% in classification tasks.

- The verification mechanism helped prevent alignment drift.

Future Research Directions

Future research will focus on optimizing ARQ efficiency and exploring its applications in various AI-driven decision-making systems.

Get Involved

For further information, check out the Paper and GitHub Page. Follow us on Twitter and join our ML SubReddit.

Transform Your Business with AI

Explore how AI technology can enhance your operations:

- Identify processes that can be automated.

- Determine key performance indicators (KPIs) to measure the impact of your AI investment.

- Select customizable tools that align with your objectives.

- Start small, gather data, and gradually expand your AI initiatives.

Contact Us

If you need guidance on managing AI in your business, contact us at hello@itinai.ru or reach out via Telegram, X, or LinkedIn.