Optimizing Inference-Time Computation for Flow Models: Practical Business Solutions

Introduction

Recent developments in artificial intelligence have shifted focus from simply increasing model size and training data to making better use of computation at inference time. Spending extra compute during generation can improve output quality without retraining the model. For businesses, this can mean better resource allocation, higher efficiency, and more satisfied users.

Understanding Inference-Time Scaling

What is Inference-Time Scaling?

Inference-time scaling refers to techniques that spend additional computation during inference, for example by generating several candidate outputs and keeping the best-scoring one, to improve output quality without changing the model's weights. It applies to models used in language processing as well as image generation.
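The simplest form of inference-time scaling is best-of-N sampling: draw N candidates from an unchanged model, score each with a reward model, and keep the best. The sketch below assumes hypothetical `toy_generate` and `toy_reward` functions as stand-ins for a real generator and reward model; it illustrates the general idea only, not the flow-model method discussed later in this article.

```python
import random

def best_of_n(generate, reward, n, rng):
    """Draw n candidates and return the one with the highest reward."""
    # More inference compute means a larger n; the model itself is unchanged.
    candidates = [generate(rng) for _ in range(n)]
    best = max(candidates, key=reward)
    return best, reward(best)

# Hypothetical stand-ins for a generative model and a reward model.
def toy_generate(rng):
    return rng.gauss(0.0, 1.0)

def toy_reward(x):
    # Prefers samples near 1.5, a made-up "user preference".
    return -(x - 1.5) ** 2

for n in (1, 4, 64):
    sample, score = best_of_n(toy_generate, toy_reward, n, random.Random(0))
    print(n, round(sample, 3), round(score, 3))
```

Because every run reuses the same seed, a larger N sees a superset of a smaller N's candidates, so the best score can only stay the same or improve as N grows. The price is N generations and N reward evaluations per output.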

Case Studies and Applications

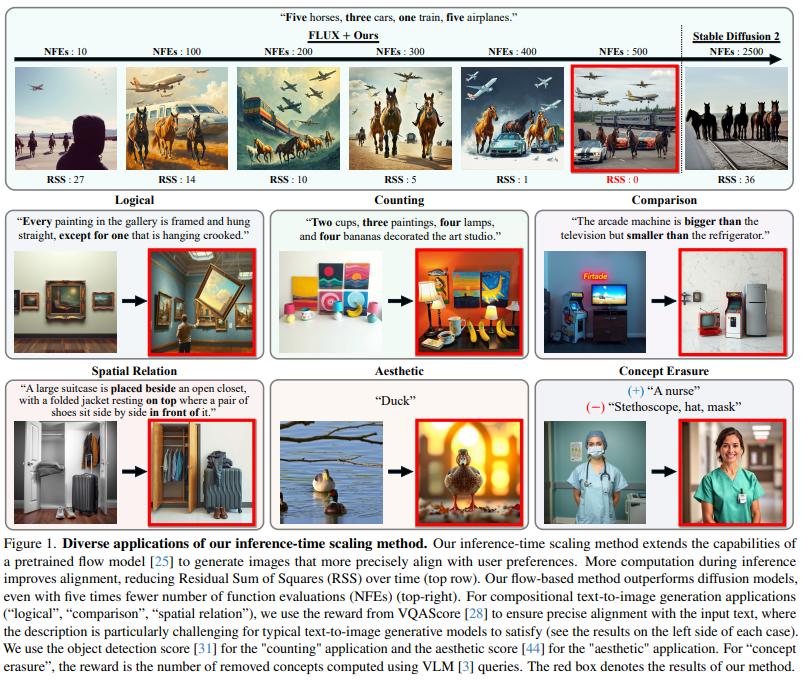

Large language models such as OpenAI’s GPT models and DeepSeek have shown substantial gains from this kind of scaling. In text-to-image generation, standard sampling often fails to capture intricate relationships between the objects described in a prompt, which leads to poor results. Inference-time scaling can steer generation toward outputs that align more closely with the user's prompt and preferences.

Categories of Reward-Alignment Techniques

1. Fine-Tuning Approaches

Fine-tuning methods adjust the model's weights to align it with a specific task or reward, so every new objective requires retraining. While effective, this limits scalability, which makes fine-tuning a less attractive option for organizations that need flexible AI solutions.

2. Particle-Sampling Techniques

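Particle-sampling methods such as SVDD and CoDe take a more dynamic approach: during generation they propose multiple candidate samples (particles) and iteratively keep the high-reward ones, with no retraining required. This can substantially improve output quality and is particularly useful for tasks like text-to-image generation. Because the candidates differ only through the randomness of the sampling process, these methods require a stochastic sampler.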
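A minimal sketch of the select-as-you-go idea, assuming a hypothetical one-dimensional `toy_step` in place of a stochastic denoising step and a `toy_reward` that scores intermediate states directly. Real methods differ in the details (SVDD, for instance, estimates the value of partially generated samples, and CoDe selects over blocks of steps); this greedy per-step version only illustrates the shared principle.

```python
import random

def particle_sampling(x0, step_fn, reward_fn, n_steps, n_particles, rng):
    """Greedy particle sampling: branch at every step, keep the best branch."""
    x = x0
    for k in range(n_steps):
        # Branch: propose several next states from the same current state.
        # This only helps if step_fn is stochastic; a deterministic step
        # would return n_particles identical copies.
        candidates = [step_fn(x, k, rng) for _ in range(n_particles)]
        # Select: keep the candidate the reward model scores highest.
        x = max(candidates, key=reward_fn)
    return x

TARGET = 3.0  # hypothetical property the reward model prefers

def toy_step(x, k, rng):
    # Stand-in for one stochastic generation step (k is unused here).
    return x + rng.gauss(0.0, 0.3)

def toy_reward(x):
    return -abs(x - TARGET)

def avg_error(n_particles, trials=200):
    # Average distance from the preferred value over many seeded runs.
    errs = []
    for seed in range(trials):
        x = particle_sampling(0.0, toy_step, toy_reward, 10, n_particles, random.Random(seed))
        errs.append(abs(x - TARGET))
    return sum(errs) / trials

print(avg_error(1), avg_error(8))
```

Setting `n_particles=1` reduces to ordinary sampling, so comparing `avg_error(1)` with `avg_error(8)` shows what the extra compute buys on this toy problem.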

Innovations in Flow Model Sampling

Overcoming Limitations of Deterministic Processes

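Researchers from KAIST have developed a novel inference-time scaling method designed specifically for flow models. Flow models generate samples by solving a deterministic ordinary differential equation (ODE): once the initial noise is fixed, the output is fixed too, leaving no randomness for particle sampling to exploit. The new method addresses this limitation.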
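The limitation, and the general remedy of converting the ODE into an SDE with matching marginals, can be checked on a toy problem where everything is known in closed form. This sketch assumes 1-D Gaussian data and the linear interpolant, and relies on the standard identity that adding g^2 / 2 times the score to the drift, together with noise of scale g, leaves the marginal distributions unchanged. The function names and the constant noise scale `g` are illustrative choices, not the KAIST authors' implementation.

```python
import numpy as np

# Toy assumption: 1-D data ~ N(MU, S^2), noise ~ N(0, 1), and the linear
# interpolant x_t = t * data + (1 - t) * noise (t = 0 noise, t = 1 data).
# Its marginals are N(m_t, v_t) in closed form, so the exact velocity and
# score stand in for a trained flow model.
MU, S = 2.0, 0.5

def mean_var(t):
    return t * MU, t**2 * S**2 + (1 - t) ** 2

def velocity(x, t):
    # ODE drift that keeps x_t distributed as N(m_t, v_t).
    m, v = mean_var(t)
    dv = 2 * t * S**2 - 2 * (1 - t)  # d v_t / dt
    return MU + dv / (2 * v) * (x - m)

def score(x, t):
    # d/dx log N(x; m_t, v_t)
    m, v = mean_var(t)
    return -(x - m) / v

def sample_ode(eps, n_steps=1000):
    # Euler integration: the output is a fixed function of the noise eps.
    x, dt = np.array(eps, dtype=float), 1.0 / n_steps
    for i in range(n_steps):
        x = x + velocity(x, i * dt) * dt
    return x

def sample_sde(eps, rng, g=0.5, n_steps=1000):
    # Euler-Maruyama for dx = [u + (g^2 / 2) * score] dt + g dW.
    # The extra score term offsets the injected noise in the Fokker-Planck
    # equation, so the marginals match those of the ODE.
    x, dt = np.array(eps, dtype=float), 1.0 / n_steps
    for i in range(n_steps):
        t = i * dt
        drift = velocity(x, t) + 0.5 * g**2 * score(x, t)
        x = x + drift * dt + g * np.sqrt(dt) * rng.standard_normal(x.shape)
    return x

eps = np.random.default_rng(0).standard_normal(20_000)
x_ode = sample_ode(eps)
x_sde = sample_sde(eps, np.random.default_rng(1))
print(x_ode.mean(), x_ode.std())  # both pairs should be close to MU and S
print(x_sde.mean(), x_sde.std())
```

Both samplers should land near the data distribution N(2, 0.5^2), but only the SDE produces different outputs from the same starting noise, which is exactly the branching that particle sampling needs.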

Key Innovations Introduced

- SDE-Based Generation: Converts the deterministic ODE sampler into a stochastic differential equation (SDE) with the same marginal distributions, enabling stochastic sampling and, with it, particle sampling.

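- VP Interpolant Conversion: Replaces the linear interpolant typically used by flow models with a variance-preserving (VP) interpolant, which increases the diversity of generated samples and widens the search for outputs that match the desired outcome.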

- Rollover Budget Forcing (RBF): Adaptively allocates the compute budget across timesteps; budget left unused at one step rolls over to later steps, so computation is spent where it is most needed.
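The rollover idea can be sketched as follows. This is a hedged reading of the strategy, not the authors' code: the total budget of reward evaluations is split evenly across steps; at each step, candidates are drawn one at a time until one beats the current reward or the step's quota runs out, and any unused evaluations carry over to the next step. `rollover_budget_sampling`, `toy_step`, and `toy_reward` are hypothetical names, and the reward of the starting state is not counted against the budget.

```python
import random

def rollover_budget_sampling(x0, step_fn, reward_fn, n_steps, total_budget, rng):
    """Hypothetical sketch of rollover budget allocation across steps."""
    if total_budget < n_steps:
        raise ValueError("need at least one reward evaluation per step")
    per_step = total_budget // n_steps
    carry = 0  # unused evaluations inherited from earlier steps
    x, r_x = x0, reward_fn(x0)
    used_log = []
    for k in range(n_steps):
        quota = per_step + carry
        best_x, best_r, used = None, float("-inf"), 0
        while used < quota:
            cand = step_fn(x, k, rng)
            r = reward_fn(cand)
            used += 1
            if r > best_r:
                best_x, best_r = cand, r
            if r > r_x:
                break  # improvement found: stop early and save the rest
        carry = quota - used  # roll leftover budget into the next step
        x, r_x = best_x, best_r
        used_log.append(used)
    return x, used_log

TARGET = 3.0  # hypothetical property the reward model prefers

def toy_step(x, k, rng):
    # Stand-in for one stochastic generation step (k is unused here).
    return x + rng.gauss(0.0, 0.3)

def toy_reward(x):
    return -abs(x - TARGET)

x, used = rollover_budget_sampling(
    0.0, toy_step, toy_reward, n_steps=10, total_budget=50, rng=random.Random(0)
)
print(round(x, 3), used, sum(used))
```

Early steps, where improvements are easy to find, typically finish after a few draws, leaving extra budget for later steps where improving on an already good sample is harder. The total never exceeds the budget, because each step can only spend its own share plus what earlier steps left unused.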

Experimental Findings

The reported experiments show that these methods improve the alignment of generated outputs with user prompts, as measured by metrics such as VQAScore and RSS, while making efficient use of inference-time compute. The results suggest that organizations adopting these techniques can produce high-quality images and videos without sacrificing performance.

Steps Forward for Businesses

How to Implement AI Solutions

For organizations looking to integrate AI effectively, consider the following steps:

- Identify Automation Opportunities: Look for processes that can be streamlined or automated to maximize efficiency.

- Define Key Performance Indicators (KPIs): Establish metrics that measure the impact of your AI initiatives.

- Select Appropriate Tools: Choose AI solutions that meet your business needs while allowing for customization.

- Start Small: Initiate a pilot project, gather and analyze data, and scale up gradually based on the findings.

Conclusion

Advances in inference-time scaling for flow models give businesses a strategic advantage. By combining techniques such as stochastic sampling and adaptive compute allocation, organizations can get higher-quality outputs from existing models without retraining them. As AI continues to evolve, leveraging these innovations will help organizations stay competitive.

For further assistance in managing AI solutions in your business, reach out to us at hello@itinai.ru or connect with us on Telegram, X, and LinkedIn.