Efficient and Robust Controllable Generation: ControlNeXt Revolutionizes Image and Video Creation

The research paper titled “ControlNeXt: Powerful and Efficient Control for Image and Video Generation” addresses a significant challenge in generative models, particularly in the context of image and video generation. As diffusion models have gained prominence for their ability to produce high-quality outputs, the need for fine-grained control over these generated results has become increasingly important.

Challenges Addressed

Traditional methods, such as ControlNet and Adapters, have attempted to enhance controllability by integrating additional architectures. However, these approaches often substantially increase computational demands, particularly in video generation, where running every frame through the added architecture can double GPU memory consumption. The paper highlights two limitations of existing methods, high resource requirements and weak control, and introduces ControlNeXt as a more efficient and robust solution for controllable visual generation.

Solution Highlights

Existing architectures typically rely on parallel branches or adapters to incorporate control information, which can significantly inflate the model’s complexity and training requirements. In contrast, the proposed ControlNeXt method aims to streamline this process by replacing heavy additional branches with a more straightforward, efficient architecture. This design minimizes the computational burden while maintaining the ability to integrate with other low-rank adaptation (LoRA) weights, allowing for style alterations without necessitating extensive retraining.
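To make the LoRA compatibility concrete, here is a minimal sketch of how a low-rank update is merged into a frozen base weight. It shows the standard LoRA formulation (W' = W + scale * B A), not anything specific to ControlNeXt; the function names `matmul` and `merge_lora` and the tiny matrices are hypothetical and chosen only for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(weight, lora_b, lora_a, scale=1.0):
    """Return weight + scale * (lora_b @ lora_a) without modifying the inputs.

    weight: d_out x d_in base matrix (frozen during LoRA training)
    lora_b: d_out x r matrix, lora_a: r x d_in matrix, with rank r much smaller than d_out, d_in
    """
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Illustrative values only: a 2x2 base weight with a rank-1 update.
base = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]   # d_out x r
lora_a = [[0.5, 0.5]]     # r x d_in

merged = merge_lora(base, lora_b, lora_a)
print(merged)
```

Because the update touches only the base weights, a separately trained control module can in principle stay unchanged when a style LoRA is swapped in, which is the property the paragraph above describes.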

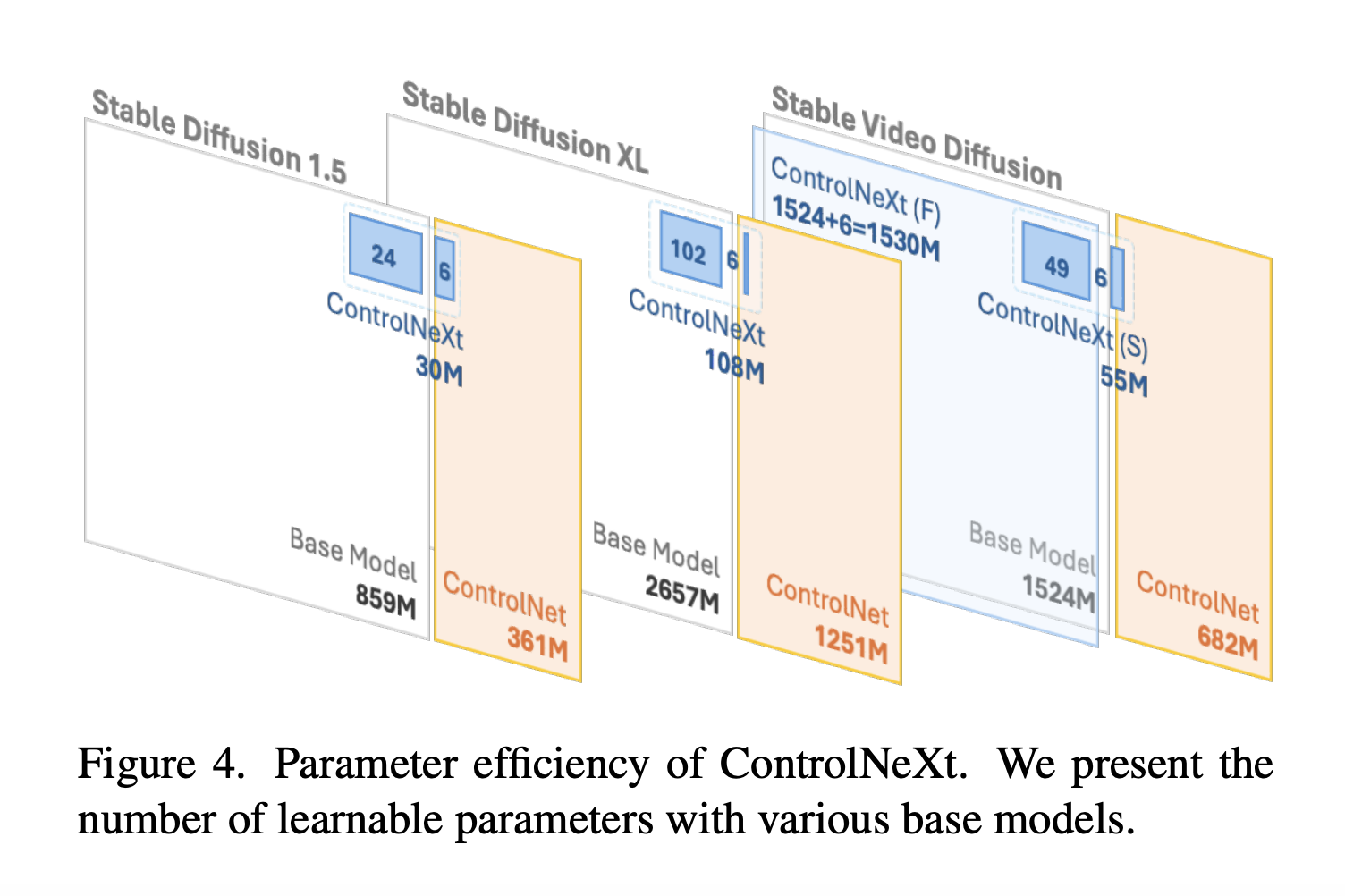

Delving deeper into the proposed method, ControlNeXt introduces an architecture that reduces the number of learnable parameters by up to 90% compared with its predecessors. This is achieved by using a lightweight convolutional network to extract conditional control features rather than relying on a parallel control branch.
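A rough way to see where the parameter saving comes from is to count convolution weights for a small feature extractor versus a branch that mirrors a wide encoder. The sketch below is a toy calculation: the channel widths are hypothetical, not taken from the paper, and real control branches also contain attention and normalization layers that are ignored here.

```python
def conv2d_params(in_ch, out_ch, kernel, bias=True):
    """Number of learnable parameters in one 2D convolution layer."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def stack_params(channels, kernel=3):
    """Total parameters for a chain of conv layers with the given channel widths."""
    return sum(
        conv2d_params(c_in, c_out, kernel)
        for c_in, c_out in zip(channels, channels[1:])
    )


# Hypothetical widths, chosen only for illustration (not the paper's values).
light_extractor = stack_params([3, 64, 128, 320])
# Stand-in for a parallel branch that copies a deeper, wider encoder.
parallel_branch = stack_params([3, 320, 320, 640, 640, 1280, 1280, 1280])

reduction = 1 - light_extractor / parallel_branch
print(f"lightweight extractor: {light_extractor:,} parameters")
print(f"parallel branch:       {parallel_branch:,} parameters")
print(f"relative reduction:    {reduction:.1%}")
```

The exact ratio depends entirely on the assumed widths; the point is only that a few narrow convolutions cost far fewer parameters than a duplicated encoder.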

The performance of ControlNeXt has been evaluated in a series of experiments involving different base models for image and video generation. The method retains the original model's architecture and introduces only a minimal number of auxiliary components, so it can be integrated as a plug-and-play module with existing systems.

Practical Implementation and Value

ControlNeXt is a notable advance in controllable generative models. It offers a powerful and efficient method for image and video generation that addresses two critical issues in existing models: high computational demands and weak control.
