Advancements in Language Modeling

Recent developments in language modeling have improved natural language processing, enabling models to produce coherent, contextually relevant text for a wide range of uses. Autoregressive (AR) models, which generate text one token at a time from left to right with each token conditioned on all of the preceding ones, are the standard choice for tasks such as coding and reasoning. However, this sequential design has two costs: errors made early in a sequence carry over into everything generated after them, and generation cannot be parallelized across positions, which limits speed.
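The following minimal sketch makes the left-to-right process concrete. The bigram table, `next_token_dist`, and `generate_autoregressive` are hypothetical toy names for illustration. A real language model uses a neural network that conditions on the entire prefix, not a lookup on the last token.

```python
import random
from typing import Dict, List

# Hypothetical toy "model": maps the previous token to a next-token distribution.
# A real autoregressive LM conditions on the whole prefix, not just the last token.
BIGRAMS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 1.0},
    "dog": {"ran": 1.0},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}

def next_token_dist(prefix: List[str]) -> Dict[str, float]:
    """Return p(next token | prefix); this toy only looks at the last token."""
    return BIGRAMS[prefix[-1]]

def generate_autoregressive(max_len: int = 10, seed: int = 0) -> List[str]:
    rng = random.Random(seed)
    tokens = ["<s>"]
    # One model call per generated token: step t cannot start before step t-1 finishes.
    for _ in range(max_len):
        dist = next_token_dist(tokens)
        candidates, weights = zip(*dist.items())
        tok = rng.choices(candidates, weights=weights, k=1)[0]
        if tok == "</s>":
            break
        tokens.append(tok)
    return tokens[1:]  # drop the start symbol

print(generate_autoregressive(seed=0))
```

Because the loop makes roughly one sequential model call per token, a 1,000-token output needs about 1,000 calls in a row. That sequential bottleneck is what parallel generation methods aim to remove.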

Challenges with Autoregressive Models

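One major issue with autoregressive models is that small errors can build up over time. Once a model emits an unlikely or wrong token, every later token is conditioned on it, so the generated text can drift significantly from what was intended. This error accumulation reduces accuracy. In addition, each token requires its own forward pass through the model, so generation time grows with sequence length. This makes these models less suitable for real-time applications where both speed and reliability are crucial. Researchers are therefore exploring parallel text generation methods that improve speed while keeping errors in check.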
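A back-of-the-envelope calculation shows why small per-token errors matter over long outputs. This sketch assumes each token is wrong independently with probability `eps`. That is a simplification: in a real autoregressive model, an early mistake also changes the context for later tokens, so errors are correlated. `p_error_free` is a hypothetical helper name.

```python
def p_error_free(eps: float, n: int) -> float:
    """Probability that none of n tokens is wrong, assuming an independent
    per-token error rate eps (a simplifying assumption for illustration)."""
    if not 0.0 <= eps <= 1.0:
        raise ValueError("eps must be a probability")
    return (1.0 - eps) ** n

for n in (10, 100, 1000):
    print(n, round(p_error_free(0.01, n), 4))
```

With a 1% per-token error rate, the chance of an error-free sequence is about 0.90 at 10 tokens and about 0.37 at 100 tokens. At 1,000 tokens it is effectively zero.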

Emerging Solutions: Discrete Diffusion Models

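Discrete diffusion models offer a promising route to parallel text generation. Instead of producing one token per step, they generate many tokens at once, which can speed up generation. They begin with a fully masked sequence and gradually unmask tokens over a series of denoising steps, without following a fixed left-to-right order, which allows more flexibility. However, they often fail to capture the dependencies between tokens that autoregressive models handle naturally. Within a single step, each masked position is typically predicted independently of the others, so tokens revealed together can be inconsistent with one another.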
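The following toy example illustrates why unmasking several tokens in the same step can break coherence. It rests on two assumptions. The first is a hypothetical dataset containing only the phrases "New York" and "San Diego", with equal probability. The second is a denoiser that predicts each masked position from its exact marginal, independently of the other position. `marginals` and `factorized_joint` are illustrative names, not part of any diffusion-model library.

```python
from itertools import product

# Hypothetical toy data: the only valid two-token phrases, each with probability 0.5.
DATA = {("New", "York"): 0.5, ("San", "Diego"): 0.5}

def marginals(data):
    """Per-position marginal distributions: what a factorized denoiser predicts
    for each masked position when both positions are unmasked in one step."""
    first, second = {}, {}
    for (a, b), p in data.items():
        first[a] = first.get(a, 0.0) + p
        second[b] = second.get(b, 0.0) + p
    return first, second

def factorized_joint(data):
    """Joint distribution when both positions are sampled independently in parallel."""
    first, second = marginals(data)
    return {(a, b): pa * pb
            for (a, pa), (b, pb) in product(first.items(), second.items())}

joint = factorized_joint(DATA)
# Probability mass assigned to pairs that never occur in the data.
p_invalid = sum(p for pair, p in joint.items() if pair not in DATA)
print(joint)
print(p_invalid)
```

Even with perfect per-position predictions, half of the probability mass lands on phrases that never occur in the data ("New Diego" and "San York"). With an exact denoiser, unmasking one token per step and conditioning on it would avoid this. That approach, however, gives up the parallelism that makes diffusion attractive.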

Introducing EDLM: Energy-based Diffusion Language Model

Researchers from Stanford University and NVIDIA have developed the Energy-based Diffusion Language Model (EDLM), which combines energy-based modeling with discrete diffusion to improve parallel text generation. At each diffusion step, the model applies an energy function over the full sequence to capture dependencies between tokens. This improves text quality while keeping the benefits of parallel generation.

How EDLM Works

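The EDLM framework introduces an energy function that scores entire candidate sequences, capturing relationships among tokens that the diffusion model's independent per-token predictions miss. During generation, this function corrects the diffusion model's proposals at each denoising step. Its parameters can be obtained from a pretrained autoregressive model or by fine-tuning a bidirectional transformer, which avoids the costly training that energy-based models usually require. Sampling stays efficient because EDLM uses a parallel importance-sampling procedure. By modeling token dependencies explicitly, EDLM reduces errors and improves accuracy compared with other diffusion models.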
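Below is a minimal sketch of how a sequence-level energy can correct a factorized parallel proposal, using self-normalized importance resampling. It draws several candidates from independent per-token predictions, weights each by exp(-E(x)), and keeps one in proportion to its weight. This targets a distribution proportional to q(x) * exp(-E(x)), where q is the factorized proposal. Everything here is a hypothetical toy: the two-token vocabulary, the hand-written `energy` function, and `k=8` candidates. In EDLM the energy is a learned model applied at each denoising step. This sketch is a simplified stand-in for the paper's sampler, not its implementation.

```python
import math
import random

# Hypothetical toy setup (not EDLM's actual model): two positions, and a factorized
# proposal that samples each position independently from its marginal.
FIRST = {"New": 0.5, "San": 0.5}
SECOND = {"York": 0.5, "Diego": 0.5}
VALID = {("New", "York"), ("San", "Diego")}

def energy(seq):
    """Hypothetical sequence-level energy: low for coherent pairs, high otherwise.
    In EDLM this role is played by a learned model, not a hand-written rule."""
    return 0.0 if seq in VALID else 5.0

def sample_proposal(rng):
    """Sample both positions independently, as a factorized denoiser would in one step."""
    a = rng.choices(list(FIRST), weights=list(FIRST.values()))[0]
    b = rng.choices(list(SECOND), weights=list(SECOND.values()))[0]
    return (a, b)

def importance_sample(rng, k=8):
    """Draw k proposal candidates, weight each by exp(-energy), and resample one.
    Self-normalized importance resampling toward q(x) * exp(-E(x))."""
    candidates = [sample_proposal(rng) for _ in range(k)]
    weights = [math.exp(-energy(c)) for c in candidates]
    return rng.choices(candidates, weights=weights)[0]

rng = random.Random(0)
trials = 2000
plain = sum(sample_proposal(rng) in VALID for _ in range(trials)) / trials
corrected = sum(importance_sample(rng) in VALID for _ in range(trials)) / trials
print(f"valid without energy: {plain:.2f}, with energy reweighting: {corrected:.2f}")
```

Without reweighting, about half of the samples are incoherent pairs. With reweighting, nearly all samples are valid. The k candidates are independent, so they can be drawn and scored in parallel, at the cost of k energy evaluations per step.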

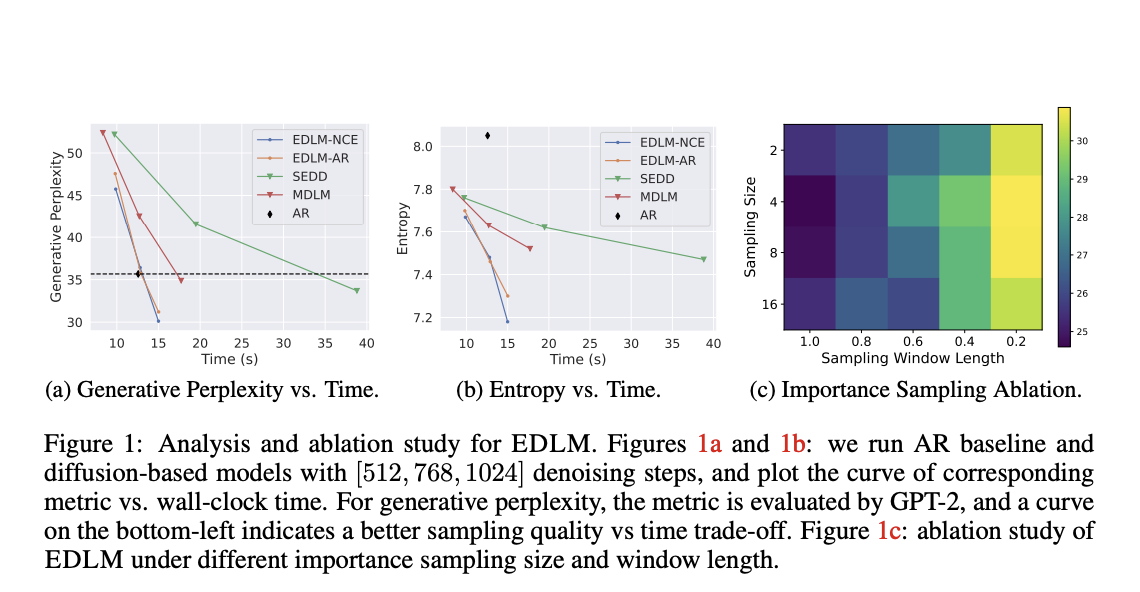

Performance Benefits of EDLM

EDLM improves both the speed and the quality of text generation. In the reported experiments, it achieved up to a 49% reduction in generative perplexity, a measure of sample quality where lower is better, relative to diffusion-model baselines. It also delivers a 1.3x sampling speedup over existing diffusion models without a loss in quality, while approaching the perplexity of autoregressive models. On the Text8 dataset, for example, EDLM achieved the lowest bits-per-character (BPC) score, a likelihood-based measure on which lower is better.
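For readers unfamiliar with these metrics, the sketch below shows how perplexity and bits per character are usually computed from model log-probabilities. Lower is better for both. Generative perplexity applies the perplexity formula to model-generated text scored by a separate, typically larger, pretrained language model. The function names and the uniform-prediction inputs are illustrative assumptions, not values from the EDLM experiments. As a point of arithmetic, a 49% reduction means the new perplexity is 0.51 times the baseline.

```python
import math
from typing import Sequence

def perplexity(token_logprobs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood (natural log) per token.
    For generative perplexity, the log-probs come from scoring generated
    text with a separate reference language model."""
    nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(nll)

def bits_per_character(char_logprobs: Sequence[float]) -> float:
    """Mean negative log-likelihood per character, converted from nats to bits."""
    nll_nats = -sum(char_logprobs) / len(char_logprobs)
    return nll_nats / math.log(2)

# Sanity checks with uniform predictions (illustrative inputs, not results from the paper):
print(perplexity([math.log(1 / 4)] * 10))          # uniform over 4 tokens: about 4
print(bits_per_character([math.log(1 / 27)] * 5))  # uniform over 27 chars: about 4.75
```

Text8 uses a 27-symbol alphabet (the 26 lowercase letters plus space). A model that guesses uniformly therefore scores log2(27), about 4.75 BPC, and trained models score far lower.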

Conclusion

EDLM addresses two weaknesses of current text generators: the error propagation of sequential autoregressive decoding and the missing token dependencies of parallel diffusion decoding. By combining energy-based corrections with parallel generation, it improves accuracy while keeping a speed advantage. This work highlights the potential of energy-based frameworks for advancing generative text models.

For further insights, check out the Paper.
