Practical Solutions for Energy-Efficient Large Language Model (LLM) Inference

Enhancing Energy Efficiency

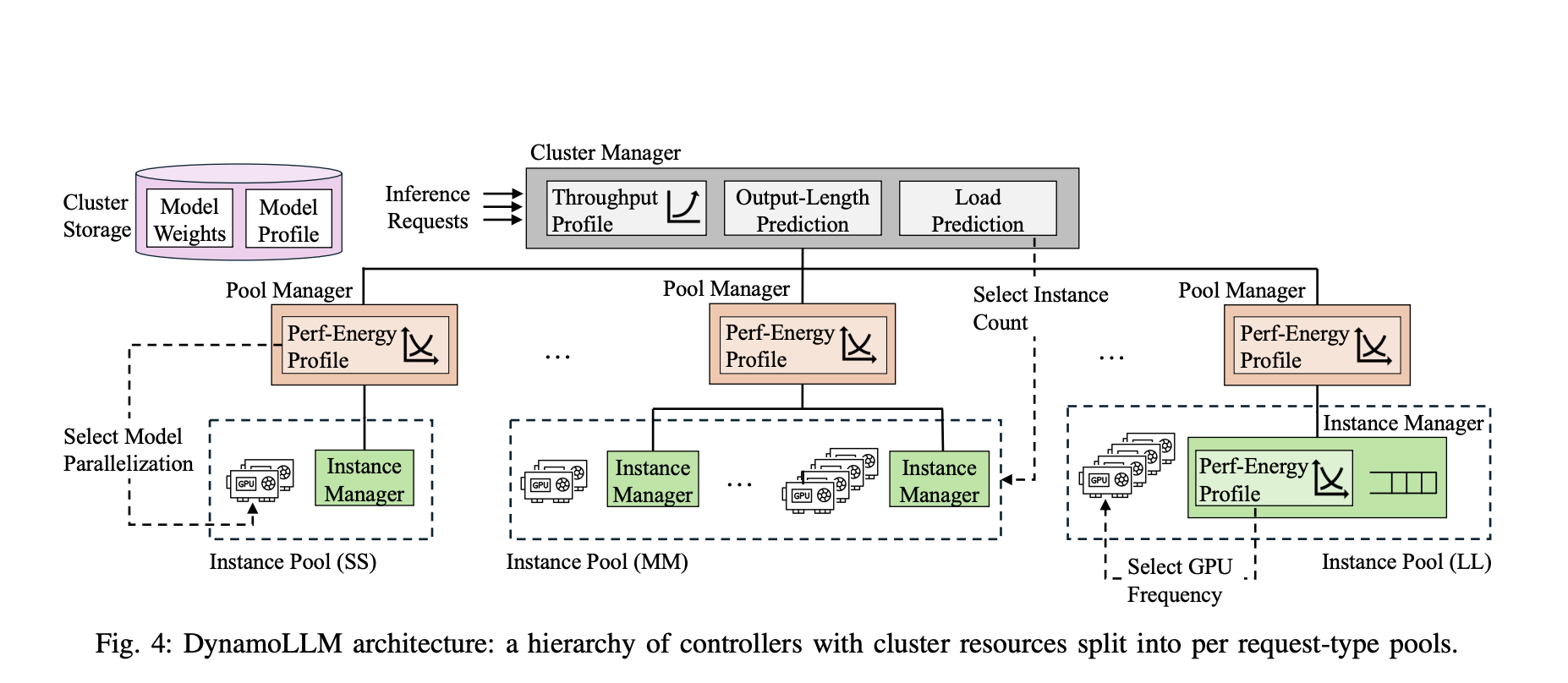

Large Language Models (LLMs) rely on powerful GPUs to serve requests quickly, and those GPUs consume a great deal of energy. DynamoLLM addresses this by recognizing that inference workloads have distinct processing requirements and adjusting system configurations in real time to match them.

Dynamic Energy Management

DynamoLLM automatically reconfigures inference clusters at run time to reduce energy use while still meeting performance requirements. It monitors the system's performance and adjusts configurations as needed, looking for the best trade-off between computational power and energy efficiency.
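To make the trade-off concrete, here is a minimal Python sketch of the general idea: among a set of candidate configurations, choose the one with the lowest estimated energy that still meets a latency target. This is not DynamoLLM's actual algorithm. The `Config` class, the `pick_config` function, the configuration names, and all numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Config:
    """A hypothetical cluster configuration with estimated characteristics."""
    name: str
    latency_ms: float        # estimated request latency under this configuration
    energy_j_per_req: float  # estimated energy per request, in joules


def pick_config(candidates: Sequence[Config], slo_ms: float) -> Optional[Config]:
    """Return the lowest-energy configuration that meets the latency SLO.

    Returns None when no candidate satisfies the SLO.
    """
    feasible = [c for c in candidates if c.latency_ms <= slo_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda c: c.energy_j_per_req)


# Illustrative numbers only; they are not measurements from DynamoLLM.
CANDIDATES = [
    Config("high-performance", latency_ms=120.0, energy_j_per_req=900.0),
    Config("balanced", latency_ms=200.0, energy_j_per_req=600.0),
    Config("low-power", latency_ms=350.0, energy_j_per_req=400.0),
]

chosen = pick_config(CANDIDATES, slo_ms=250.0)
print(chosen.name if chosen else "no configuration meets the SLO")
```

With a 250 ms target, the sketch skips the low-power option because it would miss the target, and prefers the balanced option over the high-performance one because it uses less energy. A real system would re-run a selection like this as load and request characteristics change.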

Performance and Environmental Impact

DynamoLLM can cut the energy used by LLM inference clusters by up to 53%, while also reducing costs to the customer by 61% and operational carbon emissions by 38%. It achieves these savings while still meeting the required latency Service Level Objectives (SLOs).
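As a rough illustration of what an "up to 53%" energy saving means in absolute terms, the short Python sketch below applies that percentage to a baseline. The baseline figure and the `after_reduction` helper are hypothetical. Because the reported figure is an upper bound, the result is a best case rather than an expected value.

```python
def after_reduction(baseline: float, reduction_pct: float) -> float:
    """Return the value that remains after reducing baseline by reduction_pct percent."""
    if not 0.0 <= reduction_pct <= 100.0:
        raise ValueError("reduction_pct must be between 0 and 100")
    return baseline * (1.0 - reduction_pct / 100.0)


# Hypothetical baseline: energy used by an inference cluster over some period.
BASELINE_ENERGY_KWH = 1000.0

best_case_kwh = after_reduction(BASELINE_ENERGY_KWH, 53.0)
print(f"Best-case energy use: {best_case_kwh:.0f} kWh of {BASELINE_ENERGY_KWH:.0f} kWh")
```

For this assumed 1,000 kWh baseline, the best case works out to roughly 470 kWh.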

Value of DynamoLLM

DynamoLLM represents a significant advancement in improving the sustainability and economics of LLMs, addressing both financial and environmental concerns in the rapidly evolving field of Artificial Intelligence.

AI Solutions for Business Transformation

Approaches like DynamoLLM can strengthen your company's AI capabilities by keeping LLM inference both performant and energy efficient. More broadly, you can explore how AI might transform your business by identifying automation opportunities, defining KPIs, selecting suitable AI solutions, and implementing them gradually.
