Normalization Layers in Neural Networks

Normalization layers are a standard component of modern neural networks. They make optimization easier by stabilizing gradient flow, reducing sensitivity to weight initialization, and smoothing the loss landscape. Since batch normalization was introduced in 2015, many variants have been developed, and layer normalization (LN) has become particularly important in Transformer models. In practice, these layers accelerate convergence and improve model performance, especially in deeper networks.
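To make concrete what a normalization layer computes, here is a minimal pure-Python sketch of layer normalization applied to a single feature vector. The function name `layer_norm` and the scalar `gamma`/`beta` defaults are illustrative assumptions; real implementations typically learn one scale and shift per feature and operate on batched tensors.

```python
import math

def layer_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature vector to roughly zero mean and unit variance.

    gamma and beta are scalars here for simplicity (an assumption of this
    sketch); in practice they are learnable per-feature parameters.
    """
    n = len(x)
    mean = sum(x) / n                               # statistic 1: mean over features
    var = sum((v - mean) ** 2 for v in x) / n       # statistic 2: biased variance
    inv_std = 1.0 / math.sqrt(var + eps)            # eps avoids division by zero
    return [gamma * (v - mean) * inv_std + beta for v in x]

features = [2.0, 4.0, 6.0, 8.0]
normalized = layer_norm(features)
print(normalized)
```

Note that every output value depends on the mean and variance of the whole vector, so these statistics must be computed for each input before any element can be normalized.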

Exploring Alternatives to Normalization Layers

Although normalization layers have proven beneficial, researchers have also explored ways to train deep networks without them. Approaches include specialized weight initialization schemes, weight normalization, and adaptive gradient clipping. For Transformers, modifications have been proposed that reduce the reliance on normalization, suggesting that stable convergence can be achieved by other means.

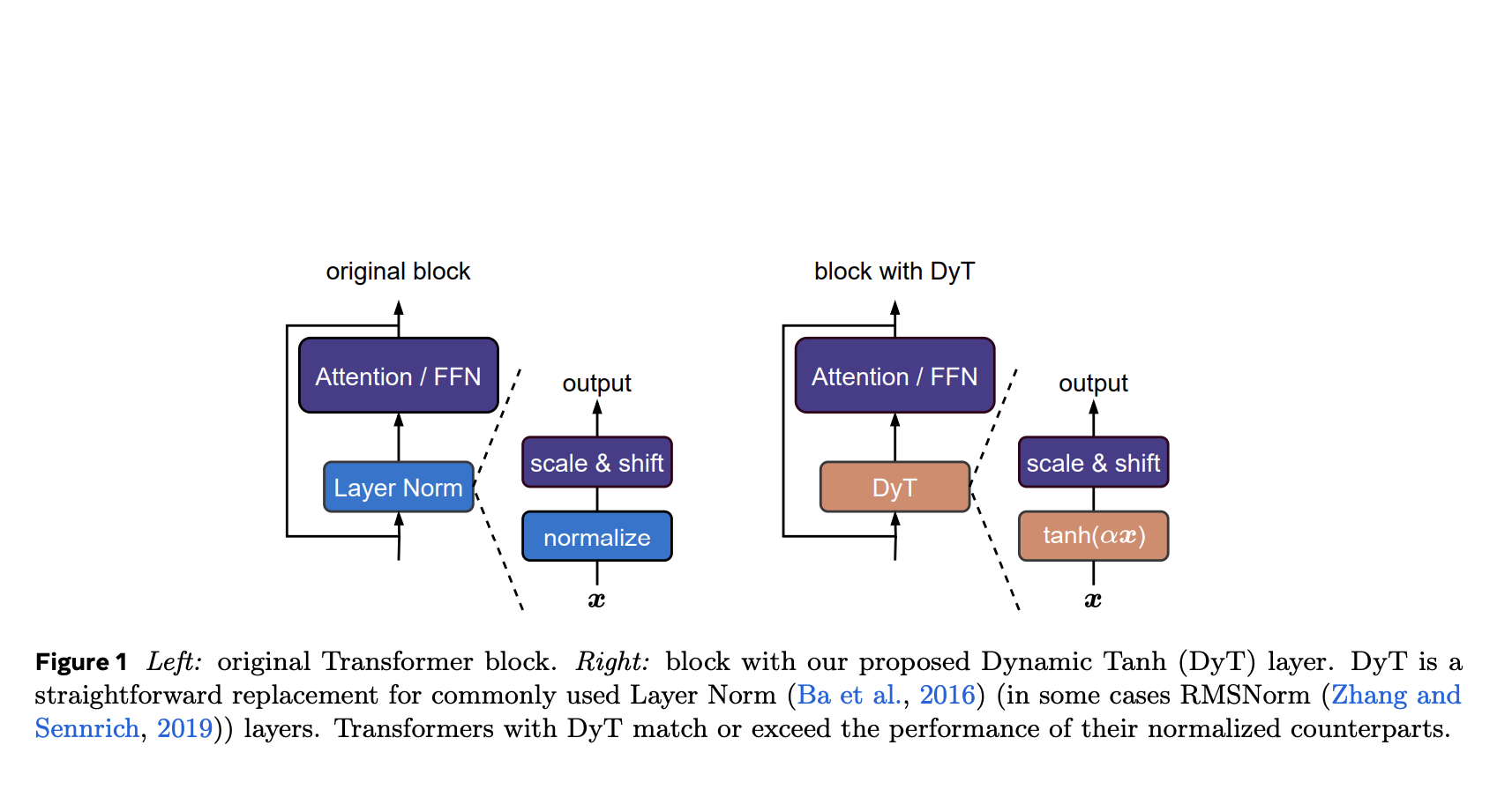

Introducing Dynamic Tanh (DyT)

Dynamic Tanh (DyT) has been proposed as an effective alternative to normalization layers in Transformers. DyT is an element-wise function: it scales each activation by a learnable factor and passes the result through tanh, which squashes extreme values into a bounded range. Because each element is processed independently, no activation statistics such as the mean or variance need to be computed. Empirical evaluations show that DyT maintains or improves performance across various tasks, usually without extensive hyperparameter tuning, and that it can make training and inference more efficient.
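The element-wise behavior described above can be sketched in a few lines of pure Python. This is a minimal illustration, not the authors' implementation: it assumes the form `gamma * tanh(alpha * x) + beta`, with a single scalar `alpha` and per-feature `gamma` and `beta`. The class name, the `alpha_init=0.5` default, and the fixed (untrained) parameter values are assumptions of this sketch; in a real model these parameters would be learned.

```python
import math

class DyT:
    """Illustrative Dynamic Tanh layer: gamma * tanh(alpha * x) + beta."""

    def __init__(self, num_features, alpha_init=0.5):
        self.alpha = alpha_init              # scalar scale (learnable in a real model)
        self.gamma = [1.0] * num_features    # per-feature scale, fixed at 1 in this sketch
        self.beta = [0.0] * num_features     # per-feature shift, fixed at 0 in this sketch

    def __call__(self, x):
        # Each element is transformed independently: no mean or variance is computed.
        return [
            g * math.tanh(self.alpha * v) + b
            for v, g, b in zip(x, self.gamma, self.beta)
        ]

dyt = DyT(num_features=4)
activations = [-100.0, -1.0, 0.5, 100.0]
outputs = dyt(activations)
print(outputs)
```

With `gamma` at 1 and `beta` at 0, the extreme inputs (-100 and 100) are squashed toward -1 and 1, while small inputs pass through almost linearly, scaled by `alpha`.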

Performance Evaluation of DyT

Researchers evaluated DyT on models such as ViT-B and wav2vec 2.0 and found that it matched or exceeded the performance of traditional normalization methods. On supervised vision tasks, DyT showed slight improvements; in self-supervised learning and other applications, its performance was comparable to existing methods. Efficiency tests indicated reduced computation time with DyT, which supports its potential as a competitive alternative.

Conclusion

The study concludes that modern neural networks, especially Transformers, can be trained effectively without normalization layers. DyT offers a lightweight alternative that simplifies training while maintaining or improving performance, often with no hyperparameter adjustments. The research also offers new insight into what normalization layers actually do and presents DyT as a promising replacement.
