Practical AI Solutions for Language Generation Challenges

Addressing Challenges in Fine-Tuning Large Pre-Trained Generative Transformers

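Large pre-trained generative transformers excel at natural language generation but are difficult to adapt to specific applications. Fine-tuning them on small datasets can cause overfitting, which erodes reasoning skills such as compositional generalization and commonsense reasoning. Existing methods tackle this in different ways. Prompt-tuning adapts a model efficiently by training a small set of prompt parameters while the model itself stays frozen. The NADO (Neurally-Decomposed Oracle) algorithm controls generation by steering a base model toward outputs that satisfy a sequence-level constraint.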
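
To make the control idea concrete, here is a minimal sketch of the token-level reweighting behind NADO-style methods, as we understand them. It assumes a sequence-level oracle C and an already-estimated probability R(prefix + token) that C will be satisfied if a given token is chosen next. The base model's next-token probabilities p are multiplied by R and renormalized, so q(token | prefix) is proportional to p(token | prefix) · R(prefix + token). The function name `nado_reweight`, the toy vocabulary, and all probabilities are hypothetical illustrations, not the authors' code.

```python
from typing import Dict


def nado_reweight(base_probs: Dict[str, float],
                  success_prob: Dict[str, float]) -> Dict[str, float]:
    """Reweight a next-token distribution toward satisfying a constraint.

    base_probs:   p(token | prefix) from the frozen base model (hypothetical values).
    success_prob: R(prefix + token), the estimated probability that the
                  sequence-level oracle is satisfied if `token` is chosen.
    Returns q(token | prefix), proportional to p * R and renormalized.
    """
    unnormalized = {tok: p * success_prob.get(tok, 0.0)
                    for tok, p in base_probs.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("no candidate token can satisfy the constraint")
    return {tok: w / total for tok, w in unnormalized.items()}


# Toy example: the oracle rewards a formal register ("Sie") over informal "du".
base = {"du": 0.6, "Sie": 0.3, "ihr": 0.1}
oracle = {"du": 0.1, "Sie": 0.9, "ihr": 0.2}
q = nado_reweight(base, oracle)
print(q)  # "Sie" becomes the most likely token after reweighting
```

Renormalizing is used here for robustness. When R is consistent across steps, dividing by R(prefix) gives the same distribution.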

Introducing DiNADO: Enhanced Parameterization of the NADO Algorithm

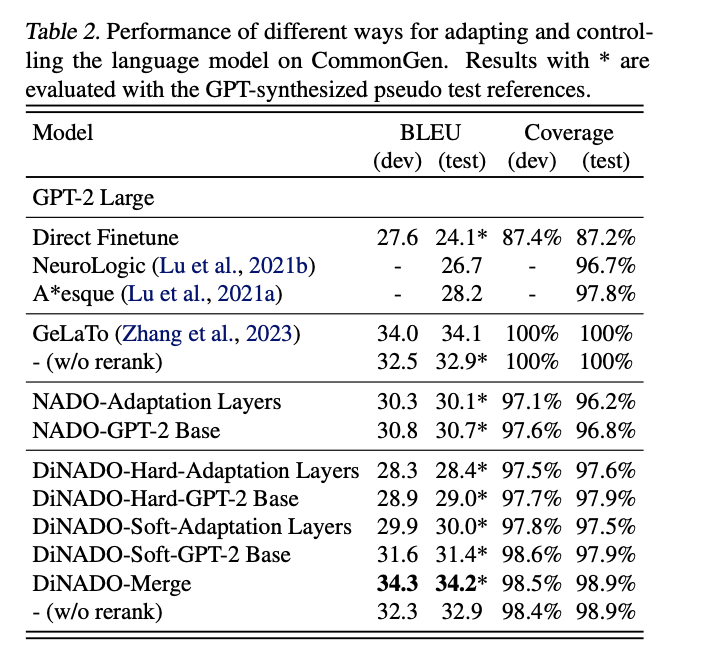

DiNADO, developed by researchers from UCLA, Amazon AGI, and Samsung Research America, improves on NADO in two ways. It improves convergence during fine-tuning, and it addresses the inefficient gradient estimation of the original algorithm. The authors evaluate it on two tasks: formal machine translation, which requires translating into a formal register, and lexically constrained generation, which requires the output to contain given words. On both tasks they report gains in task performance and controllability.
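
For lexically constrained generation, the sequence-level oracle can be as simple as "every required keyword appears in the output." The sketch below shows such an oracle. It also shows a naive Monte Carlo estimate of R(prefix), the probability that a continuation of the prefix will satisfy the oracle. As we understand it, NADO trains a neural model to predict this quantity from samples and does not sample at inference time. The estimator here only illustrates what that model approximates. All names (`lexical_oracle`, `estimate_success_prob`, `toy_sampler`) and the random two-word "language model" are hypothetical.

```python
import random
from typing import Callable, List, Sequence


def lexical_oracle(tokens: Sequence[str], keywords: Sequence[str]) -> bool:
    """Sequence-level oracle C(x, y): True iff every keyword appears in y."""
    present = set(tokens)
    return all(k in present for k in keywords)


def estimate_success_prob(prefix: List[str],
                          sample_continuation: Callable[[List[str]], List[str]],
                          keywords: Sequence[str],
                          num_samples: int = 1000) -> float:
    """Monte Carlo estimate of R(prefix) = Pr(C is satisfied | prefix)."""
    hits = 0
    for _ in range(num_samples):
        full = prefix + sample_continuation(prefix)
        hits += lexical_oracle(full, keywords)  # bool counts as 0 or 1
    return hits / num_samples


# Hypothetical toy "language model": appends two uniformly random words.
VOCAB = ["dog", "park", "runs", "ball", "the"]


def toy_sampler(prefix: List[str]) -> List[str]:
    return [random.choice(VOCAB) for _ in range(2)]


random.seed(0)
keywords = ["dog", "ball"]
# "ball" must still appear in two random draws: expected value 1 - 0.8**2 = 0.36.
print(estimate_success_prob(["the", "dog"], toy_sampler, keywords))
# Both keywords are already in the prefix, so every sample succeeds.
print(estimate_success_prob(["the", "ball", "dog"], toy_sampler, keywords))
```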

Advantages of DiNADO for AI Applications

Three properties make DiNADO a valuable enhancement for controllable language generation. It is compatible with parameter-efficient fine-tuning methods such as LoRA. It comes with a theoretical analysis of the flawed designs in vanilla NADO. It also shows better training dynamics. Together, these open the way to more efficient and effective text generation applications.
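
For readers unfamiliar with LoRA, the sketch below shows the standard LoRA idea on a single linear layer. The pretrained weight W stays frozen, and only a low-rank update B·A, scaled by alpha / rank, is trained. B is initialized to zeros, so the adapted layer initially behaves exactly like the original one. The article does not describe how DiNADO combines with LoRA, so this covers only the general technique. The class and helper names are hypothetical, and the code uses plain Python lists instead of a tensor library to stay self-contained.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen: never updated during fine-tuning
        # A: rank x d_in, small random init (trainable)
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        # B: d_out x rank, zero init so the update starts at exactly zero (trainable)
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W, rank=1, alpha=2.0)
print(layer.forward([1.0, 2.0, 3.0]))  # equals W @ x at initialization: [7.0, -1.0]
print(layer.trainable_params())        # 5, versus 6 entries in the frozen W
```

The savings are negligible in this toy. For a real d_out x d_in layer, however, the trainable count is rank · (d_in + d_out) instead of d_in · d_out. This gap is what makes LoRA attractive for adapting large models.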

Evolve Your Company with AI: Use DiNADO for Superior Convergence and Global Optima

AI can redefine the way businesses work. Identify automation opportunities, define KPIs, select AI solutions, and implement them gradually for impactful AI integration.