Understanding the Challenges of Long Contexts in Language Models

Language models are increasingly required to handle long contexts, but standard attention mechanisms scale poorly. Full attention compares every token with every earlier token, so its cost grows quadratically with sequence length, leading to high memory use and computational demands on long sequences. This creates challenges for applications such as multi-turn dialogue and complex reasoning. Sparse attention methods reduce the theoretical cost, but they often fail to deliver matching real-world speedups.
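
To make the scaling problem concrete, the short sketch below counts how many query-key pairs causal full attention has to score, and compares that with a scheme in which each query looks at no more than a fixed number of keys. The function names and the budget of 2,048 keys are illustrative assumptions, not figures from the NSA work.

```python
def full_attention_pairs(seq_len: int) -> int:
    """Number of query-key pairs scored by causal full attention."""
    # The query at position t (0-based) sees t + 1 keys: 1 + 2 + ... + n.
    return seq_len * (seq_len + 1) // 2


def budgeted_attention_pairs(seq_len: int, budget: int) -> int:
    """Pairs scored when each query attends to at most `budget` keys."""
    return sum(min(t + 1, budget) for t in range(seq_len))


if __name__ == "__main__":
    # The budget of 2,048 keys per query is a hypothetical value.
    for n in (1_024, 8_192, 65_536):
        full = full_attention_pairs(n)
        sparse = budgeted_attention_pairs(n, budget=2_048)
        print(f"{n:>6} tokens: full={full:,} budgeted={sparse:,} "
              f"ratio={full / sparse:.1f}x")
```

The full-attention count grows with the square of the sequence length, while the budgeted count grows roughly linearly once the sequence is longer than the budget. Fewer scored pairs do not automatically mean faster wall-clock time, which is the gap between theory and practice noted above.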

Rethinking Attention Mechanisms

Researchers are now focused on improving attention mechanisms to balance performance and efficiency. This is essential for developing models that can scale effectively.

Introducing NSA: A Solution for Long-Context Training

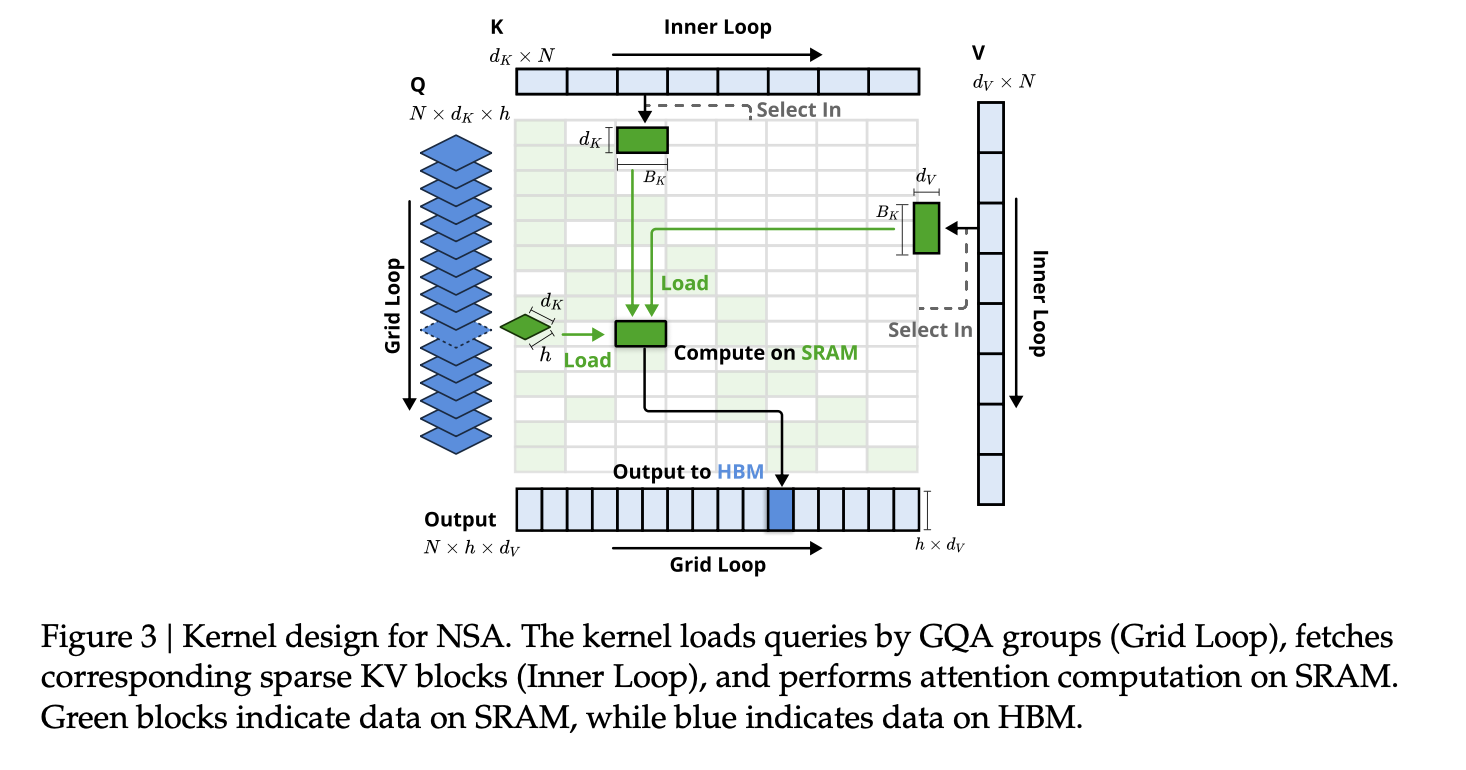

DeepSeek AI presents NSA (Native Sparse Attention), a sparse attention mechanism designed for fast training and inference on long contexts. NSA pairs algorithmic design with hardware-level optimizations to lower the computational cost of processing long sequences.

How NSA Works

NSA employs a three-part strategy:

- Compression: Groups of tokens are summarized into key representations.

- Selection: Only the most relevant tokens are kept based on importance scores.

- Sliding Window: Local context is preserved for better understanding.

This approach allows NSA to maintain both global and local dependencies while being efficient.
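
The three parts above can be sketched for a single query in plain Python. This is a minimal illustration under stated assumptions, not DeepSeek's implementation: mean pooling stands in for the learned compression, blocks are scored by the dot product between the query and each compressed key, and the three branch outputs are merged with a plain average because the article does not describe how they are combined. All function names and default sizes are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query over the given keys."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in keys])
    return [sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))]


def mean_pool(vectors: List[Vector]) -> Vector:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def sparse_attention_step(query: Vector, keys: List[Vector],
                          values: List[Vector], block_size: int = 4,
                          top_k: int = 2, window: int = 4
                          ) -> Tuple[Vector, List[int]]:
    """Three-branch output for one query.

    `keys` and `values` are assumed to hold only the positions the query
    is allowed to see (its own position and everything before it).
    """
    starts = range(0, len(keys), block_size)
    key_blocks = [keys[s:s + block_size] for s in starts]
    value_blocks = [values[s:s + block_size] for s in starts]

    # 1) Compression: one summary key/value per block (mean pooling here).
    comp_keys = [mean_pool(b) for b in key_blocks]
    comp_values = [mean_pool(b) for b in value_blocks]
    compressed_out = attend(query, comp_keys, comp_values)

    # 2) Selection: rank blocks by importance, keep the top_k, and attend
    #    to the tokens of those blocks at full resolution.
    scores = [dot(query, k) for k in comp_keys]
    chosen = sorted(sorted(range(len(scores)), key=lambda i: scores[i],
                           reverse=True)[:top_k])
    sel_keys = [k for i in chosen for k in key_blocks[i]]
    sel_values = [v for i in chosen for v in value_blocks[i]]
    selected_out = attend(query, sel_keys, sel_values)

    # 3) Sliding window: the most recent `window` tokens.
    window_out = attend(query, keys[-window:], values[-window:])

    # Merge the branches with a plain average (an assumption).
    merged = [(a + b + c) / 3.0
              for a, b, c in zip(compressed_out, selected_out, window_out)]
    return merged, chosen


if __name__ == "__main__":
    keys = [[float(i % 3), float(i % 5)] for i in range(16)]
    values = [[float(i), 1.0] for i in range(16)]
    output, blocks = sparse_attention_step([1.0, 0.5], keys, values)
    print("selected blocks:", blocks)
    print("output:", output)
```

With 16 visible tokens, this query scores 4 compressed keys, 8 selected tokens and 4 window tokens. Selecting whole contiguous blocks rather than scattered individual tokens is what keeps memory access regular.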

Technical Benefits of NSA

NSA’s design focuses on two main areas: hardware efficiency and ease of training. It uses a learnable multilayer perceptron for token compression, which captures key patterns without needing full-resolution processing. The token selection process minimizes random memory access, and the sliding window component ensures that important local details are retained.
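
As a rough picture of what MLP-based compression could look like, the sketch below maps each block of tokens to a single vector with a tiny two-layer perceptron. Everything here is a hypothetical stand-in: the class name, the layer sizes, the ReLU activation, the non-overlapping blocks and the random initialization are assumptions, and in a real model the weights would be learned during training rather than left at their initial values.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def init_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small uniform initialization, scaled by the input width."""
    scale = 1.0 / math.sqrt(cols)
    return [[rng.uniform(-scale, scale) for _ in range(cols)]
            for _ in range(rows)]


def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


class BlockCompressor:
    """Two-layer MLP: flattened block -> hidden (ReLU) -> one vector."""

    def __init__(self, block_size: int, dim: int, hidden: int,
                 seed: int = 0) -> None:
        rng = random.Random(seed)
        self.block_size = block_size
        self.dim = dim
        # These parameters are what training would update.
        self.w1 = init_matrix(hidden, block_size * dim, rng)
        self.b1 = [0.0] * hidden
        self.w2 = init_matrix(dim, hidden, rng)
        self.b2 = [0.0] * dim

    def __call__(self, block: List[Vector]) -> Vector:
        if len(block) != self.block_size:
            raise ValueError("block has the wrong number of tokens")
        flat = [x for token in block for x in token]
        hidden = [max(0.0, h) for h in linear(self.w1, self.b1, flat)]
        return linear(self.w2, self.b2, hidden)


def compress_sequence(tokens: List[Vector],
                      compressor: BlockCompressor) -> List[Vector]:
    """Compress non-overlapping blocks; a trailing partial block is dropped."""
    size = compressor.block_size
    usable = len(tokens) - len(tokens) % size
    return [compressor(tokens[s:s + size]) for s in range(0, usable, size)]


if __name__ == "__main__":
    compressor = BlockCompressor(block_size=4, dim=3, hidden=8)
    tokens = [[float(i), float(i) / 2.0, 1.0] for i in range(18)]
    summaries = compress_sequence(tokens, compressor)
    print(len(tokens), "tokens ->", len(summaries), "compressed vectors")
```

Because every block is reduced to one vector, a query that attends over the compressed sequence scores far fewer keys than it would at full resolution.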

By optimizing GPU resource usage, NSA significantly speeds up both training and inference. Experimental results show speedups of up to 9× in the forward pass and 6× in the backward pass on long sequences.

Proven Performance Across Tasks

Reported results show that NSA performs comparably to, or better than, full-attention models on benchmarks such as MMLU, GSM8K, and DROP. It does well in scenarios requiring both global awareness and local precision, maintaining high accuracy even on complex tasks with sequences of up to 64k tokens.

Key Takeaways

- NSA effectively combines token compression, selective attention, and sliding window processing.

- It offers a practical solution for efficiently handling long sequences without sacrificing accuracy.

Conclusion

NSA represents a significant advancement in sparse attention mechanisms. By merging trainability with hardware optimizations, it addresses the challenges of computational efficiency and effective long-context modeling. This innovative approach reduces computational overhead while maintaining essential context.