The Fire-Flyer AI-HPC Architecture: Revolutionizing Affordable, High-Performance Computing for AI

Addressing Industry Challenges

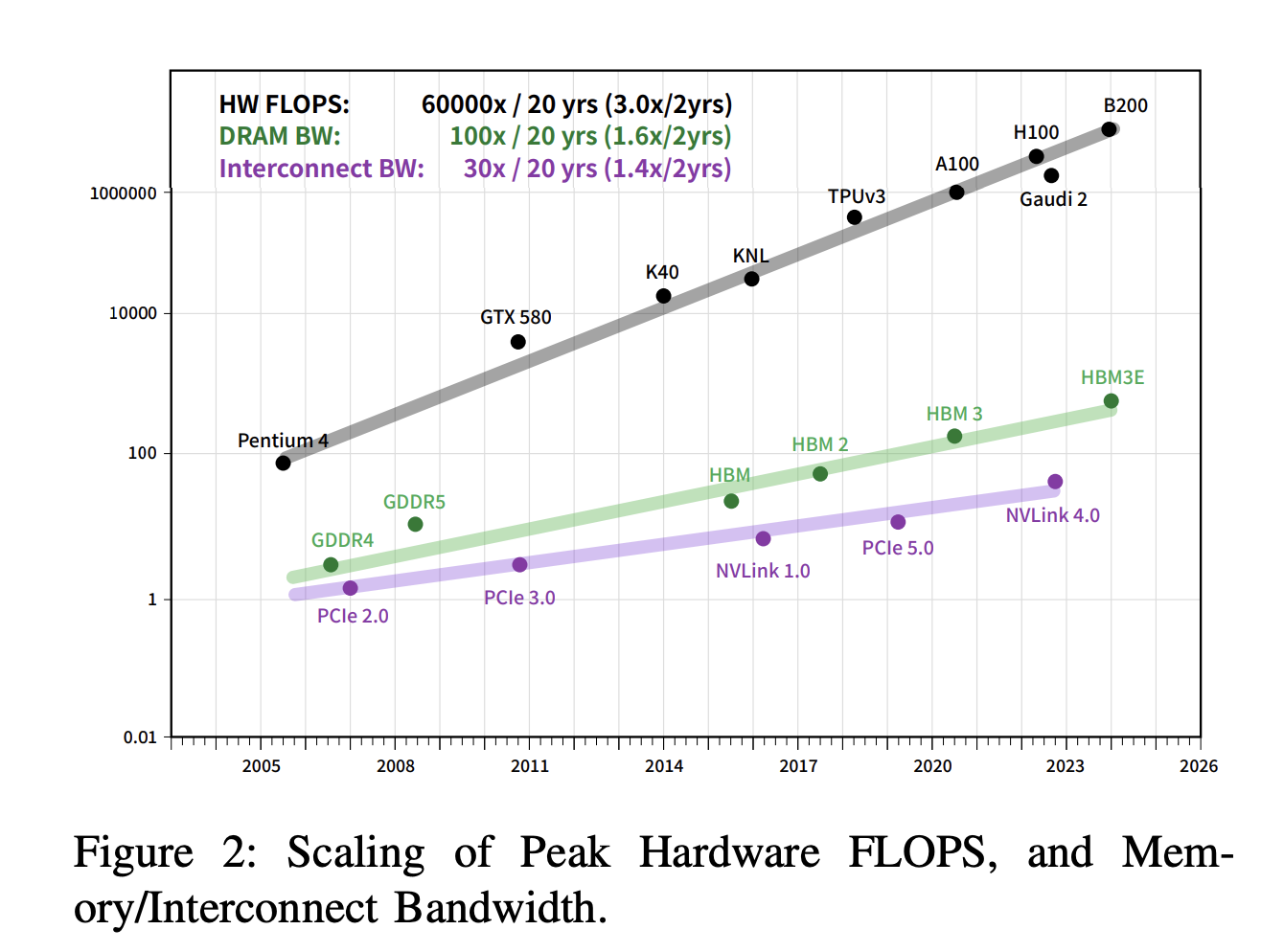

Advances in Large Language Models (LLMs) and deep learning (DL) have sharply increased demand for processing power and interconnect bandwidth. The high cost of building high-performance computing (HPC) systems is a significant obstacle for companies that want to expand their AI capabilities while keeping expenses under control.

Practical Solutions and Value

DeepSeek-AI’s Fire-Flyer AI-HPC architecture co-designs hardware and software to prioritize cost-effectiveness, energy efficiency, and performance. Fire-Flyer 2, deployed with 10,000 PCIe A100 GPUs, is reported to deliver performance approaching that of NVIDIA's DGX-A100 systems while reducing costs by 50% and energy consumption by 40%.
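To make the quoted savings concrete, the short Python sketch below converts the 50% cost and 40% energy reductions into performance per unit of cost and energy. The relative performance figure is a placeholder assumption (1.0 means parity with the baseline system), and `efficiency_gain` is a hypothetical helper name; substitute a measured value before drawing conclusions.

```python
def efficiency_gain(relative_performance: float, relative_resource: float) -> float:
    """Return performance per unit of resource, relative to a baseline of 1.0."""
    if relative_resource <= 0:
        raise ValueError("relative_resource must be positive")
    return relative_performance / relative_resource


# Ratios taken from the figures quoted in the article.
COST_RATIO = 0.5    # costs reduced by 50% -> half the baseline cost
ENERGY_RATIO = 0.6  # energy reduced by 40% -> 60% of baseline energy

# ASSUMPTION: placeholder only. 1.0 means "same performance as the baseline".
ASSUMED_RELATIVE_PERFORMANCE = 1.0

perf_per_cost = efficiency_gain(ASSUMED_RELATIVE_PERFORMANCE, COST_RATIO)
perf_per_energy = efficiency_gain(ASSUMED_RELATIVE_PERFORMANCE, ENERGY_RATIO)

print(f"Performance per unit cost:   {perf_per_cost:.2f}x baseline")
print(f"Performance per unit energy: {perf_per_energy:.2f}x baseline")
```

If the measured relative performance is below 1.0, both ratios shrink proportionally, so the headline savings should be read together with the performance figure.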

Key innovations include HFReduce, which accelerates allreduce communication across GPUs to sustain high throughput in large-scale training workloads, and the Computation-Storage Integrated Network, which is tuned to avoid congestion and so improve reliability and performance. On the software side, HaiScale, 3FS, and the HAI-Platform improve scalability and help manage complex workloads.
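The cross-GPU data exchange that HFReduce targets is typically a collective operation such as allreduce, where every GPU ends up holding the sum of all GPUs' gradients. The Python sketch below simulates a generic two-level allreduce (reduce within each node, combine across nodes, then broadcast back) using plain lists. It is not HFReduce's implementation: the two-level structure and the names `elementwise_sum` and `hierarchical_allreduce` are assumptions chosen for illustration.

```python
from typing import List

Vector = List[float]


def elementwise_sum(vectors: List[Vector]) -> Vector:
    """Sum equally sized vectors element by element."""
    return [sum(values) for values in zip(*vectors)]


def hierarchical_allreduce(nodes: List[List[Vector]]) -> List[List[Vector]]:
    """Simulate a two-level allreduce.

    nodes[n][g] is the gradient vector held by GPU g on node n.
    Returns the same shape, with every GPU holding the global sum.
    """
    # Step 1: reduce the gradients of all GPUs inside each node.
    node_partials = [elementwise_sum(gpus) for gpus in nodes]
    # Step 2: combine the per-node partial sums across nodes
    # (a real system would do this over the network).
    total = elementwise_sum(node_partials)
    # Step 3: hand a copy of the global sum back to every GPU.
    return [[list(total) for _ in gpus] for gpus in nodes]


if __name__ == "__main__":
    grads = [
        [[1.0, 2.0], [3.0, 4.0]],  # node 0: two GPUs
        [[5.0, 6.0], [7.0, 8.0]],  # node 1: two GPUs
    ]
    result = hierarchical_allreduce(grads)
    print(result[0][0])  # [16.0, 20.0]
```

Reducing inside a node first means only one partial sum per node has to cross the network, which is one common reason for choosing a hierarchical design.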

Conclusion and Call to Action

The Fire-Flyer AI-HPC architecture is a significant step toward affordable, high-performance computing for AI. By balancing cost, energy efficiency, and performance, it addresses the growing compute requirements of deep learning and LLMs, which makes it a useful reference point for companies adopting AI.

To stay competitive and use AI to support your company’s growth, consider evaluating DeepSeek-AI’s Fire-Flyer AI-HPC architecture. Explore how AI can reshape your business processes: identify automation opportunities, define KPIs, select suitable AI solutions, and roll them out gradually so that their effect on business outcomes can be measured.
