Understanding Retrieval-Augmented Generation (RAG)

Retrieval-augmented generation (RAG) improves how large language models (LLMs) perform tasks by supplying them with relevant external information at inference time. It combines information retrieval with generative modeling, which makes it useful for complex tasks such as machine translation, question answering, and content creation. By placing retrieved documents in the LLM’s context, RAG gives the model access to a wider range of data, so it can answer specialized queries more accurately. This is especially beneficial in industries where precise information is crucial.

Challenges in Managing Contextual Information

A key challenge for LLMs is managing large amounts of contextual information. As these models become more powerful, they are expected to synthesize large volumes of data without compromising response quality. However, adding too much external information can degrade performance, particularly when a model fails to retain important details across a long context. Optimizing LLMs for longer contexts is therefore essential as applications demand richer, data-driven interactions.

Current RAG Approaches

Most traditional RAG methods use a vector database to retrieve the document chunks most relevant to a user query. This works well for shorter contexts, but many open-source models lose accuracy as the context grows. Some advanced models can handle up to 32,000 tokens, yet better methods are still needed to manage longer contexts effectively.
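The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not the pipeline from the study: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the names `embed`, `cosine`, and `retrieve` are hypothetical.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): bag-of-words counts."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query, best match first."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]

chunks = [
    "RAG retrieves document chunks from a vector store",
    "Long contexts can degrade answer accuracy",
    "Bananas are rich in potassium",
]
top = retrieve("how does RAG retrieve chunks", chunks, k=1)
print(top)
```

A production system would replace `embed` with a learned embedding model and the linear scan with an approximate nearest-neighbor index, but the ranking logic is the same in spirit.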

Research Findings from Databricks Mosaic

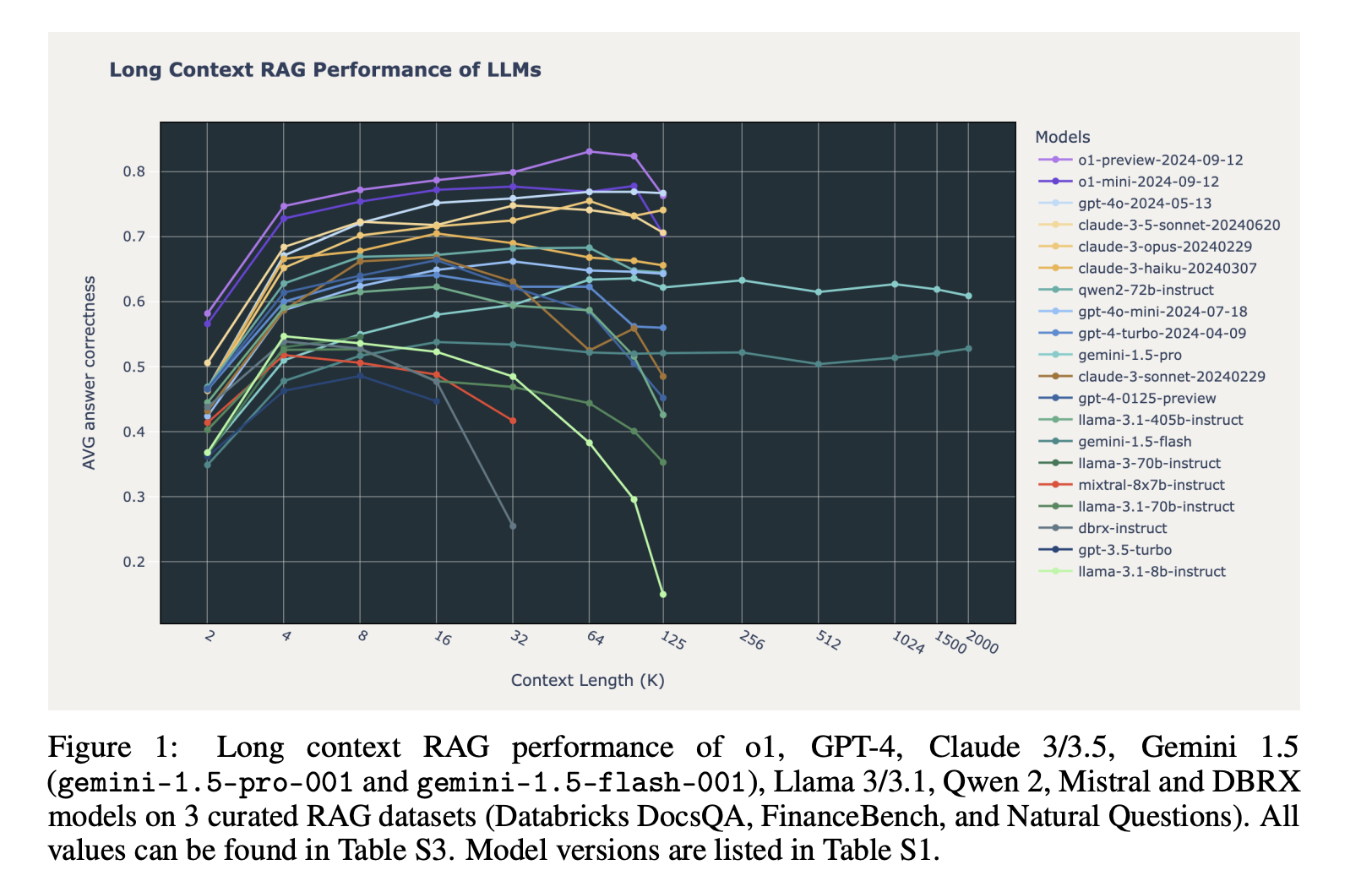

The research team at Databricks Mosaic evaluated RAG performance across a range of LLMs, including popular models such as OpenAI’s GPT-4 and Google’s Gemini 1.5. They tested how well these models maintained accuracy at context lengths ranging from 2,000 to 2 million tokens. The study aimed to identify which models excel in long-context scenarios, which matters for applications that require extensive data synthesis.

Methodology and Results

The researchers embedded document chunks using OpenAI’s text-embedding model and stored them in a vector store. Tests were conducted on specialized datasets relevant to RAG applications. The results showed significant differences in model performance. Some models, such as OpenAI’s o1-mini and Google’s Gemini 1.5 Pro, maintained high accuracy even at 100,000 tokens of context, while others struggled beyond 32,000 tokens.
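One way to vary context length in an experiment like this is to keep adding the highest-ranked chunks until a token budget is reached. The sketch below assumes that approach; the helper names `count_tokens` and `pack_context` are hypothetical, and the word-count tokenizer is a crude stand-in for a real one.

```python
def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    # A real pipeline would use the model's own tokenizer.
    return len(text.split())

def pack_context(ranked_chunks: list[str], budget: int) -> tuple[list[str], int]:
    """Take chunks in rank order until the next one would exceed the budget."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = count_tokens(chunk)
        if used + cost > budget:
            break
        selected.append(chunk)
        used += cost
    return selected, used

# Chunks are assumed to be sorted by relevance already.
ranked = ["alpha beta gamma", "delta epsilon", "zeta eta theta iota"]
for budget in (3, 5, 9):
    selected, used = pack_context(ranked, budget)
    print(f"budget={budget} chunks={len(selected)} tokens_used={used}")
```

Sweeping the budget, as in the loop above, gives one prompt per context length, so the same question can be scored at each size.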

Insights on Model Performance

Analysis revealed that not all models improved with longer contexts. Some, like Claude 3 Sonnet, often refused to respond due to copyright concerns, while others faced issues with safety filters. Open-source models like Llama 3.1 showed consistent failures in longer contexts. These patterns highlight the need for targeted improvements in long-context capabilities.
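To make failure patterns like these measurable, responses can be bucketed by failure mode and tallied. The sketch below is only an illustration: the labels and keyword rules are hypothetical assumptions, not the classification method used in the study, and a keyword match is a rough proxy at best.

```python
from collections import Counter

# Hypothetical keyword rules (assumption): label -> phrases that suggest it.
RULES = {
    "refusal_copyright": ("copyright",),
    "safety_filter": ("safety", "blocked"),
}

def classify(response: str) -> str:
    """Assign a coarse failure label to a model response."""
    text = response.lower()
    if not text.strip():
        return "empty"
    for label, keywords in RULES.items():
        if any(keyword in text for keyword in keywords):
            return label
    # "answered" only means no failure rule matched; it does not mean correct.
    return "answered"

responses = [
    "I can't reproduce that text because of copyright.",
    "The response was blocked by a safety filter.",
    "The capital of France is Paris.",
    "",
]
tally = Counter(classify(r) for r in responses)
print(tally)
```

Running the tally separately for each context length would show whether a given failure mode becomes more frequent as the context grows.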

Key Takeaways

- Performance Stability: Only a few commercial models maintained consistent performance beyond 100,000 tokens.

- Performance Decline in Open-Source Models: Many open-source models saw significant drops in performance beyond 32,000 tokens.

- Failure Patterns: Different models exhibited unique failure modes, often linked to context length and task demands.

- High-Cost Challenges: Long-context RAG can be expensive, with costs varying based on model and context length.

- Future Research Needs: More research is needed on context management, error handling, and cost reduction in RAG applications.
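The cost point can be made concrete with simple arithmetic: per-query cost grows roughly linearly with the number of context tokens sent to the model. In the sketch below the prices are hypothetical placeholders, not real vendor rates, and `cost_per_query` is an illustrative helper.

```python
def cost_per_query(context_tokens: int, output_tokens: int,
                   input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimated cost of one query, given prices per million tokens."""
    total = context_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000

# Hypothetical prices per million tokens (assumption, for illustration only).
PRICE_IN, PRICE_OUT = 1.0, 2.0

for context_tokens in (2_000, 32_000, 128_000):
    cost = cost_per_query(context_tokens, 500, PRICE_IN, PRICE_OUT)
    print(f"{context_tokens:>7} context tokens -> {cost:.3f} per query")
```

With these placeholder prices, moving from 2,000 to 128,000 context tokens raises the per-query cost by a factor of 43, which is why context length is worth tuning rather than maximizing.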

Conclusion

While longer context lengths offer exciting possibilities for LLMs, practical limitations remain. Advanced models like OpenAI’s o1 and Google’s Gemini 1.5 show promise, but broader applicability requires ongoing refinement. This research is a crucial step in understanding the challenges of scaling RAG systems for real-world use.

All credit for this research goes to the researchers of this project.
