Researchers at the University of Chicago have developed Nightshade, a tool that lets artists "poison" images so that AI models trained on them without consent learn corrupted associations. It makes changes to an image's pixels that are invisible to the human eye, causing a model to misread what the image depicts, and the damage can spread to related concepts. The tool could make AI companies more cautious about using images without permission, but it also highlights a vulnerability in text-to-image generators. The team plans to integrate Nightshade into Glaze, its tool that masks an image's style. Malicious actors could exploit the same vulnerability unless better labeling detectors or human review are put in place.

Data Poisoning Tool Helps Artists Punish AI Scrapers

Researchers at the University of Chicago have developed a tool called Nightshade that lets artists protect their work from being scraped and used by AI models without consent. Companies like OpenAI and Meta have faced criticism for training their AI models on online content without permission, and artists are concerned that their work is being replicated as a result.

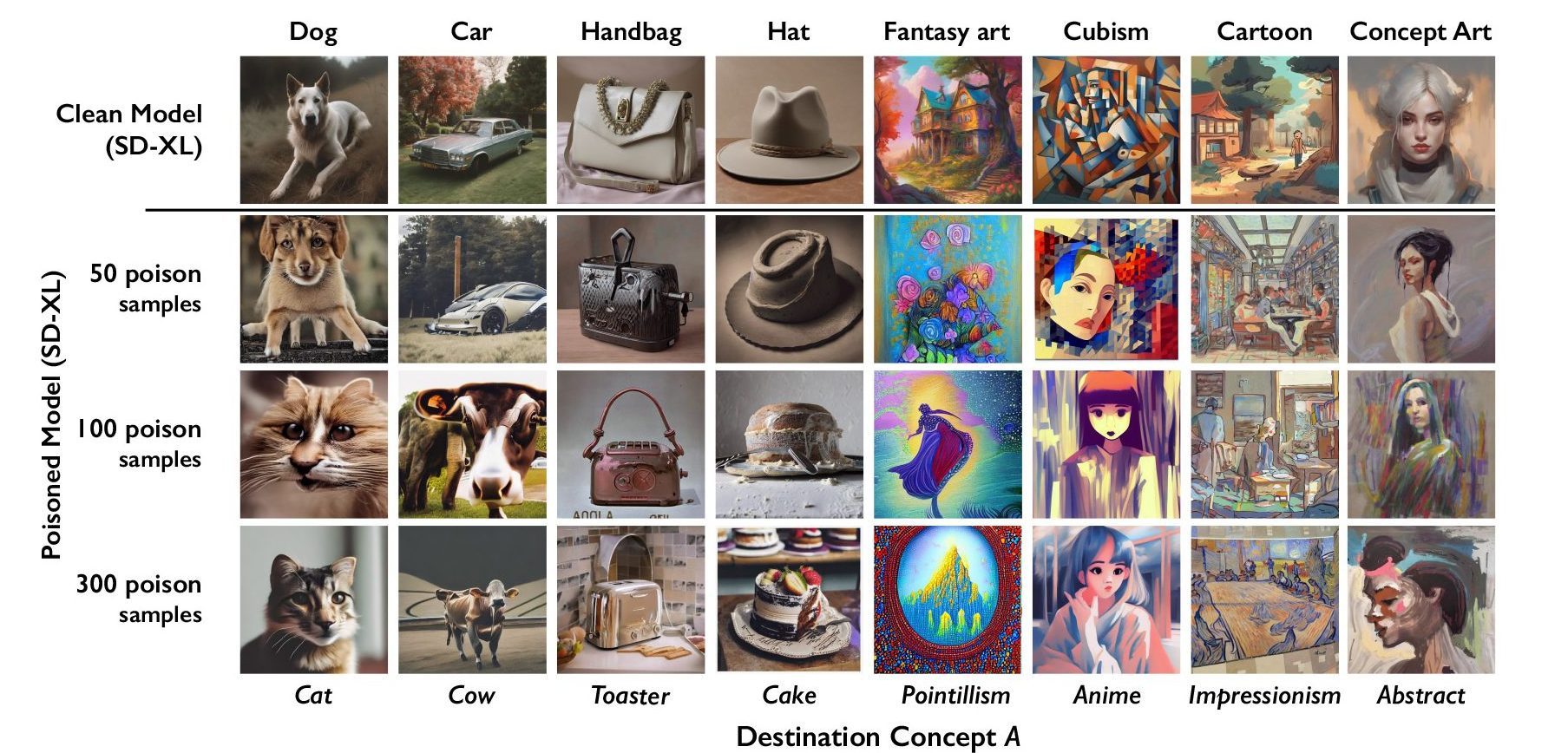

Nightshade works by making changes to an image's pixels that are invisible to the human eye but "poisonous" to AI models. When a model trained on large amounts of scraped visual data ingests an image poisoned by Nightshade, it can misclassify the image, which leads to errors and corruption in its output. For example, an image of a castle could be misclassified as an old truck.
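To make the idea of an "invisible" change concrete, here is a minimal sketch of a perturbation that stays within a small per-pixel budget. It is not Nightshade's algorithm: the real tool chooses its changes deliberately so that a model misreads the image, whereas this toy uses random noise. The function names, the `epsilon` budget, and the 8×8 grayscale test image are all assumptions made for illustration.

```python
import random


def perturb_image(pixels, epsilon=4, seed=0):
    """Return a copy of `pixels` (rows of 0-255 grayscale ints) in which
    every value moves by at most `epsilon` brightness levels.

    Hypothetical helper: the shifts here are random, not optimized.
    """
    rng = random.Random(seed)
    poisoned = []
    for row in pixels:
        new_row = []
        for value in row:
            shift = rng.randint(-epsilon, epsilon)
            # Clamp so the result is still a valid 8-bit pixel value.
            new_row.append(min(255, max(0, value + shift)))
        poisoned.append(new_row)
    return poisoned


def max_pixel_change(original, modified):
    """Largest absolute per-pixel difference between two images."""
    return max(
        abs(a - b)
        for row_o, row_m in zip(original, modified)
        for a, b in zip(row_o, row_m)
    )


# Toy 8x8 grayscale image, invented for this example.
image = [[(x * y) % 256 for x in range(8)] for y in range(8)]
poisoned = perturb_image(image, epsilon=4)
print(max_pixel_change(image, poisoned))  # never exceeds the epsilon budget
```

A change of a few brightness levels out of 256 is hard for a person to notice, which is the property the article describes; what makes a real poisoned image effective is how those small changes are chosen.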

The researchers found that as few as 100 poisoned images targeting a single prompt were enough to corrupt a model. The corruption can also spread to broader concepts, affecting terms related to the targeted label. If the "dog" label is corrupted, for example, terms like "puppy" would also be affected.
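One plausible way to picture this spillover is that a model represents related terms with similar internal vectors, so damage to one term reaches its neighbors. The sketch below illustrates that intuition only. The three-dimensional vectors, the `TOY_EMBEDDINGS` table, and the 0.8 threshold are invented for the example and are not taken from any real model or from the researchers' work.

```python
import math

# Hypothetical text embeddings, made up for illustration only.
TOY_EMBEDDINGS = {
    "dog": [0.9, 0.1, 0.0],
    "puppy": [0.8, 0.3, 0.1],
    "castle": [0.0, 0.2, 0.9],
    "truck": [0.1, 0.9, 0.2],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def related_terms(target, embeddings, threshold=0.8):
    """Terms whose embedding lies close to the target's embedding."""
    target_vec = embeddings[target]
    return sorted(
        term
        for term, vec in embeddings.items()
        if term != target and cosine_similarity(target_vec, vec) >= threshold
    )


# Terms that would plausibly be affected if "dog" were poisoned.
print(related_terms("dog", TOY_EMBEDDINGS))  # ['puppy']
```

In this toy setup "puppy" sits close to "dog" while "castle" and "truck" do not, which mirrors the article's point that poisoning one label can affect its near neighbors.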

Nightshade is bad news for AI image generators, since a model trained on poisoned images can produce incorrect outputs. The tool could make AI companies more cautious about obtaining consent before using images and encourage them to respect "do not scrape" opt-outs.

The research team plans to incorporate Nightshade into their Glaze tool, which masks the style of an image so that AI models read it as a different style. This makes an artist's style more difficult for AI models to replicate.

However, Nightshade also highlights a vulnerability in text-to-image generators that could be exploited by malicious actors. If a large number of images are poisoned and made available for scraping, AI models could be severely damaged. Better labeling detectors or human review are needed to mitigate this risk.
