Advancing Large Language Models (LLMs) with the Critic-CoT Framework

Enhancing AI Reasoning and Self-Critique Capabilities for Improved Performance

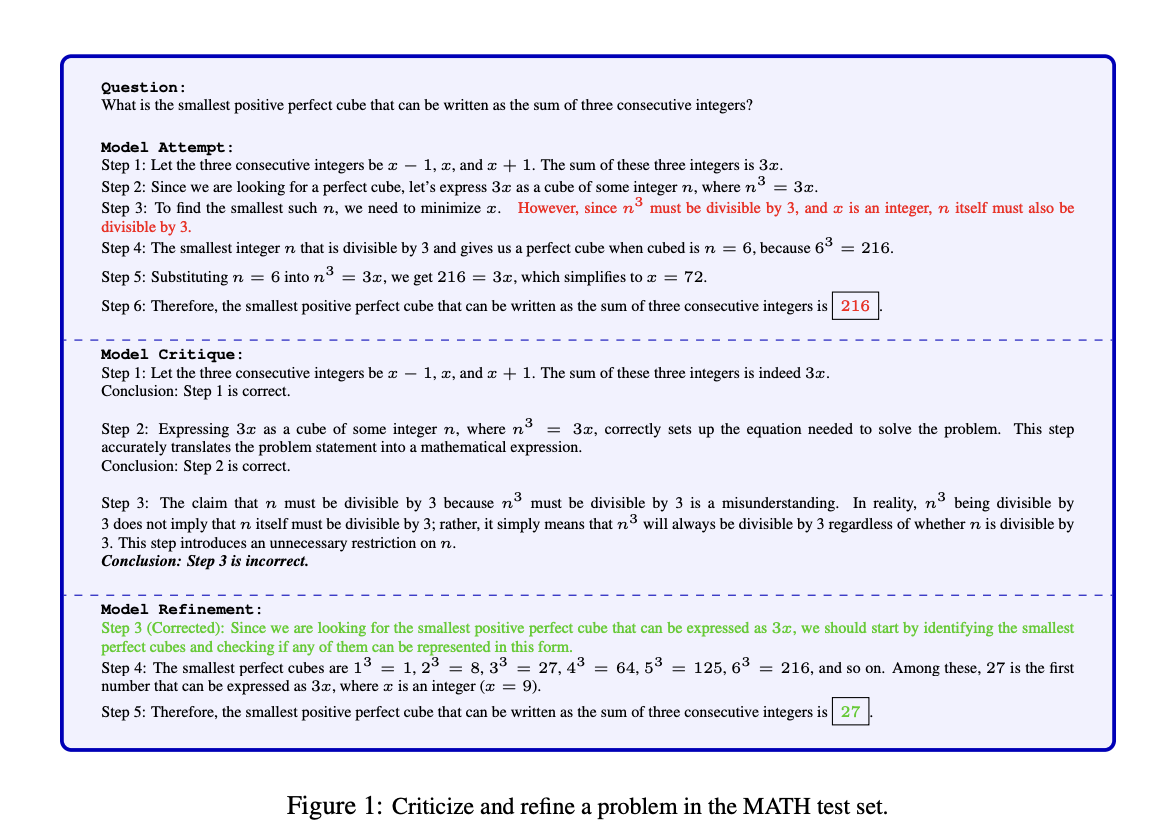

Much of the current progress in artificial intelligence centers on improving the reasoning capabilities of large language models (LLMs). The Critic-CoT framework addresses one part of that problem: it is designed to strengthen the self-critique abilities of LLMs, so that a system can both generate a solution and critically evaluate its own output. Its structured, iterative refinement process reduces the need for human intervention and improves the accuracy and reliability of AI reasoning on complex tasks.
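The critique-and-refine loop can be pictured with a minimal Python sketch. It is an illustration under stated assumptions, not the framework's actual implementation: the `critic` and `refiner` callables are hypothetical stand-ins for LLM calls, and the toy versions below only check simple `a + b = c` steps.

```python
from typing import Callable, List, Optional

# Hypothetical interfaces (assumptions for illustration; real ones would be LLM calls).
# A critic returns the index of the first faulty step, or None if it accepts the solution.
Critic = Callable[[str, List[str]], Optional[int]]
# A refiner returns a revised list of steps given the flagged step index.
Refiner = Callable[[str, List[str], int], List[str]]


def refine_until_accepted(
    problem: str,
    steps: List[str],
    critic: Critic,
    refiner: Refiner,
    max_rounds: int = 3,
) -> List[str]:
    """Alternate critique and revision until the critic accepts or rounds run out."""
    for _ in range(max_rounds):
        bad_step = critic(problem, steps)
        if bad_step is None:
            return steps  # critic found no faulty step
        steps = refiner(problem, steps, bad_step)
    return steps  # best effort after max_rounds revisions


def toy_critic(problem: str, steps: List[str]) -> Optional[int]:
    """Toy critic: flag the first step 'a + b = c' whose sum is wrong."""
    for i, step in enumerate(steps):
        lhs, rhs = step.split("=")
        a, b = lhs.split("+")
        if int(a) + int(b) != int(rhs):
            return i
    return None


def toy_refiner(problem: str, steps: List[str], bad_step: int) -> List[str]:
    """Toy refiner: recompute only the flagged step and keep the others."""
    a, b = steps[bad_step].split("=")[0].split("+")
    fixed = f"{a.strip()} + {b.strip()} = {int(a) + int(b)}"
    return steps[:bad_step] + [fixed] + steps[bad_step + 1:]


draft = ["2 + 3 = 6", "5 + 4 = 9"]
print(refine_until_accepted("toy problem", draft, toy_critic, toy_refiner))
```

The `max_rounds` cap is a design choice of this sketch: it keeps the loop bounded even when the critic never accepts a revision.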

Researchers evaluated the Critic-CoT framework in extensive experiments on datasets of grade-school and high-school math problems. Task-solving accuracy increased once the Critic-CoT model and its critic filter were applied. Because the approach reduces reliance on resource-intensive, impractical feedback mechanisms, it offers a scalable and efficient path for future AI development.
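One way to read the critic filter is as a screening step applied to several sampled solutions before a final answer is chosen. The sketch below assumes that reading: candidates rejected by the critic are dropped, and the remaining answers are put to a majority vote. The function name `critic_filtered_vote`, the `accepts` callable, and the fallback to an unfiltered vote are all assumptions made for illustration.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple


def critic_filtered_vote(
    candidates: List[Tuple[str, str]],
    accepts: Callable[[str], bool],
) -> Optional[str]:
    """Pick a final answer from (reasoning, answer) pairs.

    Answers whose reasoning the critic rejects are discarded first.
    If the critic rejects everything, fall back to voting over all
    candidates (an assumption of this sketch).
    """
    kept = [answer for reasoning, answer in candidates if accepts(reasoning)]
    pool = kept or [answer for _, answer in candidates]
    if not pool:
        return None  # no candidates were supplied
    return Counter(pool).most_common(1)[0][0]


# Toy data: the critic here is a stub that rejects reasoning marked "flawed".
samples = [("sound", "12"), ("flawed", "15"), ("flawed", "15")]
print(critic_filtered_vote(samples, lambda reasoning: reasoning != "flawed"))
```

In this toy run a plain majority vote would pick "15", while the filtered vote picks "12", which shows how discarding rejected reasoning chains can change the outcome.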

If you want to evolve your company with AI and stay competitive, consider using Critic-CoT to enhance the self-critique and reasoning capabilities of large language models for better accuracy and reliability. Discover how AI can redefine your way of work: identify automation opportunities, define KPIs, select an AI solution, and implement it gradually. For advice on AI KPI management, connect with us at hello@itinai.com, and for continuous insights into leveraging AI, follow us on Telegram at t.me/itinainews or on Twitter at @itinaicom.