Practical Solutions and Value of Using Multi-Agent Systems for Large Language Models (LLMs)

Context Window Limitations

Large Language Models (LLMs) struggle with complex tasks because all of their reasoning must fit inside a finite context window. When a multi-step problem is solved within a single context window, performance and accuracy tend to drop.

Subtask Decomposition

Subtask decomposition breaks a complex task into smaller subtasks. The model can then work on one simpler part at a time, which improves its performance and lets it complete the overall task more efficiently.

Generation Complexity

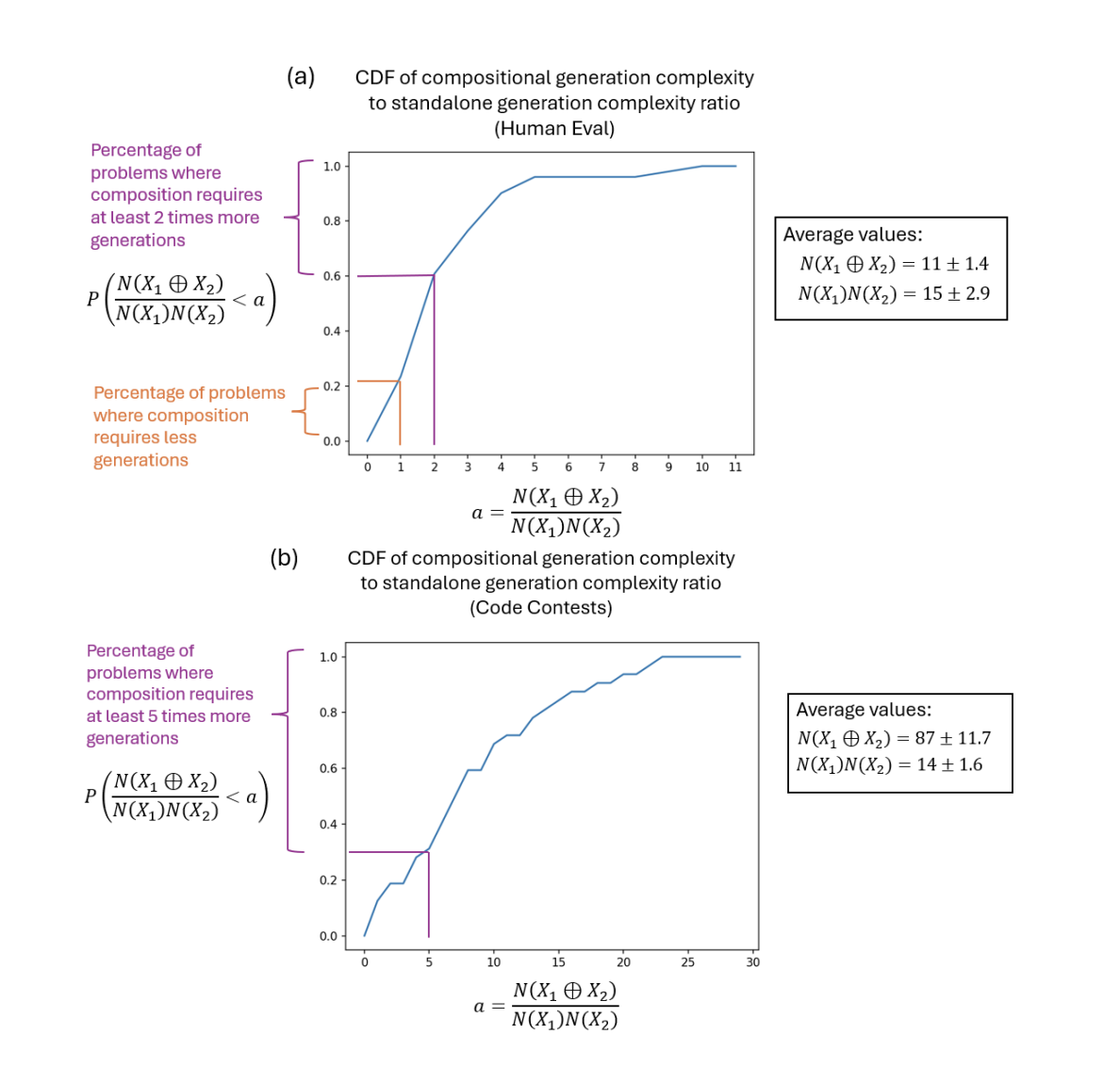

Generation complexity measures how many candidate answers an LLM must generate before it produces a correct one. For composite problems made up of several subtasks, generation complexity grows with both the difficulty of each subtask and the number of steps.
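To make the definition concrete, here is a minimal Python sketch that counts generations until a checker accepts an answer. The names `generation_count`, `stub_model`, and the `is_correct` checker are hypothetical and not from the original article, and the stub stands in for a real LLM call.

```python
from typing import Callable, Optional


def generation_count(
    generate: Callable[[int], str],
    is_correct: Callable[[str], bool],
    max_attempts: int = 100,
) -> Optional[int]:
    """Return the number of generations needed to reach a correct answer.

    Returns None if no correct answer appears within max_attempts.
    """
    for attempt in range(1, max_attempts + 1):
        if is_correct(generate(attempt)):
            return attempt
    return None


# Hypothetical stand-in for an LLM: wrong answers until the third attempt.
def stub_model(attempt: int) -> str:
    return "42" if attempt >= 3 else "wrong-" + str(attempt)


attempts_needed = generation_count(stub_model, lambda answer: answer == "42")
print(attempts_needed)  # prints 3
```

In practice the checker is the hard part: this sketch assumes a correct answer can be recognized automatically, which is not true of every task.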

Multi-Agent Systems

Running multiple LLM instances as a distributed system can ease both the context window limitation and generation complexity. Each agent handles one specific part of the problem, which can lead to faster and more accurate task completion.
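One possible shape for such a system is a simple chain in which each agent receives only the previous agent's output. The Python sketch below is illustrative only: the `Agent` type, `run_pipeline`, and the two stub agents are assumptions made for this example, and a real system would replace the stubs with calls to separate LLM instances.

```python
from typing import Callable, List

# Hypothetical agent interface: takes text in, returns text out.
Agent = Callable[[str], str]


def run_pipeline(task_input: str, agents: List[Agent]) -> str:
    """Pass an intermediate result through one agent per subtask."""
    result = task_input
    for agent in agents:
        # Each agent sees only the previous output, not the full history,
        # so no single context has to hold the whole problem.
        result = agent(result)
    return result


# Stub agents standing in for LLM instances (assumptions for illustration).
def extract_numbers(text: str) -> str:
    return " ".join(token for token in text.split() if token.isdigit())


def sum_numbers(text: str) -> str:
    return str(sum(int(token) for token in text.split()))


answer = run_pipeline("add 3 and 4 and 5", [extract_numbers, sum_numbers])
print(answer)  # prints 12
```

A linear chain is the simplest arrangement. Other layouts, such as a coordinator that fans subtasks out to agents in parallel, follow the same idea of keeping each agent's context small.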

Benefits of Multi-Agent Systems

Multi-agent systems let LLMs handle longer and more complex tasks efficiently. Because the task is divided among agents, generation complexity is kept from growing exponentially, which improves overall accuracy and performance.
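A toy calculation shows where the exponential growth can come from. The model below is an assumption for illustration, not the article's own analysis: each step succeeds independently with a fixed probability, a failed attempt is simply retried, and each agent's output can be checked on its own. Under those assumptions, a single context must get every step right in the same generation, while separate agents retry only their own step.

```python
import math
from typing import Sequence


def single_context_attempts(step_success: Sequence[float]) -> float:
    """Expected generations when all steps must succeed in one generation."""
    # The joint success probability is the product of the per-step values.
    return 1.0 / math.prod(step_success)


def per_agent_attempts(step_success: Sequence[float]) -> float:
    """Expected total generations when each agent retries only its own step."""
    return sum(1.0 / p for p in step_success)


for steps in (1, 5, 10):
    probabilities = [0.5] * steps  # assumed 50% success rate per step
    print(
        steps,
        single_context_attempts(probabilities),
        per_agent_attempts(probabilities),
    )
# prints:
# 1 2.0 2.0
# 5 32.0 10.0
# 10 1024.0 20.0
```

Under these assumptions the single-context cost doubles with every added step, while the per-agent cost grows by a constant amount. Real tasks rarely have independent steps or perfect per-step checks, so the numbers are only indicative.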

Conclusion

While LLMs show promise in solving analytical problems, their in-context limitations persist. Multi-agent systems offer a viable solution: by distributing subtasks among LLM instances, they increase precision and efficiency on complex problems.
