The Practical Value of Compositional GSM in Assessing AI Reasoning Capabilities

Overview:

Natural Language Processing (NLP) has advanced rapidly, with large language models (LLMs) now tackling challenging problems such as mathematical reasoning. However, whether their benchmark scores reflect genuine reasoning ability remains debated.

Key Innovations:

Researchers introduced Compositional Grade-School Math (Compositional GSM), a benchmark that evaluates LLM reasoning on interconnected problems rather than on the isolated, single-question problems used by traditional benchmarks.

Evaluation Method:

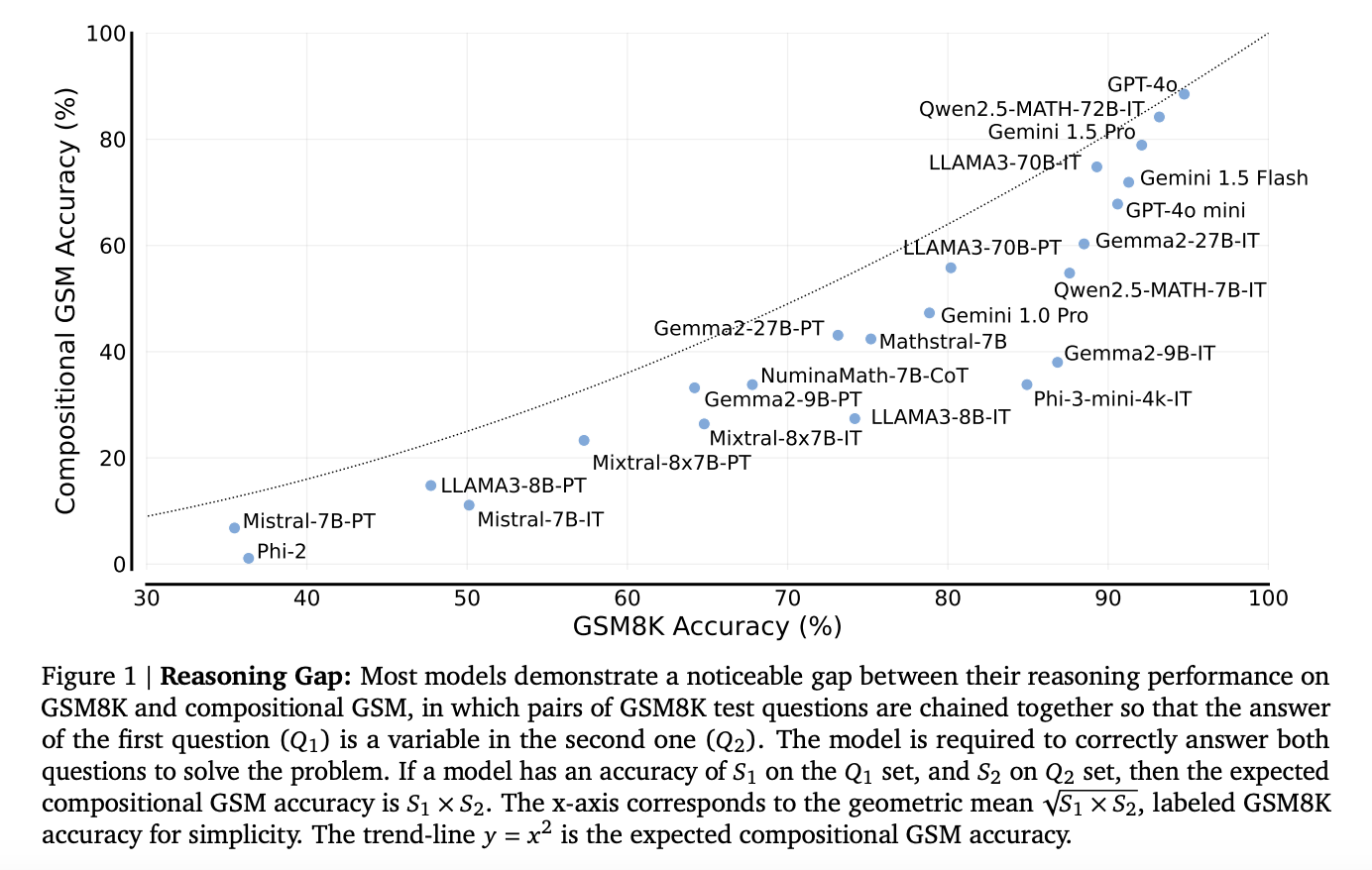

Compositional GSM links math problems so that solving one depends on the result of another. This tests whether a model can track these dependencies and reason step by step across multiple interconnected problems.
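The linking idea can be illustrated with a minimal sketch, assuming a two-question format in which the answer to the first question is substituted as a variable X into the second. The names `Problem`, `LinkedProblem`, `compose`, and `is_correct` are hypothetical, the exact-match scoring is a simplifying assumption, and the questions are invented examples rather than benchmark items.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Problem:
    """A single math question with a known integer answer."""
    question: str
    answer: int


@dataclass
class LinkedProblem:
    """Hypothetical second question whose answer depends on a variable X."""
    template: str                  # question text that mentions X
    solve: Callable[[int], int]    # computes the gold answer for a given X


def compose(q1: Problem, q2: LinkedProblem) -> Problem:
    """Chain two problems: the answer to q1 becomes the value of X in q2."""
    prompt = (
        f"Question 1: {q1.question}\n"
        "Let X be the answer to Question 1.\n"
        f"Question 2: {q2.template}\n"
        "Report the final answer to Question 2."
    )
    # The gold answer is reachable only by solving q1 first, then q2.
    return Problem(question=prompt, answer=q2.solve(q1.answer))


def is_correct(model_answer: str, gold: int) -> bool:
    """Exact-match scoring on the final integer (assumed scoring rule)."""
    try:
        return int(model_answer.strip()) == gold
    except ValueError:
        return False


q1 = Problem("Ann has 3 apples and buys 4 more. How many apples does she have?", 7)
q2 = LinkedProblem(
    "Tom has X marbles and gives away 2. How many does he have left?",
    solve=lambda x: x - 2,
)
pair = compose(q1, q2)
print(pair.answer)  # 5
```

Because the second question's answer is a function of the first, a single slip in Question 1 propagates into Question 2, which is exactly the dependency this format is meant to probe.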

Findings:

LLMs showed significant reasoning gaps: their accuracy on compositional problems fell short of what their performance on standard benchmarks would suggest, highlighting the need for improved training strategies.
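One simple way to make a "reasoning gap" concrete is sketched below, under an assumption stated here rather than taken from the text above: if a model solved the two linked questions independently, with accuracies s1 and s2, its expected accuracy on the chained pair would be s1 * s2. The gap is then the observed compositional accuracy minus that expectation, so a negative value means the model does worse on linked problems than its single-question scores predict. The function names are hypothetical and the toy results are invented for illustration, not reported findings.

```python
from typing import Sequence


def accuracy(results: Sequence[bool]) -> float:
    """Fraction of problems answered correctly."""
    if not results:
        raise ValueError("results must be non-empty")
    return sum(results) / len(results)


def reasoning_gap(
    q1_results: Sequence[bool],
    q2_results: Sequence[bool],
    comp_results: Sequence[bool],
) -> float:
    """Observed compositional accuracy minus the independence baseline s1 * s2.

    Negative values mean the model underperforms on linked problems
    relative to what its accuracy on the separate questions predicts.
    """
    s1 = accuracy(q1_results)
    s2 = accuracy(q2_results)
    s_comp = accuracy(comp_results)
    return s_comp - s1 * s2


# Hypothetical toy results: 90% on Q1, 80% on Q2, 50% on the linked pairs.
q1_results = [True] * 9 + [False]
q2_results = [True] * 8 + [False] * 2
comp_results = [True] * 5 + [False] * 5

gap = reasoning_gap(q1_results, q2_results, comp_results)
print(round(gap, 2))  # -0.22
```

Using the product s1 * s2 as the baseline separates genuine difficulty with composition from errors the model would make on each question anyway.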

Impact:

The analysis underscores the need to reassess how LLMs are evaluated, since standard benchmarks can overstate reasoning ability, and to strengthen models' compositional reasoning skills for complex, multi-step scenarios.

Next Steps:

Strengthen AI reasoning by evolving both benchmark design and training strategies, so that models can reliably solve multi-step problems.

Collaboration:

For AI KPI management advice and insights on leveraging AI, connect with us at hello@itinai.com.