Instead of fully retraining a large language model (LLM) for each task, you can fine-tune small LoRA adapters, which makes task-specific adaptation far cheaper. The article shows how to combine several LoRA adapters into a single adapter that handles multiple tasks, such as chatting and translating, on one LLM. The combination is a weighted merge of the adapters, performed by concatenation or by Singular Value Decomposition (SVD).


Add Skills to Your AI Without Major Overhaul: Simple Adapters

Problem: Fine-tuning an entire Large Language Model (LLM) is expensive.

Solution: Use LoRA adapters—small, trainable components added to a frozen LLM, tailoring it for specific tasks without the cost and complexity of fine-tuning the whole model.
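
To see why an adapter is so much cheaper to train, recall that LoRA leaves a weight matrix W frozen and learns its update as the product of two thin matrices, B and A. The sketch below only counts trainable parameters. The 4096 x 4096 layer size and the rank of 16 are illustrative assumptions, not values taken from the article.

```python
def full_param_count(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters of a LoRA pair: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


# Illustrative sizes only (assumed): one 4096 x 4096 projection, LoRA rank 16.
full = full_param_count(4096, 4096)
lora = lora_param_count(4096, 4096, 16)

# Prints: 16777216 131072 128
print(full, lora, full // lora)
```

For this single layer, the adapter trains 128 times fewer parameters than a full update would.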

Why Combine Adapters?

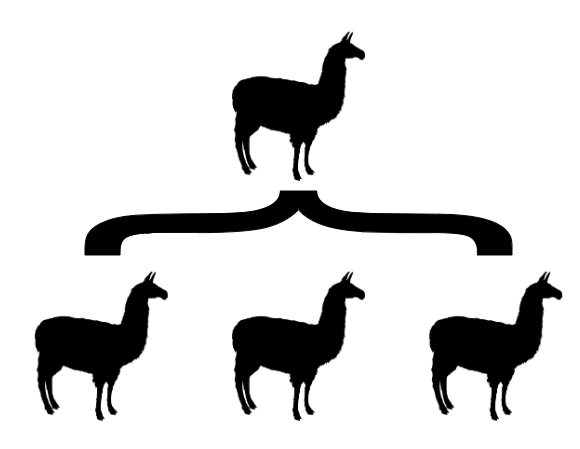

Combining adapters like pieces of a puzzle allows a single LLM to tackle multiple tasks—like translation and chatting—without re-training from scratch.

Case Study: Transform Llama 2 into a Dual-Task Powerhouse

Practical Value: A Llama 2 model loaded with a combined adapter can handle both translation and chat, so a single deployed model covers two use cases.

How to Add Adapters

An adapter only works with the base model it was trained on, so it’s crucial to match the adapter to the LLM’s exact version. For instance, an adapter fine-tuned for “Llama 2 7B” will integrate seamlessly with that model, but not with a different size or model family. One such adapter, fine-tuned with QLoRA, transforms Llama 2 into a specialized chat model.
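
One way to guard against a mismatch is to read the base model name stored in the adapter's configuration before attaching it. This is a minimal sketch, assuming the adapter was saved with PEFT and records a hub-style model name. The helper names `adapter_matches_base` and `load_checked_adapter` are hypothetical, and comparing names is only a heuristic, since an adapter may record a local path instead.

```python
from typing import Optional


def adapter_matches_base(adapter_base_name: Optional[str], base_model_name: str) -> bool:
    """Return True when the adapter reports the same base model we plan to load."""
    recorded = (adapter_base_name or "").strip().lower()
    return recorded == base_model_name.strip().lower()


def load_checked_adapter(base_model, base_model_name: str, adapter_id: str):
    """Attach an adapter to an already loaded base model after a name check."""
    # Imported lazily so the pure helper above works without peft installed.
    from peft import PeftConfig, PeftModel

    config = PeftConfig.from_pretrained(adapter_id)
    if not adapter_matches_base(config.base_model_name_or_path, base_model_name):
        raise ValueError(
            f"Adapter {adapter_id!r} was trained on "
            f"{config.base_model_name_or_path!r}, not {base_model_name!r}"
        )
    return PeftModel.from_pretrained(base_model, adapter_id)
```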

How to Combine Adapters

Use the PEFT library to merge adapters easily using methods like concatenation or SVD.
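
The idea behind concatenation can be checked on toy numbers. If each adapter contributes an update B_i A_i, then placing the weighted B blocks side by side and stacking the A blocks gives one larger adapter whose product equals the weighted sum of the individual updates. The sketch below illustrates that identity with tiny rank-1 adapters. It is not PEFT's actual implementation, and the matrices and weights are made up for illustration.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def weighted_sum_of_updates(pairs, weights):
    """Sum w_i * (B_i @ A_i) over all adapters: the update we want to reproduce."""
    total = None
    for (b, a), w in zip(pairs, weights):
        delta = [[w * v for v in row] for row in matmul(b, a)]
        total = delta if total is None else [
            [p + q for p, q in zip(r1, r2)] for r1, r2 in zip(total, delta)
        ]
    return total


def concatenate_adapters(pairs, weights):
    """Scale each B by its weight, put the B blocks side by side, stack the A blocks."""
    b_cat = [[] for _ in range(len(pairs[0][0]))]
    a_cat = []
    for (b, a), w in zip(pairs, weights):
        for i, row in enumerate(b):
            b_cat[i].extend(w * v for v in row)
        a_cat.extend(list(row) for row in a)
    return b_cat, a_cat


# Two made-up rank-1 adapters (B, A) for a 2 x 2 weight matrix.
chat = ([[1], [2]], [[3, 4]])
translate = ([[0], [1]], [[5, 6]])
weights = [2, 1]  # favor the first adapter

b_cat, a_cat = concatenate_adapters([chat, translate], weights)
print(matmul(b_cat, a_cat))                                  # [[6, 8], [17, 22]]
print(weighted_sum_of_updates([chat, translate], weights))   # [[6, 8], [17, 22]]
print(len(a_cat))                                            # 2: the ranks add up
```

Because the ranks add up, a concatenated adapter grows with every adapter merged into it. The SVD method instead factorizes the summed update again and keeps a chosen rank, trading some accuracy for a smaller adapter.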

Key Steps:

1. Install necessary tools (e.g., transformers, peft).

2. Load the base LLM and set up quantization, if needed.

3. Load and combine adapters, possibly adjusting weights to favor certain behaviors.

4. Test the combined adapter with relevant prompts to ensure proper function.
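
The steps above can be sketched with `transformers` and `peft` roughly as follows. Treat it as a sketch under stated assumptions rather than the article's exact code: the adapter paths are hypothetical placeholders, the 4-bit settings are one common choice, and `merge_plan` and `build_multitask_model` are helper names invented here. Only the `cat` and `svd` methods mentioned above are accepted.

```python
BASE_MODEL = "meta-llama/Llama-2-7b-hf"

# Hypothetical locations: replace with adapters that were trained on BASE_MODEL.
ADAPTER_PATHS = {
    "chat": "path/to/chat-adapter",
    "translate": "path/to/translation-adapter",
}


def merge_plan(weights: dict, combination_type: str = "cat") -> dict:
    """Validate the requested combination and return the arguments for PEFT."""
    if combination_type not in {"cat", "svd"}:
        raise ValueError(f"Unsupported combination type: {combination_type!r}")
    if len(weights) < 2:
        raise ValueError("Combining needs at least two adapters")
    return {
        "adapters": list(weights),
        "weights": [float(w) for w in weights.values()],
        "combination_type": combination_type,
    }


def build_multitask_model(plan: dict, adapter_paths: dict):
    """Load the quantized base model, attach every adapter, and merge them."""
    # Step 1 happens outside Python:
    #   pip install transformers peft accelerate bitsandbytes
    # Heavy imports are kept local so merge_plan() works without them.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    # Step 2: load the base LLM in 4-bit (assumed settings, adjust as needed).
    quantization = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.float16,
    )
    tokenizer = AutoTokenizer.from_pretrained(BASE_MODEL)
    model = AutoModelForCausalLM.from_pretrained(
        BASE_MODEL, quantization_config=quantization, device_map="auto"
    )

    # Step 3: load each adapter under its own name, then combine them.
    names = plan["adapters"]
    model = PeftModel.from_pretrained(
        model, adapter_paths[names[0]], adapter_name=names[0]
    )
    for name in names[1:]:
        model.load_adapter(adapter_paths[name], adapter_name=name)
    model.add_weighted_adapter(
        names,
        plan["weights"],
        adapter_name="multitask",
        combination_type=plan["combination_type"],
    )
    model.set_adapter("multitask")  # activate the merged adapter for step 4
    return model, tokenizer
```

Calling `build_multitask_model(merge_plan({"chat": 1.0, "translate": 1.0}), ADAPTER_PATHS)` would return a model with the merged adapter active. Raising one weight relative to the other is how you favor one behavior.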

Solution Spotlight: Multi-Task Adapters

A single multi-task adapter can save resources and streamline AI functions by managing different tasks. A few lines of code are enough to combine different adapters, each fine-tuned for a distinct task, into one multi-task adapter.

Value for Managers:

1. Cost-effective: No need for extensive re-training of the entire LLM.

2. Versatile: Equip your AI to handle diverse tasks with one solution.

3. Efficient: Quick combination of adapters means faster time to implementation.

In Practice: Test and Adjust

Experiment with generating responses using prompts for chat or translation. Tweak adapter weights and combination methods to optimize performance for your desired tasks.
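
A small test harness makes this experimentation repeatable. The sketch below is only an illustration: both prompt formats are assumptions and must be replaced with the formats your adapters were actually trained on, and `weight_pairs` is a hypothetical helper that lists candidate weightings to try.

```python
def chat_prompt(message: str) -> str:
    """Assumed chat format; use the one your chat adapter was trained with."""
    return f"### Human: {message}\n### Assistant:"


def translation_prompt(text: str) -> str:
    """Assumed translation format; use the one your translation adapter expects."""
    return f"{text} ###>"


def weight_pairs(steps: int) -> list:
    """Candidate (chat, translation) weights, excluding the all-or-nothing ends."""
    return [(i / steps, 1 - i / steps) for i in range(1, steps)]


def generate(model, tokenizer, prompt: str, max_new_tokens: int = 100) -> str:
    """Run one prompt through a model that has the combined adapter active."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# The weightings you might merge and compare, one combined adapter per pair.
for chat_weight, translation_weight in weight_pairs(4):
    print(chat_weight, translation_weight)
```

For each weighting, build a combined adapter, run the same chat and translation prompts through `generate`, and compare the outputs side by side.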

Wrap-Up: Combining multiple adapters is straightforward and cost-effective. It’s essential, however, to use adapters with significantly different prompt formats, so that the prompt itself tells the model which task is being requested and the behaviors do not get confused. Experimenting with weights during the combination process can fine-tune performance for your specific needs.



List of Useful Links:

- AI Lab in Telegram @aiscrumbot – free consultation

- Combine Multiple LoRA Adapters for Llama 2

- Towards Data Science – Medium

- Twitter – @itinaicom