Understanding Multimodal Large Language Models (MLLMs)

Multimodal large language models (MLLMs) are systems that take several types of input, such as text and images, and reason over them to solve tasks. However, they often struggle with complex problems because they lack structured, step-by-step reasoning, which leads to incomplete or unclear answers.

Current Challenges in MLLMs

Traditional reasoning methods in MLLMs face several issues:

- Prompt-based methods: These mimic human reasoning but struggle with difficult tasks.

- Plant-based methods: They seek reasoning paths but lack flexibility.

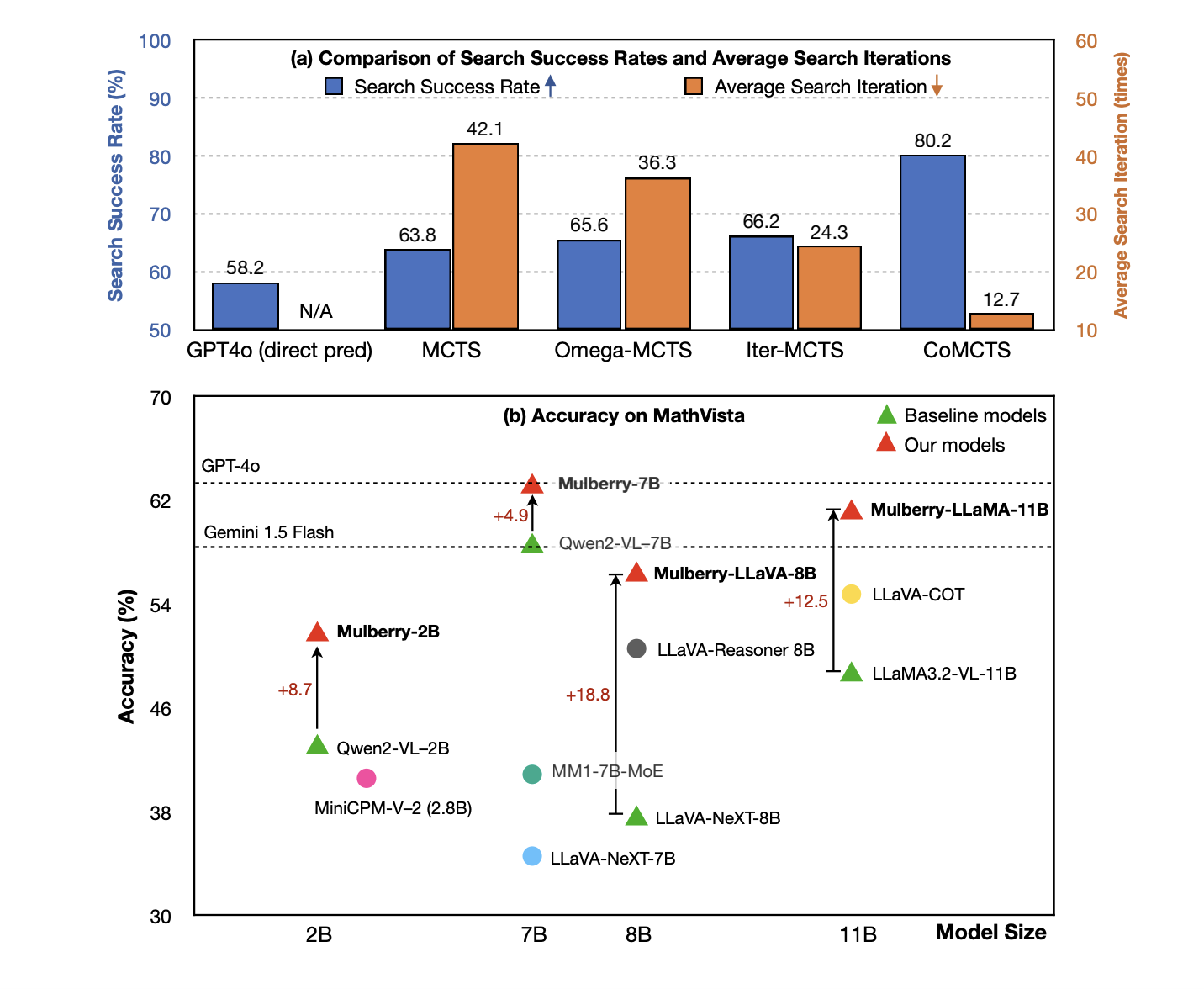

- Learning-based methods: Approaches like Monte Carlo Tree Search (MCTS) are too slow and don’t promote deep thinking.

- Direct prediction: Many models provide quick answers without showing their thought process.

Introducing CoMCTS: A Solution for MLLMs

A research team from leading universities developed CoMCTS (Collective Monte Carlo Tree Search), a framework that combines collective learning with tree search to find effective reasoning paths for MLLMs. Unlike traditional methods, CoMCTS uses a collaborative strategy: it draws on multiple pre-trained models to improve accuracy and reduce errors.

Four Key Steps of CoMCTS

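- Expansion: Multiple models propose candidate next reasoning steps from the current node in parallel, increasing the diversity of the search.

- Simulation: The candidate steps are evaluated and ineffective paths are pruned, which narrows the search.

- Backpropagation: Evaluation scores are passed back up the tree, updating the statistics of every node along the path so that later choices favor promising branches.

- Selection: A statistical score, such as the upper confidence bound (UCB), picks the next node to explore by balancing high-value nodes against rarely visited ones.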
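Together, the four steps form one search iteration that repeats until a budget is spent. The Python sketch below shows one way they could fit together; it is not the authors' implementation. Each MLLM is replaced by a plain function (the hypothetical `proposers` and `scorers`), the collective evaluation is assumed to be a simple average of scores, selection is assumed to use a standard UCB rule, and parameters such as `prune_below` and `max_depth` are illustrative.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical stand-ins for MLLMs (assumption, not the paper's interface):
# a proposer returns one candidate next step; a scorer rates a partial path in [0, 1].
Proposer = Callable[[List[str]], str]
Scorer = Callable[[List[str]], float]


@dataclass
class Node:
    steps: List[str]                  # question followed by the reasoning steps so far
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    score: float = 0.0                # collective evaluation of this node
    visits: int = 0
    value: float = 0.0                # running mean of scores backed up through this node


def ucb(node: Node, c: float) -> float:
    """Upper confidence bound: favor high value, but also rarely visited nodes."""
    if node.visits == 0:
        return float("inf")
    parent_visits = max(node.parent.visits, 1)
    return node.value + c * math.sqrt(math.log(parent_visits) / node.visits)


def backpropagate(node: Optional[Node], score: float) -> None:
    """Update visit counts and mean values from `node` up to the root."""
    while node is not None:
        node.visits += 1
        node.value += (score - node.value) / node.visits
        node = node.parent


def comcts_sketch(question: str, proposers: List[Proposer], scorers: List[Scorer],
                  iterations: int = 20, prune_below: float = 0.3,
                  max_depth: int = 3, c: float = 1.0) -> List[str]:
    root = Node(steps=[question])
    for _ in range(iterations):
        # Selection: descend by UCB until reaching a leaf.
        node = root
        while node.children:
            node = max(node.children, key=lambda ch: ucb(ch, c))
        # A leaf that already has max_depth steps is terminal: back up its score again.
        if len(node.steps) - 1 >= max_depth:
            backpropagate(node, node.score)
            continue
        # Expansion: every model proposes its own next step.
        for propose in proposers:
            child = Node(steps=node.steps + [propose(node.steps)], parent=node)
            # Simulation: average all scorers (assumed aggregation) and prune weak steps.
            child.score = sum(s(child.steps) for s in scorers) / len(scorers)
            if child.score < prune_below:
                continue
            node.children.append(child)
            # Backpropagation: push the new score up to the root.
            backpropagate(child, child.score)
    # Read out a path by following the highest-value child at each level.
    node = root
    while node.children:
        node = max(node.children, key=lambda ch: ch.value)
    return node.steps[1:]


# Toy models for illustration only.
def make_proposer(tag: str) -> Proposer:
    return lambda steps: f"{tag}-step{len(steps)}"


def prefers_good(steps: List[str]) -> float:
    return 1.0 if all(s.startswith("good") for s in steps[1:]) else 0.0


if __name__ == "__main__":
    path = comcts_sketch("Q?", [make_proposer("good"), make_proposer("bad")], [prefers_good])
    print(path)  # ['good-step1', 'good-step2', 'good-step3']
```

With these toy functions, every "bad" step scores 0.0 and is pruned during simulation, so the search keeps extending the "good" branch until it reaches `max_depth`.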

Mulberry-260K Dataset

The researchers created the Mulberry-260K dataset, which contains 260,000 multimodal questions that combine text and images across a range of subjects. Its questions require an average of 7.5 reasoning steps each, and it is used to train models on the reasoning paths that CoMCTS finds.
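To make the "average of 7.5 reasoning steps" figure concrete, here is a minimal Python sketch of how one question and its reasoning path might be stored, and how the per-question average is computed. The `ReasoningSample` fields are hypothetical and are not the dataset's published schema; the total-steps line is only a back-of-envelope estimate from the two figures quoted above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReasoningSample:
    """Hypothetical layout of one training example (not the released schema)."""
    question: str        # text part of the multimodal question
    image_path: str      # hypothetical reference to the accompanying image
    steps: List[str]     # explicit intermediate reasoning steps
    answer: str          # final answer


def average_steps(samples: List[ReasoningSample]) -> float:
    """Mean number of reasoning steps per sample."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(len(s.steps) for s in samples) / len(samples)


# Back-of-envelope scale: 260,000 questions x 7.5 steps is roughly 1.95 million steps.
NUM_QUESTIONS = 260_000
AVG_STEPS = 7.5
TOTAL_STEPS = round(NUM_QUESTIONS * AVG_STEPS)

if __name__ == "__main__":
    toy = [
        ReasoningSample("Q1", "img1.png", [f"s{i}" for i in range(7)], "A1"),
        ReasoningSample("Q2", "img2.png", [f"s{i}" for i in range(8)], "A2"),
    ]
    print(average_steps(toy))  # 7.5
    print(TOTAL_STEPS)         # 1950000
```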

Results and Performance Improvement

The CoMCTS framework showed significant performance boosts of up to 7.5% over existing models. It excelled in complex reasoning tasks and demonstrated a 63.8% improvement in evaluation performance.

Conclusion: The Value of CoMCTS

CoMCTS enhances reasoning capabilities in MLLMs by integrating collective learning with tree search methods. It provides a more efficient way to find reasoning paths, making it a valuable asset for future research and development in AI.
