Practical Solutions and Value of the Mixture of Agents (MoA) Framework in Finance

Introduction

Language model research has rapidly advanced, focusing on improving how models understand and process language, particularly in specialized fields like finance. Large Language Models (LLMs) have moved beyond basic classification tasks to become powerful tools capable of retrieving and generating complex knowledge. In finance, where the volume of data is immense and requires precise interpretation, LLMs are crucial for analyzing market trends, predicting outcomes, and providing decision-making support.

Challenges in the LLM Field

A central problem in LLM research is balancing cost against performance. LLMs are computationally expensive to run, and as they process larger data sets, the risk of producing inaccurate or misleading information increases. That risk matters most in fields like finance, where incorrect predictions can lead to significant losses.

MoA Framework

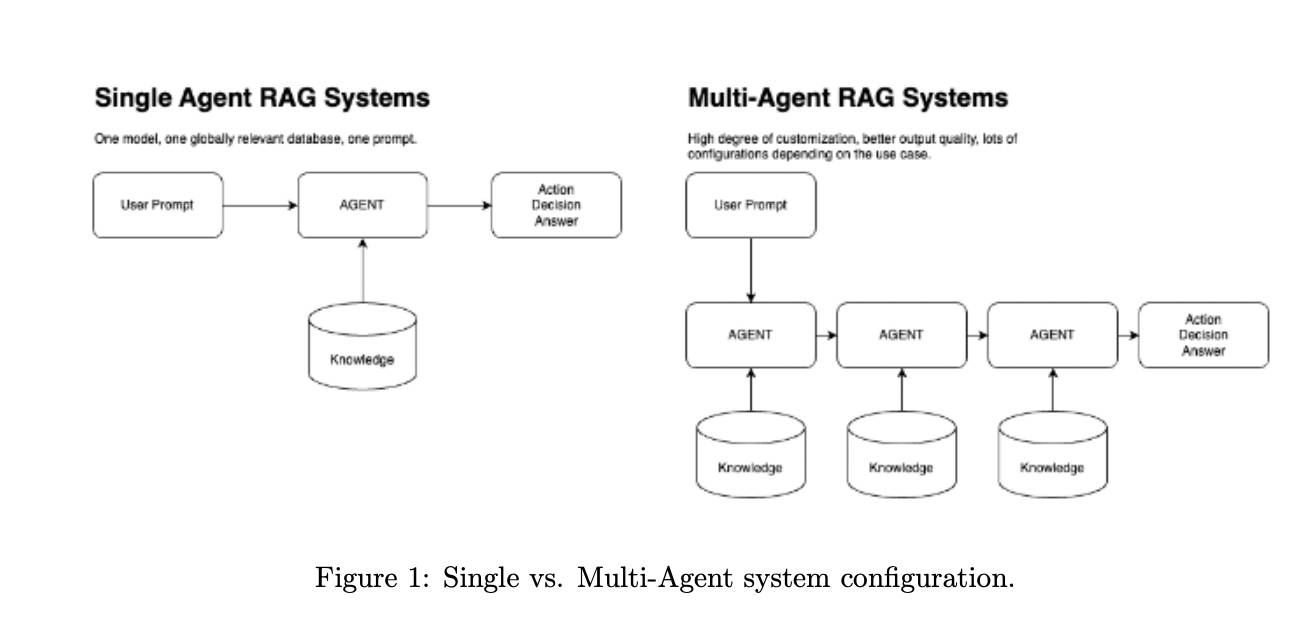

Researchers from the Vanguard IMFS team introduced a framework called Mixture of Agents (MoA) to overcome the limitations of traditional ensemble methods. MoA is a multi-agent system designed specifically for Retrieval-Augmented Generation (RAG) tasks. Rather than relying on a single model, MoA uses a collection of small, specialized models that coordinate with one another to answer complex questions with greater accuracy and at lower cost.
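The idea of several small, specialized agents feeding a combined answer can be illustrated with a minimal Python sketch. This is not the Vanguard implementation: the `Agent` class, the word-overlap retrieval, the `stub_generate` function (a stand-in for a call to a small language model), and the `aggregate` step are all hypothetical names and simplifications chosen for illustration, and the two sample documents are placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Hypothetical specialized agent: a name, its own documents, and a generator."""
    name: str
    documents: List[str]
    generate: Callable[[str, List[str]], str]  # stand-in for a small LLM call

    def retrieve(self, query: str, top_k: int = 2) -> List[str]:
        # Toy retrieval: rank documents by how many words they share with the query.
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(doc.lower().split())), doc)
            for doc in self.documents
        ]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        # Keep only documents that share at least one word with the query.
        return [doc for score, doc in ranked[:top_k] if score > 0]

    def answer(self, query: str) -> str:
        # Retrieval-augmented step: fetch context, then generate from it.
        return self.generate(query, self.retrieve(query))


def stub_generate(query: str, context: List[str]) -> str:
    # Placeholder for a model call: simply echoes the retrieved context.
    return " ".join(context) if context else "no relevant context"


def aggregate(responses: Dict[str, str]) -> str:
    # Assumed aggregation step: label each agent's response and join them.
    return "\n".join(f"[{name}] {text}" for name, text in responses.items())


def mixture_of_agents(query: str, agents: List[Agent]) -> str:
    # Every agent answers from its own documents; the results are then combined.
    responses = {agent.name: agent.answer(query) for agent in agents}
    return aggregate(responses)


# Placeholder agents and documents, for illustration only.
agents = [
    Agent("equities", ["equity earnings reports are released quarterly"], stub_generate),
    Agent("bonds", ["bond yields rise when bond prices fall"], stub_generate),
]
print(mixture_of_agents("what drives bond yields", agents))
```

In this sketch, adding or removing an agent only means changing the list passed to `mixture_of_agents`. A production system would replace the word-overlap retrieval and the stub generator with real retrieval and model calls, and would likely use a model for the aggregation step as well.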

Performance and Cost-Effectiveness

In terms of performance, the MoA system has shown significant improvements in response quality and efficiency compared to traditional single-model systems. The cost-effectiveness of MoA makes it highly suitable for large-scale financial applications, operating at a total monthly cost of under $8,000 while processing queries from a team of researchers.

Advantages of MoA

The MoA framework’s modular design allows companies to scale their operations based on budget and need, adding or removing agents as necessary. Because it can process large volumes of data quickly while maintaining high accuracy, MoA is well positioned to become a standard for enterprise-grade applications in finance and beyond.

Conclusion

The Mixture of Agents framework offers a powerful solution for improving the performance of large language models in finance. By using a collaborative, agent-based system, the researchers addressed critical issues of scalability, cost, and response accuracy. With its potential to change how research teams work and its cost savings compared to traditional methods, MoA represents a notable advance in LLM technology.