Understanding CodeLLMs and Their Limitations

Code large language models (CodeLLMs) are built and evaluated mainly for code generation, while code comprehension receives far less attention. Existing evaluation methods may be outdated, and data leakage (benchmark items appearing in training data) can make their results misleading. In practical use, these models also show bias and hallucination.

Introducing CodeMMLU

A team from FPT Software AI Center, Hanoi University of Science and Technology, and VNU-HCM University of Science has developed CodeMMLU. This new benchmark is designed to evaluate how well LLMs understand software and code.

Unlike traditional benchmarks, CodeMMLU assesses models on their ability to reason about code, not just generate it. This offers valuable insights into their understanding of complex software concepts, ultimately improving AI tools for software development.

Key Features of CodeMMLU

- Comprehensive Coverage: CodeMMLU includes over 10,000 questions drawn from diverse sources, which helps reduce bias in the dataset.

- Diverse Knowledge: The data spans various software topics, including QA, code generation, and defect detection, in more than 10 programming languages.

Benchmarking Methodology

CodeMMLU focuses on two main areas: knowledge-based tests and real-world programming problems. The knowledge tests cover a range from high-level software concepts to low-level language grammar. Questions are gathered from reputable sources like GeeksforGeeks and W3Schools.

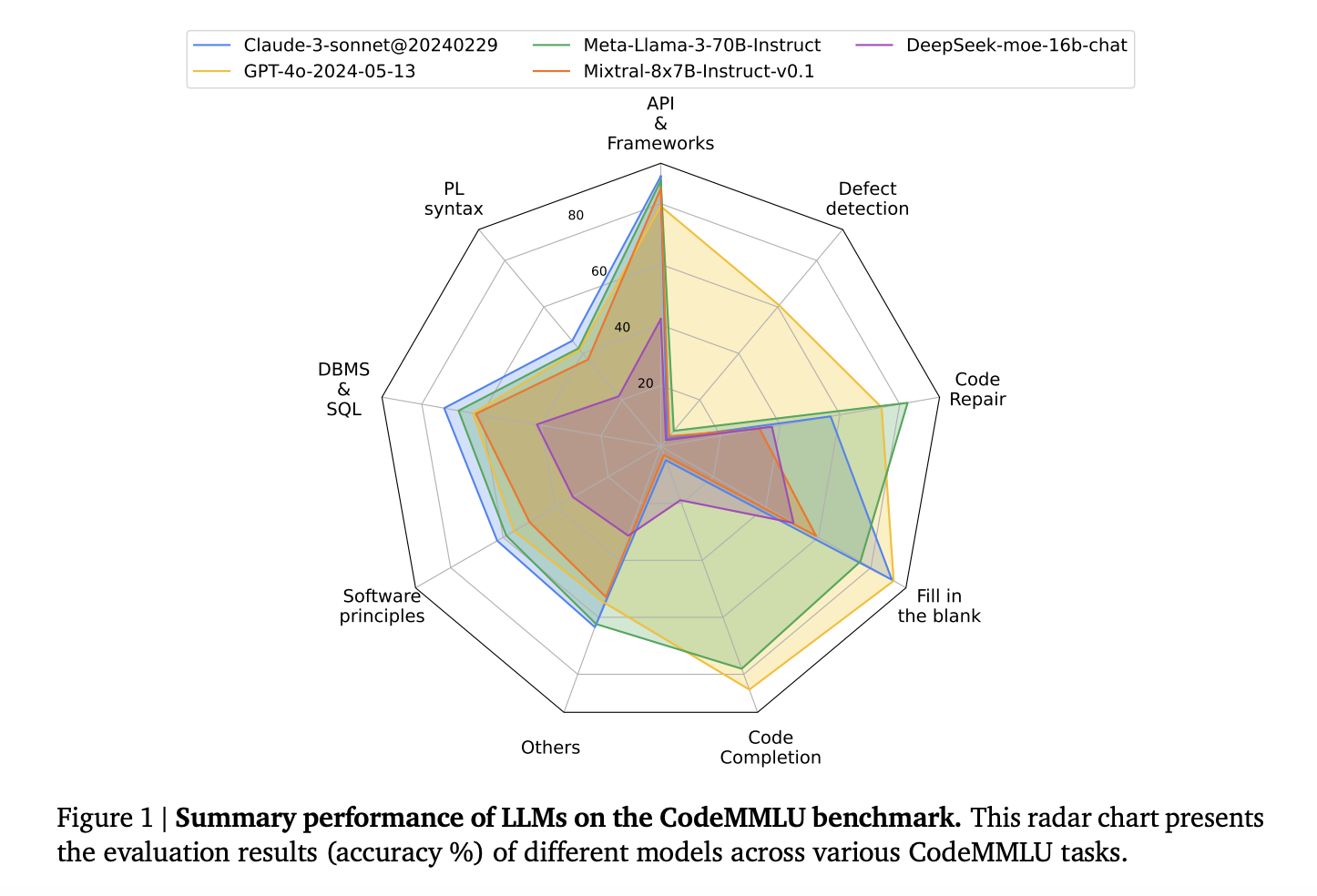

The benchmark evaluates skills through five multiple-choice question types, including code completion and defect detection.
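To make the multiple-choice format concrete, here is a minimal sketch of how such questions could be rendered and scored. It is not CodeMMLU's actual evaluation harness: the `MCQ` class, its field names, and the answer-extraction rule are all hypothetical and chosen only for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class MCQ:
    """One multiple-choice item. Field names are illustrative, not CodeMMLU's schema."""
    question: str
    choices: list
    answer: str  # gold option letter, e.g. "B"


def format_prompt(item: MCQ) -> str:
    """Render the question followed by lettered options (A, B, C, ...)."""
    lines = [item.question]
    for i, choice in enumerate(item.choices):
        lines.append(f"{chr(ord('A') + i)}. {choice}")
    lines.append("Answer:")
    return "\n".join(lines)


def extract_choice(response: str, num_choices: int) -> Optional[str]:
    """Return the first standalone uppercase option letter in the response, or None.

    This is a deliberately simple rule (an assumption of this sketch); it can
    misread a response that begins with the article "A".
    """
    last = chr(ord("A") + num_choices - 1)
    match = re.search(rf"\b([A-{last}])\b", response)
    return match.group(1) if match else None


def accuracy(items: list, responses: list) -> float:
    """Fraction of items whose extracted choice equals the gold answer."""
    if not items or len(items) != len(responses):
        raise ValueError("items and responses must be non-empty and equal in length")
    correct = sum(
        extract_choice(resp, len(item.choices)) == item.answer
        for item, resp in zip(items, responses)
    )
    return correct / len(items)


if __name__ == "__main__":
    # Made-up item and made-up model response, for demonstration only.
    item = MCQ(
        question="What does len([1, 2, 3]) return in Python?",
        choices=["2", "3", "4", "An error"],
        answer="B",
    )
    print(format_prompt(item))
    print(accuracy([item], ["The answer is B"]))
```

Scoring by option letter keeps grading deterministic, which is one reason multiple-choice formats are convenient for comprehension benchmarks; the trade-off is that results depend on how reliably the answer letter can be parsed from free-form model output.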

Performance Insights

Research shows a moderately strong positive link between scores on knowledge tests and performance in real-world coding tasks, with a Pearson correlation of r = 0.61. This suggests that models with a firmer grasp of software principles also tend to do better on practical coding challenges, although a correlation alone does not establish cause.
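For readers unfamiliar with the statistic, the sketch below shows how a Pearson correlation between two sets of per-model scores can be computed. The function name `pearson_r` and the score lists are hypothetical; the numbers are invented for illustration and are not CodeMMLU results.

```python
import math


def pearson_r(xs: list, ys: list) -> float:
    """Pearson correlation: covariance divided by the product of standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of centered products and squares; the 1/n factors cancel in the ratio.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Invented per-model scores (one entry per model), for demonstration only.
    knowledge_scores = [42.0, 55.5, 61.0, 48.3, 70.2]
    real_world_scores = [38.1, 47.0, 59.4, 50.2, 63.8]
    print(round(pearson_r(knowledge_scores, real_world_scores), 2))
```

A value of r near 1 means the two score sets rise together almost linearly, a value near 0 means no linear relationship, and a value near -1 means one falls as the other rises.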

Future Directions

While CodeMMLU provides thorough assessments, it has limitations such as not fully measuring creative coding abilities. Future plans include expanding the benchmark to cover more specialized areas and integrating complex tasks.

