Multimodal AI Benchmark: MMMU-Pro

Overview

Multimodal large language models (MLLMs) are increasingly used for tasks like medical image analysis and engineering diagnostics. However, existing benchmarks for evaluating MLLMs let models take shortcuts, such as answering from the question text alone without using the image, which raises doubts about how much multimodal understanding they actually measure.

Solution

To address this, researchers from Carnegie Mellon University and other institutions have introduced MMMU-Pro, an advanced benchmark designed to push the limits of AI systems’ multimodal understanding. This benchmark filters out questions solvable by text-only models, increases the difficulty of multimodal questions, and includes features like vision-only input scenarios and multiple-choice questions with augmented options.

Methodology

The construction of MMMU-Pro involved three steps: filtering out questions that text-only models could answer, increasing the number of answer options so that guessing is less effective, and introducing a vision-only input setting in which the question itself appears inside the image, so models must read and reason over textual and visual information together.
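The first two steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the `Question` type, the `TextOnlyModel` callable, the function names, and the `trials`, `min_models`, and `target` parameters are all hypothetical, and the default values are placeholders rather than the values used in the paper.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence


@dataclass(frozen=True)
class Question:
    """Hypothetical record for one multiple-choice question."""
    qid: str
    text: str
    options: tuple[str, ...]
    answer: str


# Assumption: a text-only model is any callable that sees the question
# (without its image) and returns the option it picks.
TextOnlyModel = Callable[[Question], str]


def is_text_solvable(
    question: Question,
    models: Sequence[TextOnlyModel],
    trials: int = 3,       # placeholder value
    min_models: int = 2,   # placeholder value
) -> bool:
    """True if enough text-only models answer correctly in most trials."""
    solved_by = 0
    for model in models:
        correct = sum(model(question) == question.answer for _ in range(trials))
        if correct > trials / 2:
            solved_by += 1
    return solved_by >= min_models


def filter_questions(
    questions: Sequence[Question],
    models: Sequence[TextOnlyModel],
    **kwargs: int,
) -> list[Question]:
    """Keep only questions that text-only models cannot reliably solve."""
    return [q for q in questions if not is_text_solvable(q, models, **kwargs)]


def augment_options(
    question: Question,
    distractors: Sequence[str],
    target: int = 10,      # placeholder value
) -> Question:
    """Append unused distractors until the question has `target` options."""
    missing = max(0, target - len(question.options))
    extra = [d for d in distractors if d not in question.options][:missing]
    return replace(question, options=question.options + tuple(extra))
```

In practice the distractors would need to be plausible and checked for correctness; here they are simply passed in by the caller.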

Performance Insights

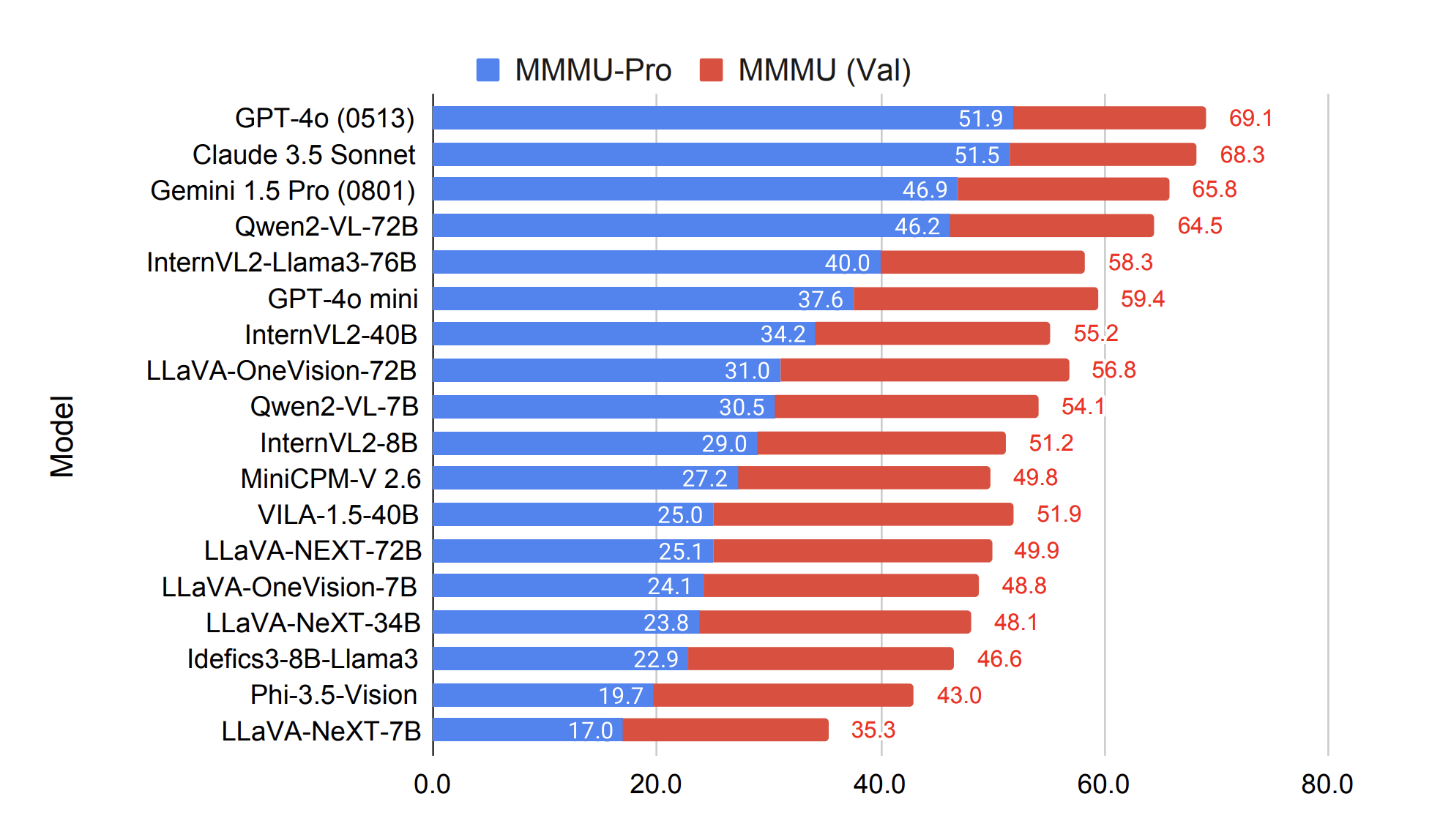

MMMU-Pro revealed the limitations of many state-of-the-art models, with significant drops in accuracy for models like GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro. Chain-of-Thought (CoT) prompting was also evaluated as a way to improve performance, but its effect varied across models.

Conclusion

MMMU-Pro is a more rigorous way to evaluate multimodal AI systems: it exposes limitations in existing models and presents a more realistic test of multimodal understanding. The benchmark opens new directions for research on AI systems that can perform sophisticated reasoning in real-world applications.

Check out the Paper and Leaderboard for more details.
