Efficient Long-Context Inference with LLMs

Understanding KV Cache Compression

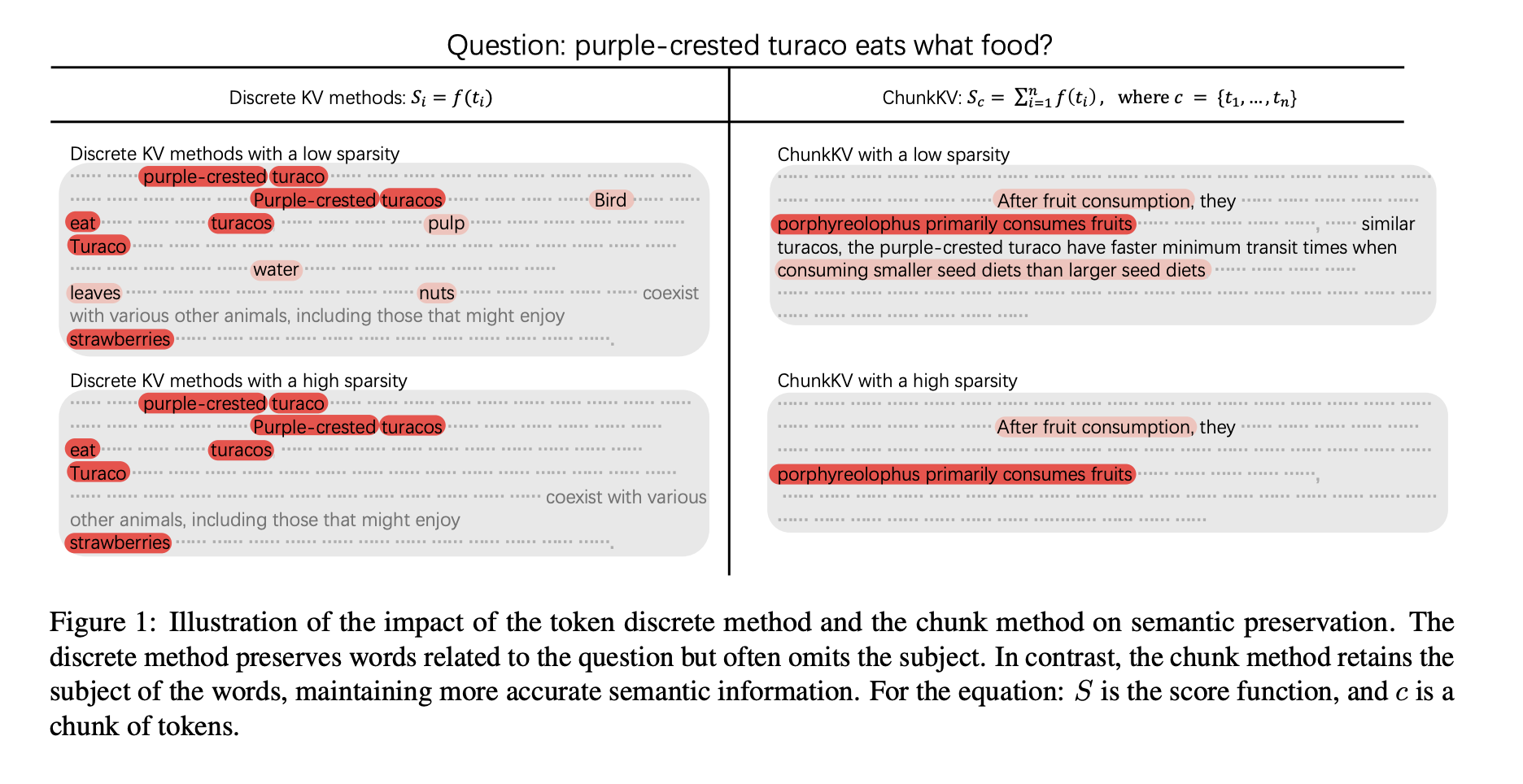

Managing GPU memory is essential for effective long-context inference with large language models (LLMs). Traditional techniques for key-value (KV) cache compression often discard less important tokens based on attention scores, which can lead to loss of meaningful information. A better approach is needed that keeps the relationships between tokens in mind to maintain semantic integrity.

Dynamic Solutions for Improved Memory Usage

New strategies like H2O and SnapKV focus on dynamic KV cache compression, which optimizes memory usage while still delivering strong performance. These methods use attention-based evaluations and organize text into meaningful segments. Additionally, techniques such as LISA and DoLa leverage insights from multiple transformer layers to further enhance efficiency.

Introducing ChunkKV

Researchers from Hong Kong University developed ChunkKV, a method that groups tokens into meaningful chunks instead of evaluating each token individually. This method not only reduces memory usage but also retains vital semantic information. ChunkKV has been shown to improve performance by up to 10% in various benchmarks while maintaining contextual meaning.

Key Benefits of ChunkKV

- Memory Efficiency: Reduces GPU memory usage by preserving important token groups.

- Semantic Preservation: Maintains critical information and context in long-text analysis.

- Improved Performance: Outperforms existing methods in preserving accuracy across various compression ratios.

- Layer-wise Optimization: Shares compressed indices across transformer layers for enhanced efficiency.

Benchmark Results

In evaluations on LongBench and Needle-In-A-Haystack, ChunkKV consistently outperformed other methods, showing its ability to retain key information and enhance throughput on A40 GPUs. Its optimal chunk size of 10 balances semantic preservation and compression efficiency, reducing latency by 20.7% and increasing throughput by 26.5%.

Elevate Your Business with AI

To stay competitive and leverage AI effectively, consider adopting ChunkKV for optimizing long-context inference. Here are some practical steps:

- Identify Opportunities: Look for key areas in customer interactions that could benefit from AI.

- Define Metrics: Ensure that your AI efforts have measurable impacts on your business.

- Select Solutions: Choose AI tools that fit your needs and allow customization.

- Implement Gradually: Start small with pilot projects, gather data, and expand usage wisely.

Connect with Us

For AI KPI management advice, reach out at hello@itinai.com. Stay updated on leveraging AI by following us on Telegram or Twitter @itinaicom.

Discover More

Discover how AI can transform your sales processes and improve customer engagement. Visit us at itinai.com for more solutions.