Understanding the Challenges of Serving Large Language Models (LLMs)

As Large Language Models (LLMs) grow larger and more widely used, companies that offer them through Model-as-a-Service (MaaS) face a difficult serving problem. Request volume and request shapes (for example, prompt length) vary widely, which makes it hard to allocate GPU resources efficiently. Providers must meet latency Service Level Objectives (SLOs), such as time to first token and time between tokens, while keeping throughput high, especially during peak periods when demand spikes.

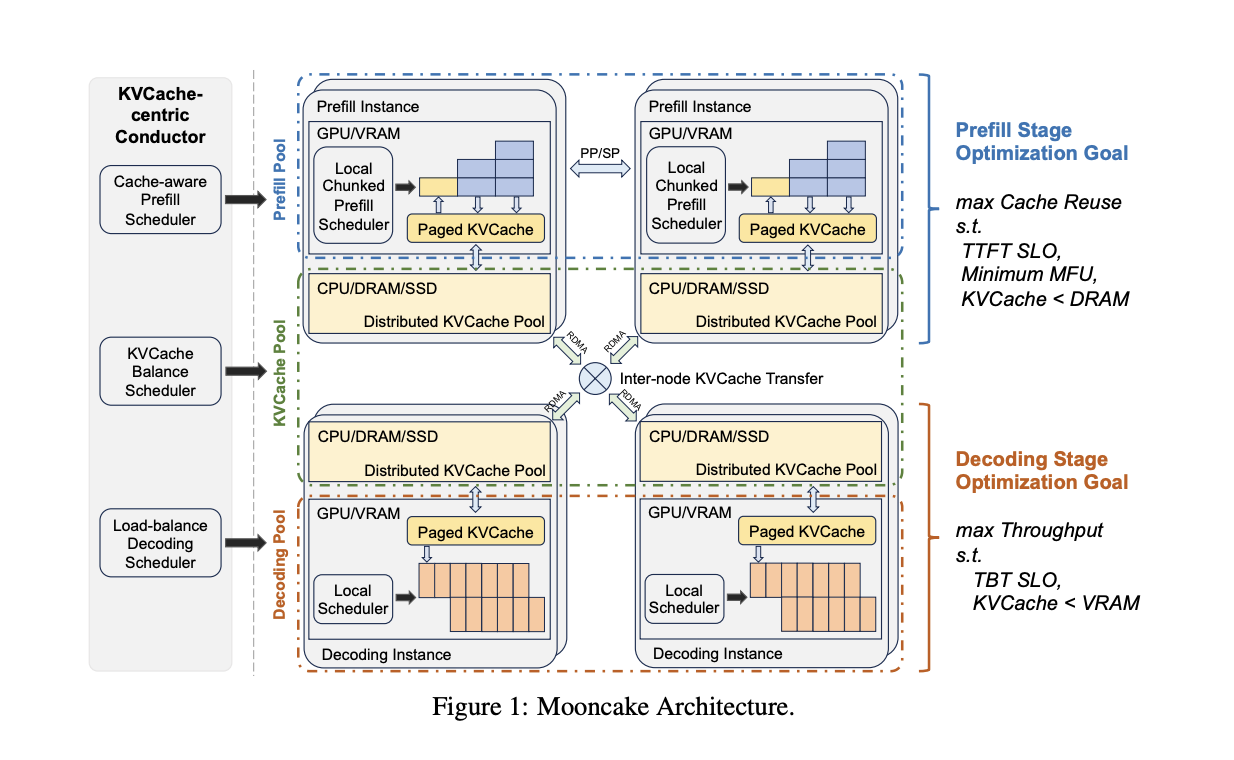

Introducing Mooncake by Moonshot AI

Moonshot AI, a China-based company, has open-sourced Mooncake, the inference-serving architecture behind its Kimi chatbot. Mooncake is designed to address scalability and efficiency problems in LLM serving. Its first open-sourced component, the Transfer Engine, is now available on GitHub, and more components are planned.

Key Features of Mooncake

- KVCache-Centric Design: Mooncake organizes serving around the KVCache (the attention key/value tensors computed for each token) and pools underutilized CPU, DRAM, and SSD resources in the GPU cluster into a disaggregated KVCache store.

- Improved Throughput: By decoupling KVCache storage from GPU computation, Mooncake can reuse previously computed results and keep GPUs focused on new work, raising throughput.

- Two-Stage Serving: The architecture splits LLM serving into separate prefill and decoding stages running on separate clusters, so each can be optimized independently, and reusing cached KVCache avoids recomputing shared prompt prefixes.

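- Early Rejection Policy: When the system is overloaded, Mooncake rejects requests up front that it predicts cannot meet their SLOs instead of spending compute on them, which keeps response times within SLOs for the requests it accepts.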
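To make the KVCache-centric idea concrete, here is a minimal Python sketch of prefix reuse, assuming a chained block-hash scheme similar to common prefix caching: a prompt is split into fixed-size token blocks, each block's hash depends on all preceding tokens, and leading blocks already present in the cache do not need to be prefilled again. `KVCachePool`, `block_hashes`, and `BLOCK_SIZE` are hypothetical names for illustration, not Mooncake's API, and the stored values are placeholders rather than real KV tensors.

```python
import hashlib
from typing import Dict, List, Sequence

# Hypothetical block size; production systems use much larger blocks.
BLOCK_SIZE = 4


def block_hashes(tokens: Sequence[int], block_size: int = BLOCK_SIZE) -> List[str]:
    """Hash each full block, chaining in the previous hash so a block's
    identity depends on its entire prefix, not just its own tokens."""
    hashes: List[str] = []
    prev = ""
    full = len(tokens) - len(tokens) % block_size  # ignore a trailing partial block
    for start in range(0, full, block_size):
        block = ",".join(str(t) for t in tokens[start:start + block_size])
        digest = hashlib.sha256(f"{prev}|{block}".encode()).hexdigest()
        hashes.append(digest)
        prev = digest
    return hashes


class KVCachePool:
    """Toy stand-in for a disaggregated KVCache store (e.g. pooled CPU DRAM/SSD)."""

    def __init__(self, block_size: int = BLOCK_SIZE) -> None:
        self.block_size = block_size
        self._blocks: Dict[str, str] = {}  # block hash -> placeholder for KV tensors

    def store(self, tokens: Sequence[int]) -> None:
        """Record the KVCache blocks produced by prefilling `tokens`."""
        for h in block_hashes(tokens, self.block_size):
            self._blocks.setdefault(h, "kv")

    def cached_prefix_len(self, tokens: Sequence[int]) -> int:
        """Count leading tokens whose KVCache can be reused instead of recomputed."""
        hit = 0
        for h in block_hashes(tokens, self.block_size):
            if h not in self._blocks:
                break
            hit += self.block_size
        return hit


pool = KVCachePool()
pool.store([1, 2, 3, 4, 5, 6, 7, 8, 9])  # two full blocks get cached
prompt = [1, 2, 3, 4, 5, 6, 7, 8, 10, 11, 12, 13]
reused = pool.cached_prefix_len(prompt)
print(reused, len(prompt) - reused)  # → 8 4 (8 tokens reused, 4 left to prefill)
```

Because the hashes are chained, two prompts share cached blocks only up to the first block where they diverge, which matches the fact that a token's keys and values depend on every token before it.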

Significant Performance Improvements

Mooncake has demonstrated substantial gains: up to a fivefold increase in throughput in certain simulated scenarios while meeting SLOs, and, under real workloads, enabling Moonshot's Kimi service to handle 75% more requests. This efficiency matters as demand for LLM capabilities grows across industries.

Benefits of Mooncake’s Architecture and Open-Source Release

- Decentralization: Distributing KVCache storage and computation across the cluster keeps any single hardware component from becoming a bottleneck.

- Resource Balancing: KVCache-aware scheduling spreads load across instances, maximizing throughput while meeting latency SLOs.

- Flexibility: Because prefill, decoding, and cache resources are disaggregated, each can be scaled independently as workloads change.

- Collaboration: The phased rollout encourages community input for continuous improvement.
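The resource-balancing and early-rejection ideas can be illustrated together with a small scheduler sketch. It assumes each prefill instance reports its queued tokens, how much of the new prompt it already has cached, and a fixed prefill throughput, and it estimates time to first token (TTFT) as queueing delay plus the time to prefill the uncached part of the prompt. All names and numbers are hypothetical; Mooncake's real scheduler is more involved, and this sketch models only the prefill side.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PrefillInstance:
    name: str
    queued_tokens: int        # tokens already waiting to be prefilled here (assumed metric)
    cached_prefix: int        # leading prompt tokens already cached here (assumed metric)
    tokens_per_second: float  # assumed constant prefill throughput


@dataclass
class Decision:
    instance: Optional[str]   # None means the request was rejected early
    est_ttft_s: float


def schedule(prompt_len: int, instances: List[PrefillInstance], ttft_slo_s: float) -> Decision:
    """Pick the instance with the lowest estimated TTFT, or reject early.

    If even the best instance would miss the TTFT SLO, the request is
    rejected up front instead of consuming prefill compute.
    """
    best_name: Optional[str] = None
    best_ttft = float("inf")
    for inst in instances:
        uncached = max(prompt_len - inst.cached_prefix, 0)
        ttft = (inst.queued_tokens + uncached) / inst.tokens_per_second
        if ttft < best_ttft:
            best_name, best_ttft = inst.name, ttft
    if best_name is None or best_ttft > ttft_slo_s:
        return Decision(instance=None, est_ttft_s=best_ttft)
    return Decision(instance=best_name, est_ttft_s=best_ttft)


instances = [
    PrefillInstance("p0", queued_tokens=20_000, cached_prefix=0, tokens_per_second=10_000.0),
    PrefillInstance("p1", queued_tokens=25_000, cached_prefix=8_000, tokens_per_second=10_000.0),
]
print(schedule(10_000, instances, ttft_slo_s=4.0))  # p1 wins: its cache hit outweighs its longer queue
print(schedule(10_000, instances, ttft_slo_s=2.0))  # rejected: best estimate exceeds the SLO
```

Choosing by estimated TTFT rather than by queue length alone is what lets cache hits translate into lower latency, and rejecting at arrival avoids spending prefill compute on requests that would miss their SLO anyway.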

Conclusion

Moonshot AI’s open-source release of Mooncake is a notable step toward transparent, scalable AI infrastructure. By managing resources around the KVCache, Mooncake addresses key challenges in LLM serving, improving performance and lowering costs. The architecture is a promising option for companies that need to serve LLMs efficiently.

Get Involved and Stay Updated

Explore the research paper and GitHub page for more details.