Enhancing AI Reasoning with Chain-of-Associated-Thoughts (CoAT)

Transforming AI Capabilities

Large language models (LLMs) have changed the landscape of artificial intelligence by excelling at text generation and problem-solving. However, they typically answer a query in a single pass, without revising their reasoning as new information becomes relevant. This makes complex tasks that need real-time knowledge updates harder, such as answering multi-step questions or adapting code to new context.

Challenges in Current LLM Enhancements

Current methods to improve LLM reasoning fall into two main types:

– **Retrieval-Augmented Generation (RAG)**: These systems retrieve external information before generation begins, but the retrieved content can include irrelevant material that slows processing and reduces accuracy.

– **Tree-Based Search Algorithms**: Techniques such as Monte Carlo Tree Search (MCTS) support structured exploration of reasoning paths, but they do not incorporate new contextual knowledge as the search unfolds.
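For background, standard MCTS usually decides which branch to explore next with the UCT rule below, which trades off a child's average value against how rarely it has been visited. This is the textbook form, included only as an illustration; it is an assumption that CoAT's selection step uses exactly this rule.

$$
a^{*} = \arg\max_{a} \left[ \frac{W(s,a)}{N(s,a)} + c \sqrt{\frac{\ln N(s)}{N(s,a)}} \right]
$$

Here $W(s,a)$ is the total value backed up through child $a$ of node $s$, $N(s,a)$ is that child's visit count, $N(s)$ is the visit count of $s$, and $c$ is an exploration constant.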

Introducing CoAT: A Solution to Limitations

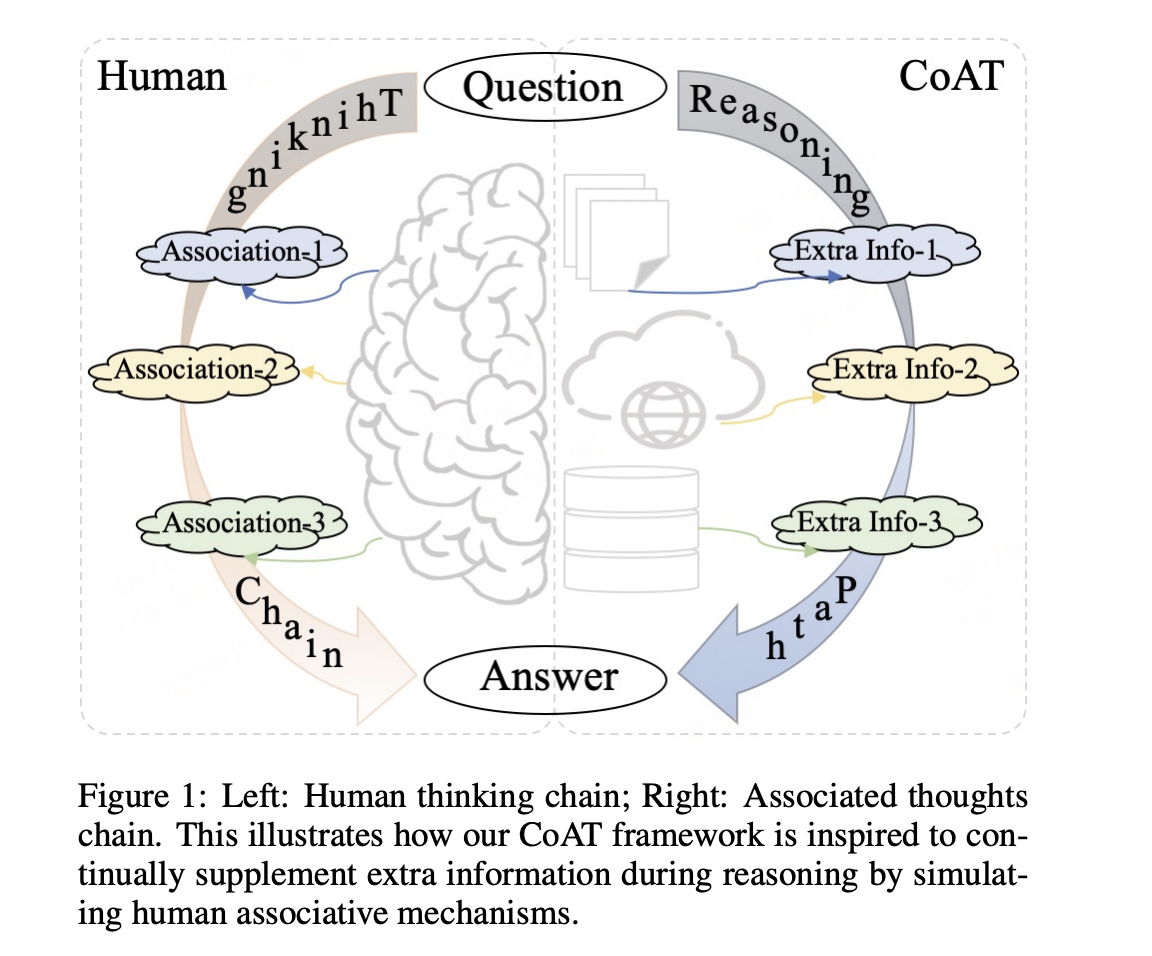

A team at Digital Security Group, Qihoo 360 has proposed the Chain-of-Associated-Thoughts (CoAT) framework to tackle these issues through two significant innovations:

1. **Dynamic Knowledge Integration**: CoAT retrieves knowledge only when a reasoning step needs it, much as a mathematician recalls relevant theorems while working through a proof.

2. **Optimized MCTS Algorithm**: CoAT extends MCTS with a four-stage cycle (selection, knowledge integration, evaluation, and feedback), so that each reasoning step can trigger relevant knowledge updates.
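The four-stage cycle can be illustrated with a minimal Python sketch. It is not the authors' implementation: `CANDIDATE_STEPS` is a hypothetical stand-in for LLM-generated reasoning steps, `KNOWLEDGE_BASE` and `associate` are hypothetical stand-ins for an external knowledge source, and the scoring rule in `evaluate` is a placeholder. Selection uses the standard UCT rule, and each node carries the knowledge gathered along its path, retrieved only when a step mentions it.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical toy knowledge source; a real system might query a search engine
# or vector store here. Keys act as retrieval triggers.
KNOWLEDGE_BASE = {
    "prime": "A prime number has exactly two positive divisors.",
    "even": "An even number is divisible by 2.",
}

# Hypothetical stand-in for an LLM proposing the next reasoning steps.
CANDIDATE_STEPS = ["check if even", "check if prime", "guess"]


@dataclass
class Node:
    step: str
    memory: list[str]  # associative memory: knowledge keys gathered on this path
    depth: int = 0
    parent: Optional["Node"] = None
    children: list["Node"] = field(default_factory=list)
    visits: int = 0
    total_value: float = 0.0


def uct(node: Node, c: float = 1.4) -> float:
    # Standard UCT: average value plus an exploration bonus for rarely visited nodes.
    if node.visits == 0:
        return math.inf
    exploit = node.total_value / node.visits
    explore = c * math.sqrt(math.log(node.parent.visits) / node.visits)
    return exploit + explore


def associate(step: str) -> list[str]:
    # Retrieve only what this step mentions (on demand), instead of preloading everything.
    return [key for key in KNOWLEDGE_BASE if key in step]


def evaluate(query: str, node: Node) -> float:
    # Hypothetical scorer based on what the node's memory holds.
    if any(key in query.lower() for key in node.memory):
        return 1.0  # memory holds knowledge relevant to the query
    return 0.5 if node.memory else 0.0  # off-topic knowledge, or none at all


def backpropagate(node: Optional[Node], value: float) -> None:
    while node is not None:
        node.visits += 1
        node.total_value += value
        node = node.parent


def search(query: str, iterations: int = 20, max_depth: int = 2) -> Node:
    root = Node(step=query, memory=[])
    for _ in range(iterations):
        # 1. Selection: follow the highest-UCT child down to a leaf.
        node = root
        while node.children:
            node = max(node.children, key=uct)
        if node.depth < max_depth:
            # 2. Knowledge integration: expand, attaching on-demand knowledge to each child.
            for step in CANDIDATE_STEPS:
                new_keys = [k for k in associate(step) if k not in node.memory]
                child = Node(step, node.memory + new_keys, node.depth + 1, node)
                node.children.append(child)
                # 3. Evaluation and 4. feedback (backpropagation) for each new child.
                backpropagate(child, evaluate(query, child))
        else:
            # Leaf at the depth limit: re-evaluate it and feed the value back up.
            backpropagate(node, evaluate(query, node))
    # Return the root child with the best average value.
    return max(root.children, key=lambda n: n.total_value / n.visits)


best = search("Is 7 prime?")
print(best.step)  # check if prime
```

With the query `"Is 7 prime?"`, `search` returns the `check if prime` branch: once that step pulls in the relevant fact, every node beneath it inherits it, so the branch keeps the highest average value. Returning the best-average child rather than the most-visited one is just one of several common MCTS choices.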

Dual-Stream Reasoning Architecture

CoAT uses a dual-stream reasoning approach. While answering a query, it explores reasoning paths through the MCTS tree and maintains an associative memory. Each node produces both generated content and associated knowledge, and the node is scored on the quality of the content and the relevance of the knowledge.
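The two streams and the node score can be sketched as follows. The word-overlap measure, the weighted sum, and the weight `beta` are hypothetical choices made for illustration; a real system would more likely ask a model to judge quality and relevance.

```python
from dataclasses import dataclass


@dataclass
class DualStreamNode:
    content: str     # stream 1: the reasoning step the model generated
    associated: str  # stream 2: knowledge recalled alongside that step


def overlap(a: str, b: str) -> float:
    """Jaccard similarity of lowercase word sets (a crude, hypothetical proxy)."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 0.0


def score(query: str, node: DualStreamNode, beta: float = 0.5) -> float:
    quality = overlap(query, node.content)               # does the step address the query?
    relevance = overlap(node.content, node.associated)   # does the recalled knowledge fit the step?
    return quality + beta * relevance


# Rank two candidate steps for the same query.
query = "is 7 prime"
candidates = [
    DualStreamNode("7 is odd", "odd numbers are not divisible by 2"),
    DualStreamNode("7 is prime", "a prime has two divisors"),
]
best = max(candidates, key=lambda n: score(query, n))
print(best.content)  # 7 is prime
```

Keeping the two streams as separate fields lets the search weigh them independently, for example by raising `beta` when grounding in recalled knowledge matters more than matching the query wording.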

Proven Performance

In the authors' experiments, CoAT produced more detailed answers to complex queries than existing methods and outperformed baseline approaches on knowledge-intensive question answering and code generation benchmarks.

A New Paradigm for AI Reasoning

CoAT offers a new approach to LLM reasoning that combines dynamic knowledge association with systematic search. Because knowledge can be updated during reasoning, the model can adapt as new information arises. The framework can also be integrated with tools such as LLM agents and real-time search.

Your Path to AI Integration

If you want your company to benefit from AI, including reasoning frameworks such as CoAT, consider the following steps:

– **Identify Automation Opportunities**: Find points in customer interactions that could benefit from AI.

– **Set Measurable KPIs**: Ensure that your AI initiatives drive measurable business outcomes.

– **Choose the Right AI Solution**: Select tools that fit your needs and allow customization.

– **Gradual Implementation**: Start with pilot projects, gather insights, and scale judiciously.
