Understanding Contrastive Language-Image Pretraining

What is Contrastive Language-Image Pretraining?

Contrastive language-image pretraining trains an image encoder and a text encoder together so that matching image-text pairs end up close to each other in a shared embedding space, while mismatched pairs are pushed apart. Models trained this way have shown strong zero-shot transfer, meaning they perform well on tasks for which they have seen no labeled examples.
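
To make the idea concrete, here is a minimal NumPy sketch of a symmetric contrastive loss of the kind used in CLIP-style training. It is an illustration under assumptions, not the implementation of any specific model: the function names, the fixed temperature of 0.07, and the random vectors standing in for encoder outputs are all hypothetical.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    # Scale each embedding to unit length so dot products are cosine similarities.
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def log_softmax(logits, axis):
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.07):
    # Row i of image_emb and row i of text_emb are assumed to be a matching pair.
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature           # (batch, batch) similarity matrix
    diag = np.arange(logits.shape[0])
    # Image-to-text: each image should pick its own caption among all captions.
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()
    # Text-to-image: each caption should pick its own image among all images.
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()
    return 0.5 * (loss_i2t + loss_t2i)

# Synthetic stand-ins for encoder outputs (hypothetical data, not real embeddings).
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(8, 32))
aligned_images = text_emb + 0.01 * rng.normal(size=(8, 32))
shuffled_images = aligned_images[::-1]           # every pair is now mismatched

aligned_loss = contrastive_loss(aligned_images, text_emb)
shuffled_loss = contrastive_loss(shuffled_images, text_emb)
print("aligned pairs loss:   ", aligned_loss)
print("mismatched pairs loss:", shuffled_loss)
```

The loss is low when each image is most similar to its own caption and high when the pairing is scrambled, which is the signal that pulls related content together and pushes unrelated content apart.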

Challenges in Pretraining

However, models from large-scale pretraining can struggle when the data they meet at test time differs significantly from the data they were trained on. To close this gap, researchers have found that using additional data at test time is crucial for adapting to such shifts and for capturing context. Strategies explored to improve performance in this setting include fine-tuning and adapter training.

Advancements in Pretraining Techniques

New Approaches in Pretraining

Contrastive image-text pretraining has become the standard method for building strong visual representation models, popularized by frameworks such as CLIP and ALIGN. More recent variants such as SigLIP replace the batch-wise softmax loss with a pairwise sigmoid loss, which makes training more efficient while maintaining or improving performance. Researchers are also exploring new strategies to improve how well models generalize across different data settings.
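
As a rough illustration of the SigLIP idea, the sketch below scores every image-text pair independently with a sigmoid instead of normalizing over the whole batch with a softmax. It assumes a simplified setting: the function names are hypothetical, the scale and bias are fixed illustrative constants (in SigLIP they are learned parameters), and random vectors stand in for encoder outputs.

```python
import numpy as np

def log_sigmoid(x):
    # Stable log(sigmoid(x)) = -log(1 + exp(-x)).
    return -np.logaddexp(0.0, -x)

def sigmoid_pairwise_loss(image_emb, text_emb, scale=10.0, bias=-10.0):
    # Row i of image_emb and row i of text_emb are assumed to be a matching pair.
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = scale * (img @ txt.T) + bias
    # +1 on the diagonal (matching pairs), -1 everywhere else (non-matching pairs).
    labels = 2.0 * np.eye(logits.shape[0]) - 1.0
    # Each pair is an independent binary classification problem.
    return -log_sigmoid(labels * logits).sum() / logits.shape[0]

# Synthetic stand-ins for encoder outputs (hypothetical data).
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(8, 32))
aligned_images = text_emb + 0.01 * rng.normal(size=(8, 32))
shuffled_images = aligned_images[::-1]           # every pair is now mismatched

aligned_sigmoid_loss = sigmoid_pairwise_loss(aligned_images, text_emb)
shuffled_sigmoid_loss = sigmoid_pairwise_loss(shuffled_images, text_emb)
print("aligned pairs loss:   ", aligned_sigmoid_loss)
print("mismatched pairs loss:", shuffled_sigmoid_loss)
```

Because no term depends on a normalization over the full batch, a loss of this form is easier to compute in chunks, which is one reason it is associated with more efficient training.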

LIxP: A Breakthrough in Adaptation

Researchers from the Tübingen AI Center, the Munich Center for ML, and Google DeepMind have developed a new approach called LIxP (Language-Image Contextual Pretraining). The method extends standard contrastive pretraining with a context-aware component, which improves how well models adapt to new data without losing their existing capabilities. The authors report significant gains in performance and efficiency across a variety of tasks.

Key Findings from LIxP Research

Performance Improvements

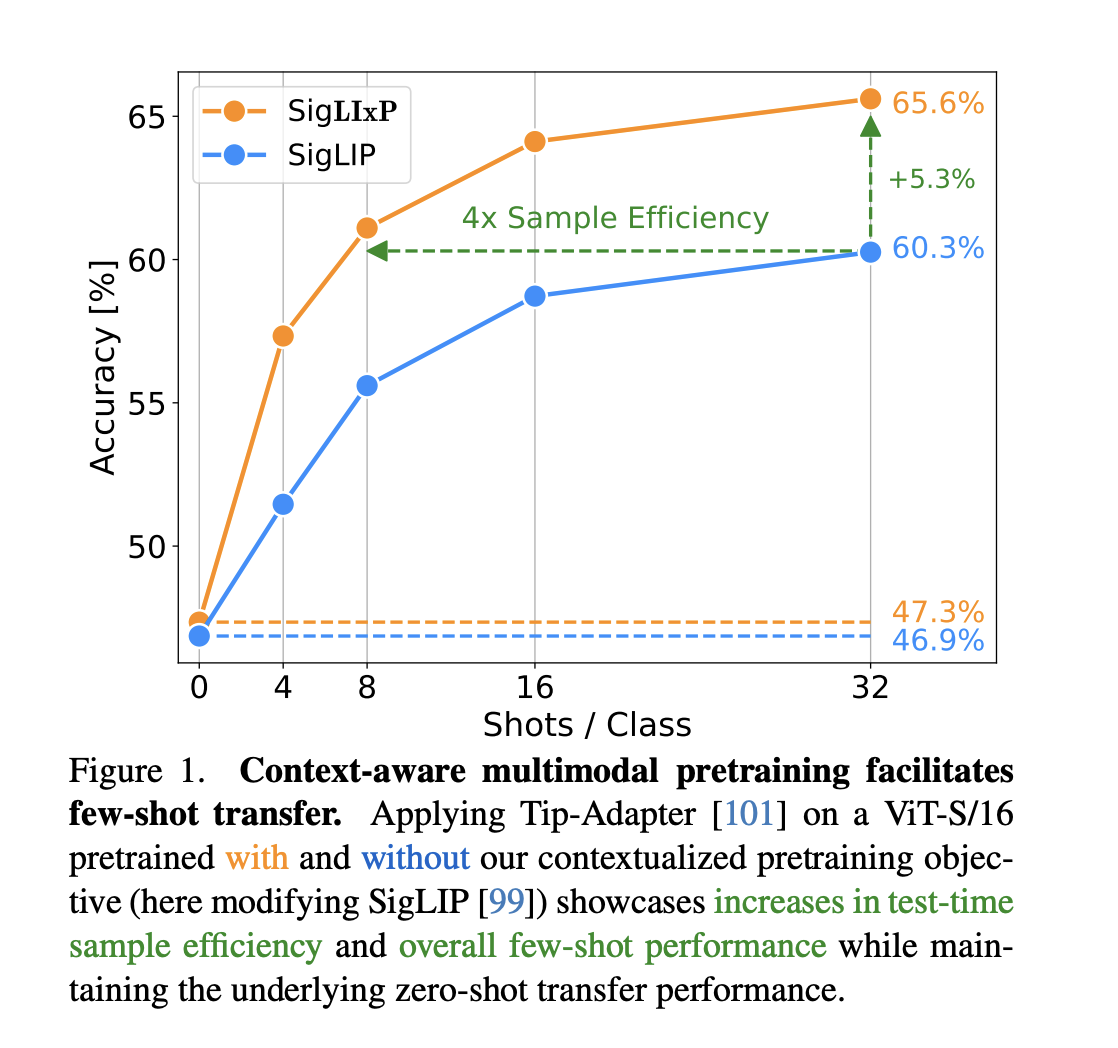

LIxP achieved up to four times better sample efficiency and improved average performance by more than 5% across 21 classification tasks, while maintaining the original zero-shot transfer capabilities. The study covered a range of model sizes and datasets and showed consistent improvements.

Efficient Adaptation

The method enables effective few-shot adaptation at test time, so performance remains high even when only limited data is available. The researchers found that even minimal additional training could yield performance comparable to that of models trained on much larger datasets.
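
One common way to do few-shot adaptation at test time without any further training is a prototype (nearest class mean) classifier on frozen embeddings. The sketch below illustrates that general technique only; it is not necessarily the procedure evaluated in the LIxP paper, and the function names and synthetic embeddings are hypothetical.

```python
import numpy as np

def normalize(x):
    # Unit-normalize embeddings so dot products are cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def build_prototypes(support_emb, support_labels, num_classes):
    # Average the few labeled ("support") embeddings of each class.
    support_emb = normalize(support_emb)
    prototypes = np.stack(
        [support_emb[support_labels == c].mean(axis=0) for c in range(num_classes)]
    )
    return normalize(prototypes)

def predict(query_emb, prototypes):
    # Assign each query to the class whose prototype is most similar.
    return (normalize(query_emb) @ prototypes.T).argmax(axis=1)

# Synthetic stand-in for frozen image embeddings: 3 classes, 4 shots per class.
rng = np.random.default_rng(0)
num_classes, dim, shots = 3, 16, 4
class_centers = rng.normal(size=(num_classes, dim))
support_labels = np.repeat(np.arange(num_classes), shots)
support_emb = class_centers[support_labels] + 0.1 * rng.normal(size=(num_classes * shots, dim))

query_labels = np.array([0, 1, 2, 2, 1, 0])
query_emb = class_centers[query_labels] + 0.1 * rng.normal(size=(len(query_labels), dim))

prototypes = build_prototypes(support_emb, support_labels, num_classes)
predictions = predict(query_emb, prototypes)
print("predicted:", predictions)
print("expected: ", query_labels)
```

The pretrained encoder stays untouched here; only a handful of labeled examples per class are needed to build the prototypes, which is why the quality of the underlying representation matters so much in the few-shot setting.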

Conclusion and Call to Action

For businesses and researchers looking to leverage AI, consider how these advancements can enhance your operations. Explore opportunities where AI can automate processes and improve outcomes. Start with a pilot project, measure results, and expand your use of AI accordingly.

Also, don’t forget to check out the research paper detailing these findings and innovations!