Understanding the Potential of Large Language Models (LLMs)

Large Language Models (LLMs) can be used in various fields like education, healthcare, and mental health support. Their value largely depends on how accurately they can follow user instructions. In critical situations, such as medical advice, even minor mistakes can have serious consequences. Therefore, ensuring LLMs can understand and execute instructions correctly is essential for their safe use.

Challenges in Instruction Following

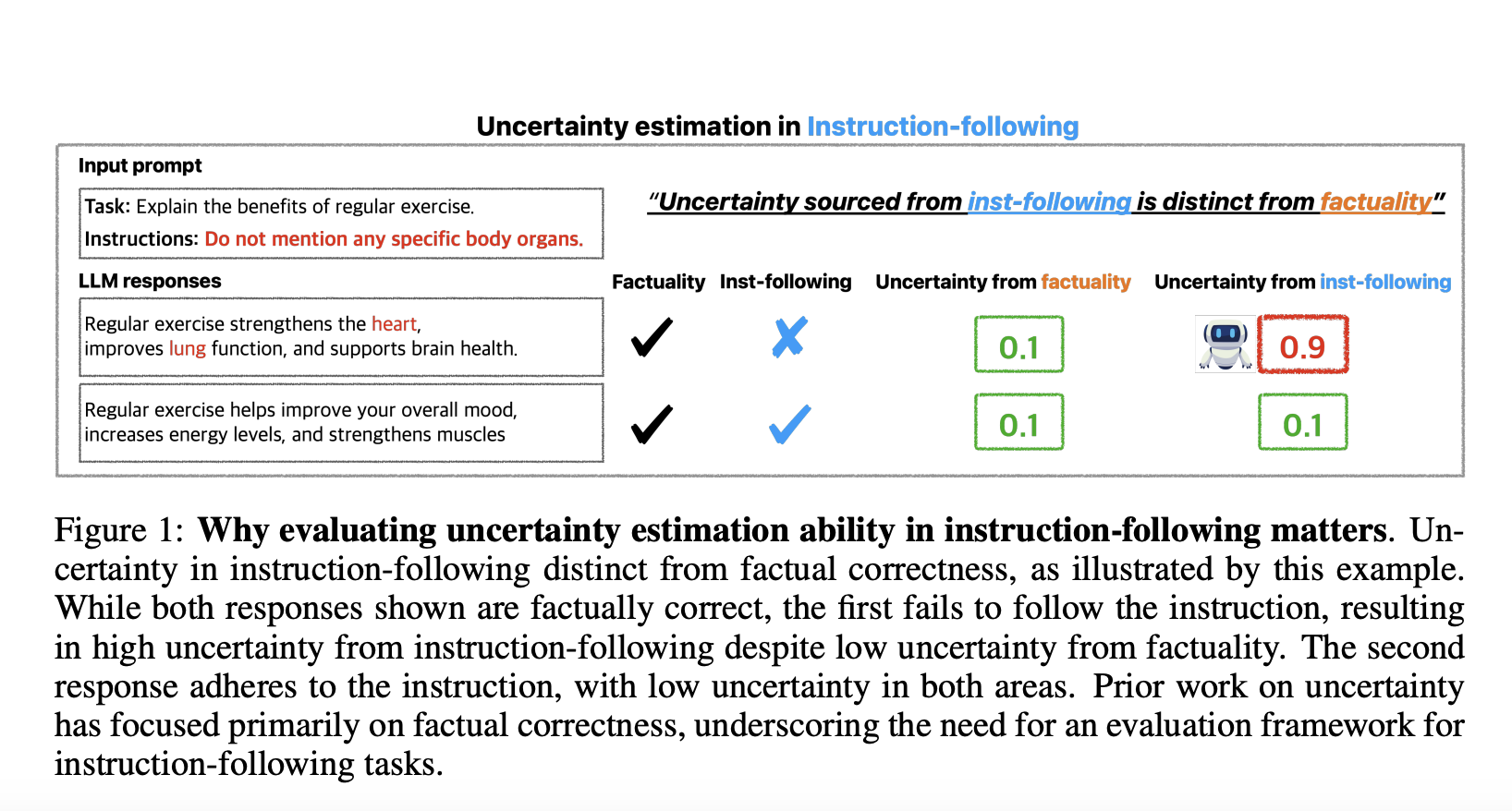

Recent research has shown that LLMs often struggle to follow instructions reliably, which raises concerns about their effectiveness in real-world applications. Even advanced models sometimes misinterpret or stray from instructions, including in sensitive contexts. To mitigate these risks, it is crucial to develop methods that help LLMs recognize when they are uncertain whether they have followed an instruction. Responses flagged as uncertain can then be routed to human review or trigger safeguards that prevent unintended outcomes.
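The deferral idea above can be sketched as a simple confidence gate. This is a minimal illustration, not the study's method: the `route_response` helper, the `Decision` type, and the 0.8 threshold are all hypothetical, and it assumes some upstream estimator already produces a confidence in [0, 1] that the response follows the instruction.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    response: str
    confidence: float   # estimated probability the response follows the instruction
    needs_review: bool  # True when the response should go to a human


def route_response(response: str, confidence: float, threshold: float = 0.8) -> Decision:
    """Hypothetical gate: flag responses whose confidence falls below `threshold`."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return Decision(response, confidence, needs_review=confidence < threshold)


# Usage with made-up confidence values
print(route_response("Summary in exactly three bullets.", confidence=0.55).needs_review)  # True
print(route_response("Answer written in French.", confidence=0.93).needs_review)  # False
```

In practice the threshold trades review workload against risk, so a high-stakes deployment would likely set it conservatively.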

Research Insights from Cambridge, Singapore, and Apple

A recent study by researchers from the University of Cambridge, the National University of Singapore, and Apple evaluated how well LLMs can assess their uncertainty when following instructions. Unlike fact-based tasks, where confidence concerns whether an answer is correct, instruction-following tasks require a model to judge whether its response meets specific requirements, which makes its confidence much harder to gauge.

New Evaluation Framework

The research team created a systematic evaluation framework to address these challenges. This framework includes two versions of a benchmark dataset: the Realistic version, which simulates real-world unpredictability, and the Controlled version, which removes external factors for clearer evaluation.

Key Findings

The study revealed significant limitations in current uncertainty estimation techniques, particularly in detecting subtle instruction-following errors. While some methods show promise, they still fall short in complex scenarios where responses may not align with the instructions. This points to a need for better uncertainty estimation in LLMs, especially for intricate tasks.
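This summary does not say which metrics the study used, but a common way to ask whether an uncertainty estimate is useful is AUROC: the probability that a randomly chosen failed response receives a higher uncertainty score than a randomly chosen successful one. The sketch below computes it directly from pairwise comparisons. The `auroc` helper and the example scores are hypothetical illustrations, not data from the paper.

```python
def auroc(uncertainty: list[float], failed: list[bool]) -> float:
    """AUROC of an uncertainty score for detecting instruction-following failures.

    uncertainty: higher values mean the model is less confident.
    failed: True if the corresponding response violated the instruction.
    Ties between a failure and a success count as half a win.
    """
    pos = [u for u, f in zip(uncertainty, failed) if f]      # failed responses
    neg = [u for u, f in zip(uncertainty, failed) if not f]  # successful responses
    if not pos or not neg:
        raise ValueError("need at least one failure and one success")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Usage with made-up scores: 1.0 is perfect separation, 0.5 is chance level
scores = [0.9, 0.7, 0.4, 0.2, 0.6]
labels = [True, False, True, False, False]
print(round(auroc(scores, labels), 3))  # 0.667
```

A score close to 0.5 means the estimator barely distinguishes compliant from non-compliant responses, which is the kind of shortfall described above for subtle errors.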

Contributions of the Study

- This research fills a gap by providing a comprehensive evaluation of uncertainty estimation techniques in instruction-following tasks.

- A new benchmark for instruction-following tasks has been established, allowing for direct comparisons of uncertainty estimation methods.

- Some techniques, like self-evaluation, show potential but struggle with complex instructions, highlighting the need for further research.
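This summary does not describe how the study implemented self-evaluation. One common variant asks the model to judge its own response and reads the probability it assigns to "Yes". The sketch below assumes the serving stack exposes log-probabilities for the "Yes" and "No" answer tokens; the prompt wording and both helper names are hypothetical.

```python
import math


def build_self_eval_prompt(instruction: str, response: str) -> str:
    """Hypothetical prompt asking the model to grade its own response."""
    return (
        f"Instruction: {instruction}\n"
        f"Response: {response}\n"
        "Does the response follow every requirement in the instruction? "
        "Answer Yes or No.\nAnswer:"
    )


def self_eval_confidence(logprob_yes: float, logprob_no: float) -> float:
    """Normalize the 'Yes'/'No' log-probabilities into P(Yes) via a stable two-way softmax."""
    m = max(logprob_yes, logprob_no)
    p_yes = math.exp(logprob_yes - m)
    p_no = math.exp(logprob_no - m)
    return p_yes / (p_yes + p_no)


# Usage with made-up log-probabilities (P(Yes)=0.6, P(No)=0.2 before normalization)
print(build_self_eval_prompt("Reply in under 20 words.", "Sure, here you go."))
print(round(self_eval_confidence(math.log(0.6), math.log(0.2)), 2))  # 0.75
```

Renormalizing over just the two answers ignores probability mass on other tokens, which is a simplification; it also explains why such scores can be overconfident when the model misjudges a complex instruction.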

Conclusion

The findings emphasize the importance of developing new uncertainty estimation methods tailored to instruction-following tasks. Such advances could make LLMs more reliable and more trustworthy as AI agents in critical areas where accuracy and safety are paramount.

Check out the Paper. All credit for this research goes to the researchers involved.

