Understanding Vision Transformers and Their Challenges

Vision Transformers (ViTs) are central to modern computer vision thanks to their strong performance and flexibility. However, their size and computational cost make them hard to run on devices with limited resources. For example, the vision transformer in the FLUX text-to-image model has 11.9 billion parameters, which demand substantial storage and memory and make the model impractical for many real-world deployments. Overcoming this requires techniques that cut computational demands without sacrificing output quality.

Introduction of the 1.58-bit FLUX Model

Researchers from ByteDance have introduced 1.58-bit FLUX, a quantized version of the FLUX vision transformer. It quantizes 99.5% of the model's 11.9 billion parameters to 1.58 bits per weight, sharply reducing both storage and computational requirements. Rather than training on image data, the method relies on self-supervision from the original model, and a custom kernel speeds up operations on the 1.58-bit weights. Together, these choices make the model much easier to deploy in resource-constrained environments.
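The "1.58" in the name is the information content of a weight restricted to three values, and the 99.5% figure applies to the 11.9 billion parameters cited above. The worked numbers below are simple arithmetic on those figures; note that practical storage formats usually spend slightly more than log2 3 bits per weight (for example 2 bits, or 8 bits for every 5 weights, since 3^5 = 243 fits in a byte).

```latex
\begin{aligned}
b &= \log_2 3 \approx 1.585 \ \text{bits per ternary weight},\\
N_{\text{quantized}} &\approx 0.995 \times 11.9 \times 10^{9} \approx 1.18 \times 10^{10}\ \text{parameters}.
\end{aligned}
```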

Key Technical Highlights

- The model's quantization technique restricts each weight to one of three values: -1, 0, or +1.

- It stores quantized weights at about 1.58 bits each instead of 16 bits.

- The quantization process does not require image data, relying instead on a calibration dataset of text prompts.

- A custom kernel was developed to enhance the efficiency of low-bit operations.

- Despite these reductions, the model can still generate high-resolution images (1024 × 1024 pixels).
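The ternary scheme in the list above can be illustrated with a short, dependency-free Python sketch. It assumes the absmean rule popularized by BitNet b1.58 (divide by the mean absolute weight, round, clip to {-1, 0, +1}), which may differ from the exact 1.58-bit FLUX recipe. The helpers `quantize_ternary`, `dequantize_ternary`, `pack_2bit`, and `unpack_2bit` are hypothetical, and the four-weights-per-byte layout is just one way a low-bit kernel might consume weights, not the authors' kernel format.

```python
from typing import List, Tuple


def quantize_ternary(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Absmean ternary quantization (BitNet b1.58-style; an assumption here).

    Divides by the mean absolute weight, rounds to the nearest integer,
    and clips to {-1, 0, +1}. Returns the codes and one per-tensor scale.
    """
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    codes = [max(-1, min(1, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_ternary(codes: List[int], scale: float) -> List[float]:
    """Approximate reconstruction: each code multiplied by the shared scale."""
    return [c * scale for c in codes]


def pack_2bit(codes: List[int]) -> bytes:
    """Pack four ternary codes per byte (hypothetical layout).

    Each code is shifted from {-1, 0, +1} to {0, 1, 2} and stored in 2 bits,
    lowest bits first. Trailing slots are padded with the code for 0.
    """
    shifted = [c + 1 for c in codes]
    shifted += [1] * (-len(shifted) % 4)
    out = bytearray()
    for i in range(0, len(shifted), 4):
        byte = 0
        for j in range(4):
            byte |= shifted[i + j] << (2 * j)
        out.append(byte)
    return bytes(out)


def unpack_2bit(packed: bytes, n: int) -> List[int]:
    """Inverse of pack_2bit: recover the first n ternary codes."""
    codes = []
    for byte in packed:
        for j in range(4):
            codes.append(((byte >> (2 * j)) & 0b11) - 1)
    return codes[:n]


if __name__ == "__main__":
    weights = [0.42, -0.07, -0.91, 0.15, 0.003]
    codes, scale = quantize_ternary(weights)
    packed = pack_2bit(codes)
    print(codes)        # [1, 0, -1, 0, 0]
    print(len(packed))  # 2 bytes hold 5 weights (plus 3 padding slots)
    assert unpack_2bit(packed, len(codes)) == codes
```

Packing to 2 bits wastes a little space relative to log2 3 bits, but it keeps unpacking to cheap shifts and masks, which is the kind of trade-off a custom low-bit kernel has to make.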

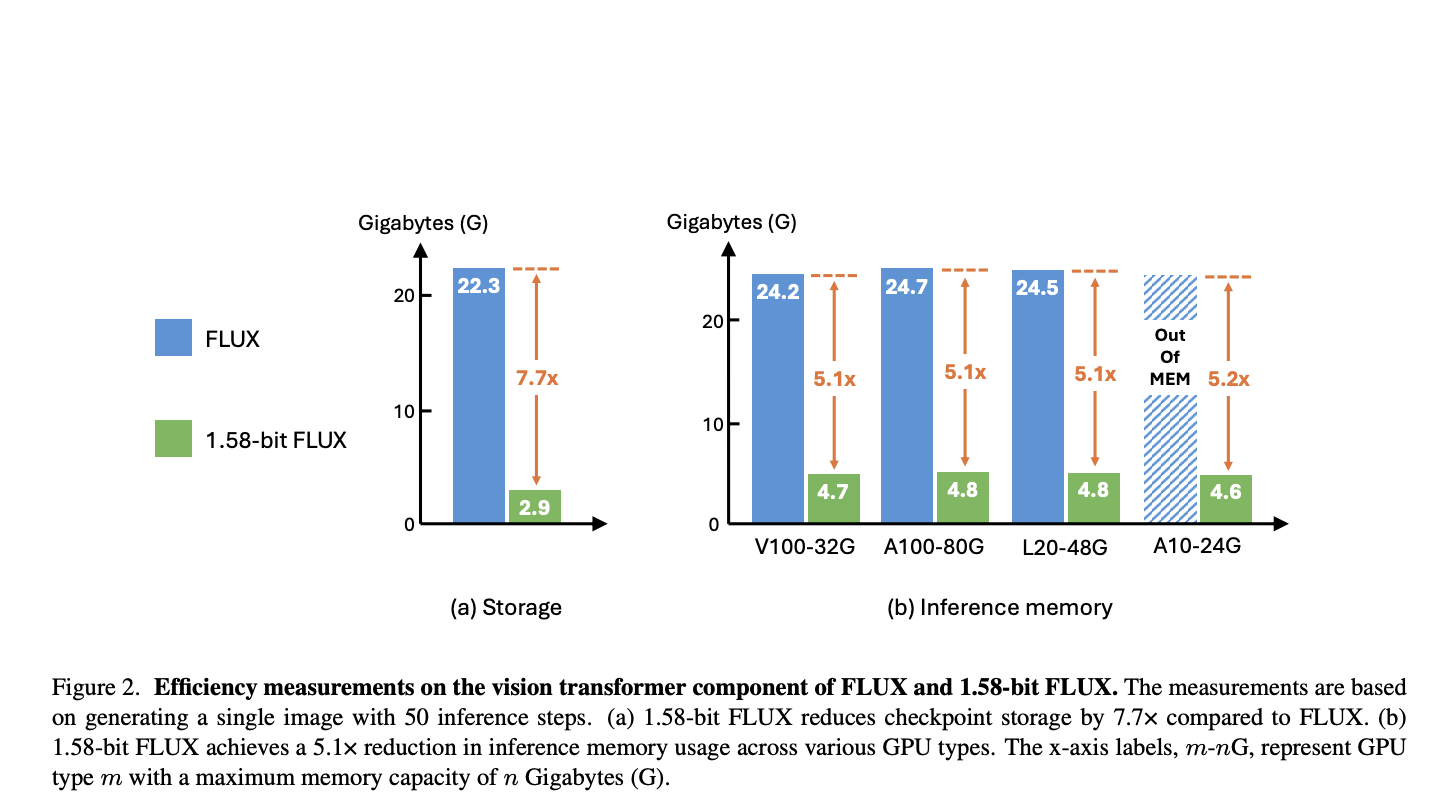

Performance and Efficiency Results

In the authors' evaluations, 1.58-bit FLUX generated images of comparable quality to its full-precision counterpart, with only minor differences on some tasks. Compared with the full-precision model, it delivers:

- 7.7× reduction in model storage

- 5.1× reduction in inference memory usage
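A back-of-the-envelope estimate helps put the storage figure in context. The sketch below assumes that the 99.5% of weights that are quantized take 2 bits each (a common packed format) and that the remaining 0.5% stay at 16 bits; neither assumption is confirmed by the article, and `weight_storage_gb` is a hypothetical helper. It also ignores activations and other runtime buffers, so it says nothing about the inference-memory figure.

```python
import math


def weight_storage_gb(n_params: float, quantized_fraction: float,
                      low_bits: float, high_bits: float = 16.0) -> float:
    """Estimated weight storage in GB (1e9 bytes).

    A fraction `quantized_fraction` of parameters is stored at `low_bits`;
    the remainder is assumed to stay at `high_bits` (16-bit by default).
    """
    bits_per_param = (quantized_fraction * low_bits
                      + (1.0 - quantized_fraction) * high_bits)
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 11.9e9  # parameter count cited in the article

# All weights at 16 bits: about 23.8 GB.
baseline = weight_storage_gb(N_PARAMS, 0.0, 16.0)
# Assumption: 2 bits per ternary weight, remaining 0.5% at 16 bits.
packed_2bit = weight_storage_gb(N_PARAMS, 0.995, 2.0)
# Hypothetical format spending exactly log2(3) bits per ternary weight.
ideal = weight_storage_gb(N_PARAMS, 0.995, math.log2(3))

print(f"16-bit baseline: {baseline:.2f} GB")
print(f"2-bit packing:   {packed_2bit:.2f} GB ({baseline / packed_2bit:.2f}x smaller)")
print(f"log2(3) bits:    {ideal:.2f} GB ({baseline / ideal:.2f}x smaller)")
```

Under these assumptions the 2-bit case comes to about 3.08 GB, roughly 7.73× smaller than the 23.8 GB baseline, which is close to the reported 7.7×. The agreement is suggestive but does not confirm how the authors actually store the weights.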

On deployment-oriented GPUs such as the NVIDIA L20 and A10, it also reduced inference latency, making it more practical for real-world use.

Conclusion and Future Prospects

The 1.58-bit FLUX model addresses key obstacles to deploying large Vision Transformers. By drastically cutting storage and memory requirements while preserving output quality, it marks a significant advance in efficient model design. There is still room for improvement, such as extending quantization from weights to activations, but this work lays a strong foundation for future research. As that research progresses, running high-quality generative models on everyday devices is becoming increasingly feasible.

