Advancements in Deep Neural Network Training

Deep neural network (DNN) training has evolved rapidly with the emergence of large language models (LLMs) and generative AI. These models tend to become more capable as they grow, a trend supported by advances in GPU hardware and frameworks such as PyTorch and TensorFlow. However, training models with billions of parameters is challenging: both the model and its matrix operations must be distributed across many GPUs and computed in parallel.
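To make "distributing matrix operations across GPUs" concrete, here is a minimal sketch of one common way to split a single matrix multiplication: column-wise tensor parallelism, simulated in plain Python with lists standing in for GPUs. This is a generic illustration, not AxoNN's algorithm, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: (m x k) @ (k x n) -> (m x n)."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def split_columns(b: Matrix, parts: int) -> List[Matrix]:
    """Split b column-wise into `parts` equal shards, one per simulated GPU."""
    n = len(b[0])
    if n % parts != 0:
        raise ValueError("column count must divide evenly across devices")
    width = n // parts
    return [[row[i * width:(i + 1) * width] for row in b] for i in range(parts)]


def column_parallel_matmul(a: Matrix, b: Matrix, num_devices: int) -> Matrix:
    """Each simulated device holds the full input `a` and one column shard of `b`.
    It computes its slice of the output independently; concatenating the slices
    along columns (an all-gather on real hardware) reproduces a @ b."""
    shards = split_columns(b, num_devices)
    partial_outputs = [matmul(a, shard) for shard in shards]  # would run in parallel
    return [sum((out[r] for out in partial_outputs), []) for r in range(len(a))]


a = [[1, 2], [3, 4]]
b = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(column_parallel_matmul(a, b, num_devices=2))
```

Each shard's work is independent, so the only communication is gathering the output slices. Real frameworks combine this kind of split with others (row-wise splits, data parallelism) to balance memory and communication.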

Challenges and Solutions in Training Efficiency

Training efficiency depends on several factors, notably the sustained computational throughput of each GPU and the efficiency of communication between GPUs. Recent efforts to train LLMs have highlighted the need to make better use of GPU clusters. For instance, Meta’s Llama 2 was trained on 2,000 NVIDIA A100 GPUs, while Megatron-LM reached 52% of peak performance when training a 1-trillion-parameter (1000B) model on 3,072 GPUs.
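A "percent of peak" figure compares sustained aggregate throughput with the cluster's theoretical maximum. The sketch below turns the Megatron-LM numbers above into an implied throughput, assuming the GPUs are NVIDIA A100s and that "peak" means the 312 TFLOP/s dense BF16/FP16 Tensor Core figure; both are assumptions, since the text does not specify the hardware or precision.

```python
# Assumption: NVIDIA A100 dense BF16/FP16 Tensor Core peak, per GPU.
A100_PEAK_TFLOPS = 312.0


def percent_of_peak(sustained_tflops: float, num_gpus: int,
                    peak_per_gpu_tflops: float = A100_PEAK_TFLOPS) -> float:
    """Sustained aggregate throughput as a fraction of the cluster's theoretical peak."""
    return sustained_tflops / (num_gpus * peak_per_gpu_tflops)


def implied_sustained_tflops(fraction_of_peak: float, num_gpus: int,
                             peak_per_gpu_tflops: float = A100_PEAK_TFLOPS) -> float:
    """Aggregate throughput implied by a reported fraction of peak."""
    return fraction_of_peak * num_gpus * peak_per_gpu_tflops


# Megatron-LM figures from the text: 52% of peak on 3,072 GPUs.
total = implied_sustained_tflops(0.52, 3072)
print(f"{total:,.0f} TFLOP/s aggregate, {total / 3072:.1f} TFLOP/s per GPU")
```

Under these assumptions, 52% of peak corresponds to roughly 498 PFLOP/s in aggregate, or about 162 TFLOP/s per GPU; the remaining 48% is lost to communication, memory-bound operations, and idle time.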

AxoNN: A New Approach to Training

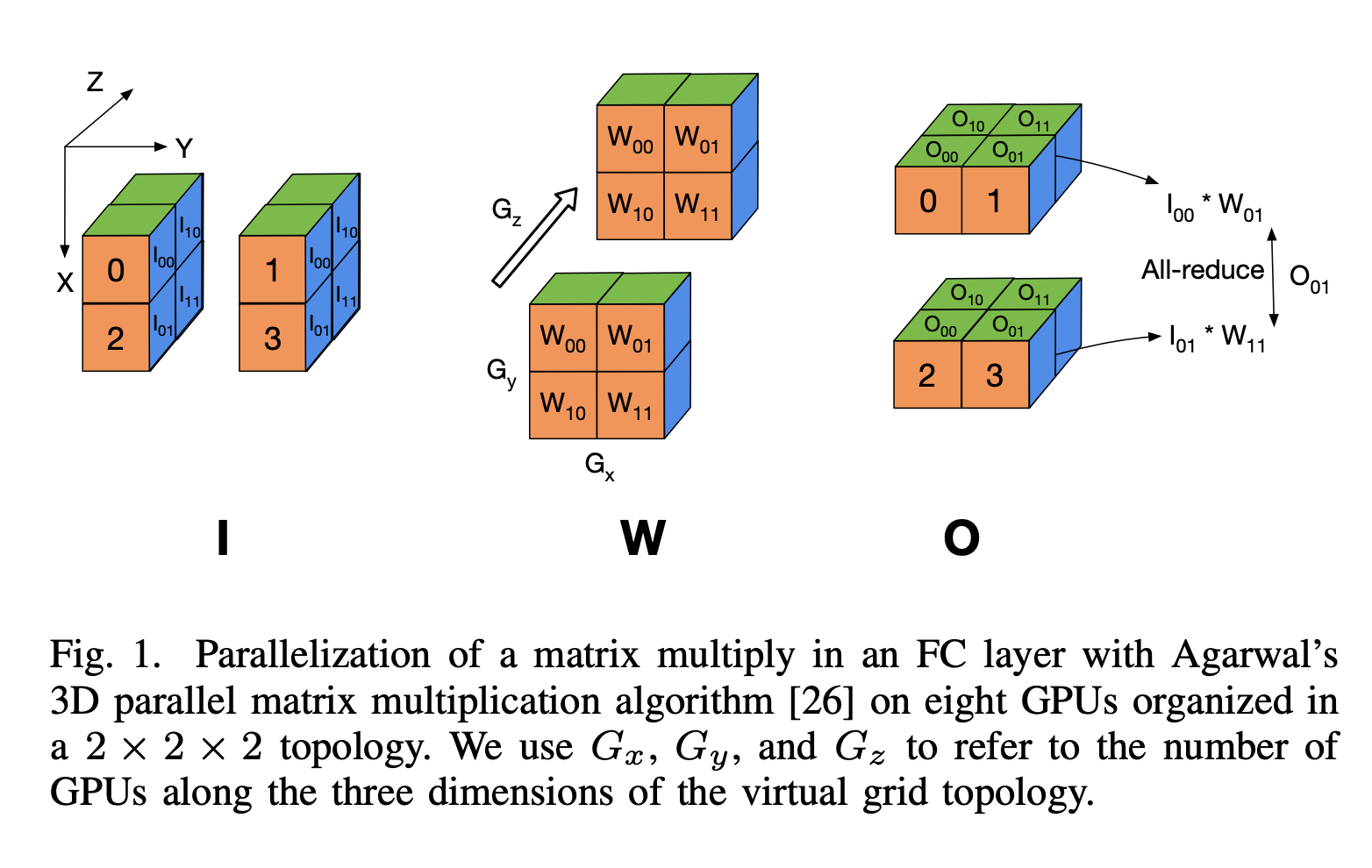

Researchers from the University of Maryland, the Max Planck Institute, and UC Berkeley have introduced AxoNN, a novel hybrid parallel algorithm implemented in a scalable, open-source framework. AxoNN optimizes matrix multiplication performance, overlaps computation with communication, and uses performance modeling to identify efficient parallel configurations. The work also addresses privacy concerns arising from the memorization of training data.
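Why overlapping computation with communication helps can be seen with a toy timeline model. This is a generic sketch, not AxoNN's scheduler: it assumes one compute stream and one communication stream, and that layer i+1's computation does not depend on layer i's communication (as with gradient reductions during the backward pass). The per-layer times are hypothetical.

```python
from typing import List


def sequential_time(compute: List[float], comm: List[float]) -> float:
    """No overlap: each layer computes, then waits for its own communication."""
    return sum(compute) + sum(comm)


def overlapped_time(compute: List[float], comm: List[float]) -> float:
    """Two streams: compute runs layers back to back, while communication for
    layer i starts once layer i's compute is done and the comm stream is free,
    so it overlaps with the compute of later layers."""
    compute_done = 0.0
    comm_free = 0.0
    for c, m in zip(compute, comm):
        compute_done += c                      # compute stream never waits on comm
        start = max(compute_done, comm_free)   # comm needs this layer's result
        comm_free = start + m
    return max(compute_done, comm_free)


compute_ms = [2.0, 2.0, 2.0]  # hypothetical per-layer compute times
comm_ms = [1.0, 1.0, 1.0]     # hypothetical per-layer communication times
print(sequential_time(compute_ms, comm_ms), overlapped_time(compute_ms, comm_ms))
```

With these toy numbers, overlap cuts the modeled time from 9 to 7 units, because only the last layer's communication remains exposed. When communication exceeds computation, the communication stream becomes the bottleneck and overlap can hide at most the compute time.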

Performance Evaluation of AxoNN

AxoNN has been evaluated on three leading supercomputers, Perlmutter, Frontier, and Alps, and scales well on all of them. It maintains near-ideal scaling up to 4,096 GPUs on every platform, with efficiency reaching 88.3% on Frontier. Sustained floating-point throughput rises substantially as GPUs are added, confirming AxoNN’s effectiveness for training large models.
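Scaling-efficiency figures such as the 88.3% above are usually computed by comparing throughput growth with GPU-count growth relative to a baseline run. The sketch below shows that common definition; the baseline AxoNN used is not stated here, and the measurements are invented for illustration, not AxoNN data.

```python
from typing import Dict


def scaling_efficiency(throughput: Dict[int, float], base_gpus: int) -> Dict[int, float]:
    """Parallel efficiency relative to a baseline run:
    (T_N / T_base) / (N / base_gpus). A value of 1.0 means throughput grew
    in exact proportion to the GPU count (ideal scaling)."""
    base = throughput[base_gpus]
    return {n: (t / base) / (n / base_gpus) for n, t in sorted(throughput.items())}


# Hypothetical measurements in PFLOP/s, for illustration only.
measured = {512: 50.0, 1024: 99.0, 2048: 196.0, 4096: 380.0}
for gpus, eff in scaling_efficiency(measured, 512).items():
    print(f"{gpus:>5} GPUs: {eff:.1%}")
```

In a weak-scaling study, where the problem size grows with the GPU count, this ratio is equivalent to comparing per-GPU throughput at each scale with the baseline.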

Conclusion and Implications for Business

AxoNN not only improves performance but also makes model parallelism accessible at scale, enabling efficient training of larger models. This democratization lets practitioners in many fields fine-tune large models on their own data. However, as more researchers work with large models, which may unintentionally memorize sensitive information from their training data, it becomes crucial to address these memorization risks.

Explore AI Solutions for Your Business

Consider how artificial intelligence can transform your operations:

- Identify processes suitable for automation.

- Pinpoint customer interactions where AI can add value.

- Establish key performance indicators (KPIs) to measure AI impact.

- Select customizable tools that align with your goals.

- Start small, evaluate effectiveness, and gradually expand AI usage.

If you need assistance in managing AI in your business, contact us at hello@itinai.ru. Connect with us on Telegram, Twitter, and LinkedIn.