Code Generation and Debugging with AI

Understanding the Challenge

Code generation with large language models (LLMs) is an active area of research, but producing correct code for complex problems in a single attempt is difficult. Even experienced developers often need several attempts to debug hard issues. LLMs such as GPT-3.5-Turbo show promise, yet their ability to find and fix errors in their own code (self-debugging) remains limited.

Current Approaches

Prior work has explored several ways to improve code generation and debugging in LLMs:

– **Single-Round Generation**: Many existing models generate code in a single pass and do not iteratively refine it.

– **Supervised Fine-Tuning**: Techniques such as ILF, CYCLE, and Self-Edit fine-tune models to improve their ability to refine code.

– **Multi-Turn Interactions**: Approaches such as OpenCodeInterpreter build high-quality multi-turn interaction datasets for more effective training.

Introducing LEDEX

Researchers from Purdue University, AWS AI Labs, and the University of Virginia have developed **LEDEX** (Learning to Self-Debug and Explain Code), a framework that improves LLMs’ self-debugging skills through:

– **Sequential Learning**: The model first explains why incorrect code is wrong and only then refines it, which helps it analyze and correct its own outputs.

– **Automated Data Collection**: LEDEX uses a pipeline to gather high-quality datasets for code explanation and refinement.

– **Combined Training Methods**: It combines supervised fine-tuning (SFT) with reinforcement learning (RL) to improve both code understanding and code correction.
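The explain-then-refine pattern that LEDEX trains for can be pictured as a small loop. This is a minimal sketch under stated assumptions, not LEDEX's implementation: the model is represented by two hypothetical callables, `explain` and `refine` (stubbed below with toy functions), and correctness is judged by unit tests given as `(args, expected)` pairs. The code runs candidate programs with `exec`, which a real system would sandbox.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical test format: (positional args, expected return value)
TestCase = Tuple[tuple, object]


@dataclass
class DebugStep:
    code: str
    explanation: str
    passed: bool


def run_tests(code: str, func_name: str, tests: List[TestCase]) -> Tuple[bool, str]:
    """Execute candidate code and return (all tests passed, feedback message)."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # untrusted model output: sandbox this in practice
        func = namespace[func_name]
        for args, expected in tests:
            got = func(*args)
            if got != expected:
                return False, f"{func_name}{args} returned {got!r}, expected {expected!r}"
    except Exception as exc:
        return False, f"raised {type(exc).__name__}: {exc}"
    return True, "all tests passed"


def self_debug(
    code: str,
    func_name: str,
    tests: List[TestCase],
    explain: Callable[[str, str], str],
    refine: Callable[[str, str, str], str],
    max_turns: int = 3,
) -> List[DebugStep]:
    """Alternate explain -> refine until the tests pass or the turns run out."""
    history: List[DebugStep] = []
    for _ in range(max_turns):
        passed, feedback = run_tests(code, func_name, tests)
        if passed:
            history.append(DebugStep(code, "", True))
            return history
        # Step 1: explain why the current code is wrong.
        explanation = explain(code, feedback)
        history.append(DebugStep(code, explanation, False))
        # Step 2: refine the code, conditioned on that explanation.
        code = refine(code, feedback, explanation)
    passed, _ = run_tests(code, func_name, tests)
    history.append(DebugStep(code, "", passed))
    return history


# Toy stand-ins for a model (hypothetical; a real system would call an LLM).
def toy_explain(code: str, feedback: str) -> str:
    return f"The function is wrong ({feedback}): it subtracts instead of adding."


def toy_refine(code: str, feedback: str, explanation: str) -> str:
    return code.replace("a - b", "a + b")


buggy = "def add(a, b):\n    return a - b\n"
steps = self_debug(buggy, "add", [((2, 3), 5), ((0, 0), 0)], toy_explain, toy_refine)
for step in steps:
    print(step.passed, step.explanation)
```

Passing the explanation into `refine` mirrors the ordering described above: the model states what is wrong before it edits the code.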

How LEDEX Works

LEDEX follows a three-step process:

1. **Data Collection**: It queries pre-trained models to collect explanations of incorrect code and candidate refinements.

2. **Verification**: Responses are verified before use, so that only high-quality data enters the training set.

3. **Training**: The verified data is used for supervised fine-tuning, significantly boosting the model’s debugging capabilities.
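The collection and verification steps can be illustrated as a filter over sampled responses. This sketch assumes that verification means executing each refined program against unit tests, and that each sample is stored as a hypothetical `Candidate` record; the prompt and completion templates are likewise made up for illustration and are not LEDEX's actual data format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical test format: (positional args, expected return value)
TestCase = Tuple[tuple, object]


@dataclass
class Candidate:
    """One sampled model response for a known-incorrect program (hypothetical record)."""
    wrong_code: str
    explanation: str
    refined_code: str


def passes_all(code: str, func_name: str, tests: List[TestCase]) -> bool:
    """Verification: True only if the code runs and every test matches."""
    namespace: Dict[str, object] = {}
    try:
        exec(code, namespace)  # sandbox untrusted code in practice
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in tests)
    except Exception:
        return False


def build_sft_data(
    candidates: List[Candidate], func_name: str, tests: List[TestCase]
) -> List[Dict[str, str]]:
    """Keep only verified candidates and format them as prompt/completion pairs."""
    data = []
    for cand in candidates:
        if not passes_all(cand.refined_code, func_name, tests):
            continue  # drop refinements that still fail the tests
        prompt = f"Incorrect code:\n{cand.wrong_code}\nExplain the bug, then fix it."
        completion = f"Explanation: {cand.explanation}\nFixed code:\n{cand.refined_code}"
        data.append({"prompt": prompt, "completion": completion})
    return data


wrong = "def square(x):\n    return x * 2\n"
candidates = [
    Candidate(wrong, "It doubles x instead of squaring it.", "def square(x):\n    return x * x\n"),
    Candidate(wrong, "It should add x to itself.", "def square(x):\n    return x + x\n"),
]
tests = [((3,), 9), ((0,), 0)]
sft = build_sft_data(candidates, "square", tests)
print(len(sft))  # 1: only the first candidate survives verification
```

Note that this execution check validates only the refined code; it says nothing about whether the accompanying explanation is accurate.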

Performance Results

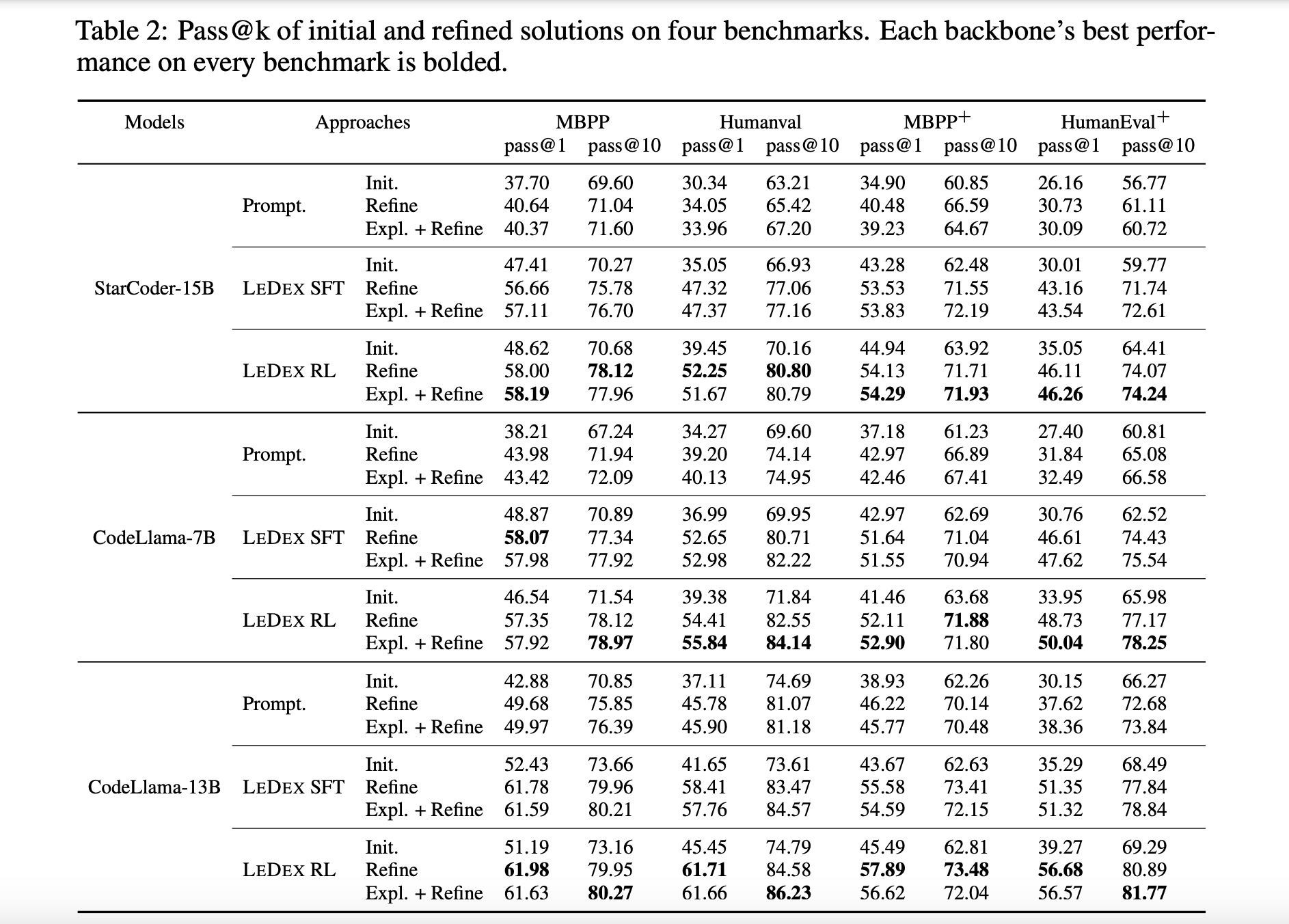

LEDEX was evaluated with several model backbones:

– **Pass Rates**: The supervised fine-tuning phase achieved up to a 15.92% increase in pass rates across benchmark datasets.

– **Reinforcement Learning Improvements**: The subsequent reinforcement learning (RL) phase raised pass rates by up to a further 3.54%.

– **Model-Agnostic Success**: The gains held across all the model backbones tested, suggesting the approach does not depend on a particular base model.
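The pass rates above are not defined in detail here. Code-generation benchmarks commonly report pass@k, estimated per problem with the unbiased formula 1 - C(n-c, k) / C(n, k) from the Codex paper (Chen et al., 2021), where n samples are drawn for a problem and c of them pass all tests. The sketch below assumes that style of metric; the per-problem results are hypothetical and are not LEDEX's numbers.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; results holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem results: (samples drawn, samples passing all tests)
results = [(10, 3), (10, 0), (10, 10), (10, 1)]
p1 = benchmark_pass_at_k(results, k=1)
p10 = benchmark_pass_at_k(results, k=10)
print(f"pass@1 = {p1:.2f}, pass@10 = {p10:.2f}")
```

For k = 1 the estimate reduces to c / n per problem, so pass@1 is simply the average fraction of correct samples.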

Conclusion

LEDEX combines an automated data-collection pipeline with supervised fine-tuning and reinforcement learning to improve LLMs’ ability to identify and fix errors in code. Its verification step filters the collected data so that training uses only high-quality examples. In human evaluations, models trained with LEDEX produced better explanations, which can help developers understand and resolve bugs.
