Automating Reinforcement Learning Workflows with Vision-Language Models: Towards Autonomous Mastery of Robotic Tasks

Practical Solutions and Value

Recent advances in vision-language models (VLMs) and large language models (LLMs) have significantly impacted reinforcement learning (RL) and robotics. These models have proven useful for learning robot policies, performing high-level reasoning, and automatically generating reward functions for policy learning. This progress has notably reduced the amount of domain-specific knowledge that RL researchers typically need to supply.

In the realm of science and engineering automation, LLM-empowered agents are being developed to assist in software engineering tasks, from interactive pair-programming to end-to-end software development. Similarly, in scientific research, LLM-based agents are being employed to generate research directions, analyze literature, automate scientific discovery, and conduct machine learning experiments. For embodied agents, particularly in robotics, LLMs are being utilized to write policy code, decompose high-level tasks into subtasks, and even propose tasks for open-ended exploration.

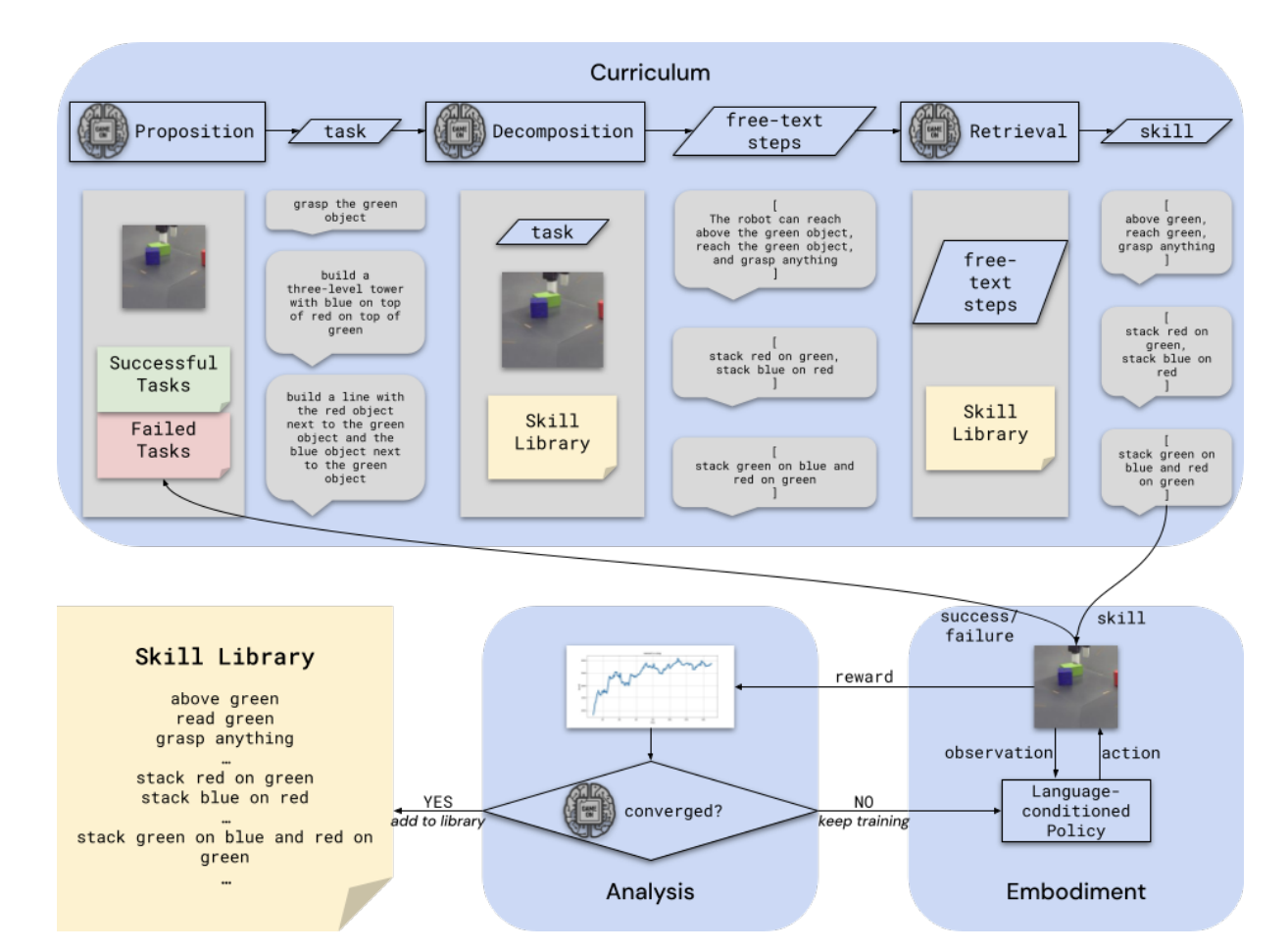

Researchers at DeepMind propose an agent architecture that automates key aspects of the RL experiment workflow, with the aim of enabling automated mastery of control domains for embodied agents. The system uses a VLM to perform tasks typically handled by human experimenters: monitoring and analyzing experiment progress, proposing new tasks based on the agent’s past successes and failures, decomposing tasks into sequences of subtasks, and retrieving appropriate skills for execution. Together, these capabilities let the system build automated curricula for learning. The researchers describe it as one of the first proposals for a system that uses a VLM throughout the entire RL experiment cycle.
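To make the decompose-and-retrieve step more concrete, here is a minimal Python sketch. It is not the researchers' implementation: the VLM call is replaced by a hard-coded stub (`fake_vlm_decompose`), the skill names are invented, and skill retrieval is approximated with simple word overlap rather than whatever matching the real system uses.

```python
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase a description and split it into a set of words."""
    return set(text.lower().split())


def retrieve_skill(step: str, library: List[str]) -> str:
    """Return the library skill with the highest Jaccard word overlap with `step`."""
    step_tokens = tokenize(step)

    def score(skill: str) -> float:
        skill_tokens = tokenize(skill)
        union = step_tokens | skill_tokens
        return len(step_tokens & skill_tokens) / len(union) if union else 0.0

    return max(library, key=score)


def fake_vlm_decompose(task: str) -> List[str]:
    """Hypothetical stand-in for a VLM call that splits a task into free-text steps."""
    return [
        "reach the red block",
        "grasp the red block",
        "place the red block above the blue block",
        "open the gripper",
    ]


# Hypothetical library of skills the policy already knows.
SKILLS = [
    "reach red",
    "grasp red",
    "lift red",
    "place red above blue",
    "open gripper",
    "close gripper",
]

# Map each free-text step onto the closest known skill.
plan = [retrieve_skill(step, SKILLS) for step in fake_vlm_decompose("stack red on blue")]
print(plan)
```

In a real system the stub would be a prompted model call and retrieval would likely be done by the model itself or by an embedding search; the point here is only the shape of the pipeline: free-text steps in, known skill names out.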

The researchers have developed a prototype of this system, using a standard Gemini model without additional fine-tuning. This model provides a curriculum of skills to a language-conditioned Actor-Critic algorithm, guiding data collection to aid in learning new skills. The data collected through this method is effective for learning and iteratively improving control policies in a robotics domain. Further examination of the system’s ability to build a growing library of skills and assess the progress of skill training has yielded promising results.

To explore the feasibility of their proposed system, the researchers implemented its components and applied them to a simulated robotic manipulation task. The system architecture consists of several interacting modules: Curriculum Module, Embodiment Module, and Analysis Module. The modules interact through a chat-based interface in a Google Meet session, allowing for easy connection and human introspection.
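The chat-based interaction between modules can be pictured with a toy message loop. The sketch below is an assumption-laden illustration, not the actual system: `ChatRoom`, the three module functions, and the task names are all hypothetical, the VLM prompts are replaced by simple rules, and "training" is faked by appending a task to the skill list.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    sender: str
    text: str


@dataclass
class ChatRoom:
    """Shared chat log that every module reads from and posts to."""
    log: List[Message] = field(default_factory=list)

    def post(self, sender: str, text: str) -> None:
        self.log.append(Message(sender, text))

    def last_from(self, sender: str) -> Optional[str]:
        for message in reversed(self.log):
            if message.sender == sender:
                return message.text
        return None


def curriculum_module(room: ChatRoom, known_skills: List[str]) -> None:
    # Stand-in for a VLM prompt: propose a harder task once grasping is known.
    task = "stack red on blue" if "grasp red" in known_skills else "grasp red"
    room.post("curriculum", task)


def embodiment_module(room: ChatRoom, known_skills: List[str]) -> None:
    # Stub rollout: the attempt succeeds only if the task is already a known skill.
    task = room.last_from("curriculum")
    outcome = "success" if task in known_skills else "failure"
    room.post("embodiment", f"{task}: {outcome}")


def analysis_module(room: ChatRoom, known_skills: List[str]) -> None:
    # Read the latest report and decide whether the task needs training.
    task, outcome = room.last_from("embodiment").rsplit(": ", 1)
    if outcome == "failure":
        room.post("analysis", f"train {task}")
        known_skills.append(task)  # toy assumption: training always succeeds
    else:
        room.post("analysis", f"{task} is learned")


room = ChatRoom()
known_skills = ["reach red"]
for _ in range(2):  # two rounds of propose -> execute -> analyze
    curriculum_module(room, known_skills)
    embodiment_module(room, known_skills)
    analysis_module(room, known_skills)

for message in room.log:
    print(f"[{message.sender}] {message.text}")
```

Because every exchange goes through one shared log, a human can read the transcript to see what each module proposed and why, which is the introspection benefit the chat interface is meant to provide.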

For policy training, the system employs a Perceiver-Actor-Critic (PAC) model, which can be trained via offline reinforcement learning and is text-conditioned. This allows for the use of non-expert exploration data and relabeling of data with multiple reward functions. The high-level system utilizes a standard Gemini 1.5 Pro model, with prompts designed using the OneTwo Python library. This implementation demonstrates a practical approach to integrating VLMs into the RL workflow, enabling automated task proposal, decomposition, and execution in a simulated robotic environment.
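Relabeling data with multiple reward functions can be sketched as follows. This is an illustrative example only: the state layout (`red_z`, `blue_z` as block heights in metres), the thresholds, and the two reward functions are invented for the sketch and are not taken from the PAC implementation.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, float]            # hypothetical state: block heights in metres
Transition = Tuple[State, State]    # (state, next_state); actions omitted for brevity
Labeled = Tuple[str, State, State, float]

# Hypothetical reward functions, keyed by the text command that conditions the policy.
REWARDS: Dict[str, Callable[[State], float]] = {
    "lift red": lambda s: 1.0 if s["red_z"] > 0.10 else 0.0,
    "stack red on blue": lambda s: (
        1.0 if abs(s["red_z"] - s["blue_z"] - 0.05) < 0.01 else 0.0
    ),
}


def relabel(
    trajectory: List[Transition],
    rewards: Dict[str, Callable[[State], float]],
) -> List[Labeled]:
    """Copy every transition once per task, scored with that task's reward."""
    labeled = []
    for task, reward_fn in rewards.items():
        for state, next_state in trajectory:
            labeled.append((task, state, next_state, reward_fn(next_state)))
    return labeled


# One short exploration episode: the red block is lifted, then set down on blue.
states = [
    {"red_z": 0.00, "blue_z": 0.0},
    {"red_z": 0.12, "blue_z": 0.0},
    {"red_z": 0.05, "blue_z": 0.0},
]
trajectory = list(zip(states[:-1], states[1:]))

dataset = relabel(trajectory, REWARDS)
for task, _, next_state, reward in dataset:
    print(task, next_state["red_z"], reward)
```

The same two transitions yield four training examples, one set per text command, which is why non-expert exploration data can still be useful to an offline, text-conditioned learner.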

The researchers evaluated their approach on a robotic block-stacking task with a 7-DoF Franka Panda robot in the MuJoCo simulator. The prototype implementation demonstrated several key capabilities: proposing new tasks for exploration, decomposing tasks into skill sequences, and analyzing learning progress. Despite some simplifications in the prototype, the system successfully collected diverse data for self-improvement of the control policy and learned new skills beyond its initial set. The curriculum also adapted its proposals to the complexity of the skills available at each stage.
