Understanding the Challenges of Artificial Neural Networks

Artificial Neural Networks (ANNs) have significantly advanced computer vision, but their lack of transparency poses challenges in areas that require accountability and regulatory compliance. This opacity limits their use in critical applications where understanding decision-making is crucial.

The Need for Explainable AI

Researchers want to understand how these models work internally, so that they can debug them more effectively, improve their performance, and draw connections with neuroscience. This need has driven the emergence of Explainable Artificial Intelligence (XAI), which aims to make ANNs more interpretable and bridge the gap between machine intelligence and human understanding.

Concept-Based Methods in XAI

Among the various XAI approaches, concept-based methods offer an effective framework for uncovering human-understandable visual concepts within the complex activation patterns of ANNs. Recent studies frame concept extraction as a dictionary learning problem: each activation is re-expressed as a sparse combination of dictionary atoms, which maps it into a sparse, typically higher-dimensional “concept space” that is easier to interpret.
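For reference, a standard textbook form of the dictionary-learning problem (not necessarily the exact objective used in the studies mentioned here) stacks n activation vectors of dimension d as the rows of A and looks for k concept atoms (the rows of D) and sparse, non-negative codes Z:

$$
\min_{\mathbf{Z} \ge 0,\ \mathbf{D}} \; \lVert \mathbf{A} - \mathbf{Z}\mathbf{D} \rVert_F^2 + \lambda \lVert \mathbf{Z} \rVert_1,
\qquad \mathbf{A} \in \mathbb{R}^{n \times d},\ \mathbf{Z} \in \mathbb{R}^{n \times k},\ \mathbf{D} \in \mathbb{R}^{k \times d}
$$

Choosing k > d gives the higher-dimensional concept space, and λ trades reconstruction error against sparsity. Several familiar methods are close relatives, typically without the λ term: K-Means restricts each row of Z to a single 1 (one concept per activation), NMF additionally requires D ≥ 0, and a sparse autoencoder computes Z with a learned encoder instead of solving for it separately for each sample.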

Innovations in Sparse Autoencoders

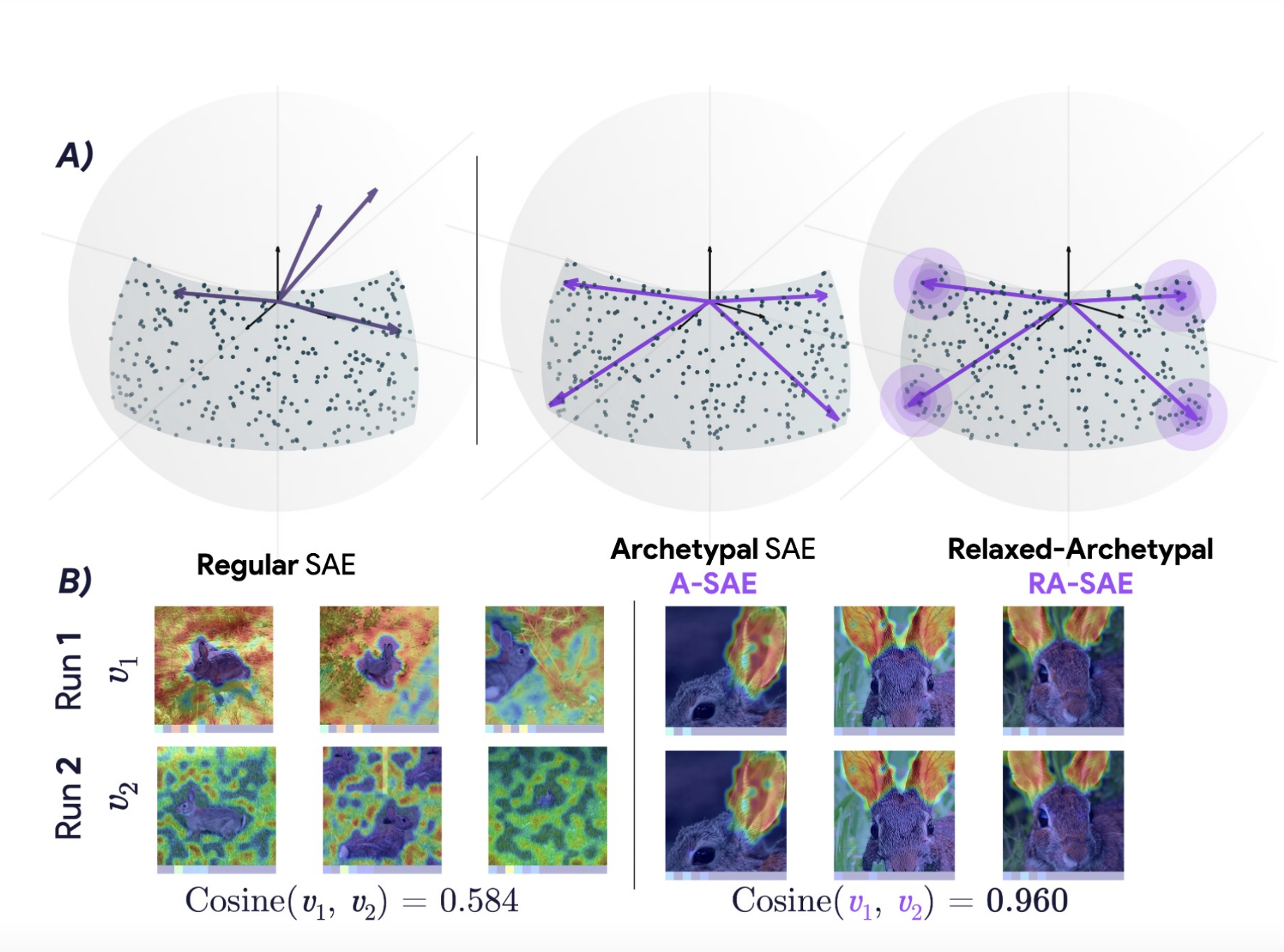

Dictionary learning techniques such as Non-negative Matrix Factorization (NMF) and K-Means aim to reconstruct the original activations accurately from a set of concepts. Sparse Autoencoders (SAEs) have emerged as powerful alternatives that balance sparsity against reconstruction quality. However, SAEs are unstable: training identical SAEs on the same data several times can yield a different concept dictionary on each run.
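To make the sparsity/reconstruction trade-off and the stability problem concrete, here is a minimal NumPy sketch of a ReLU sparse autoencoder's forward pass and loss (one common SAE variant; others, such as TopK SAEs, enforce sparsity differently), plus a simple stability score that compares two dictionaries atom by atom. The function names, the L1 weight, and all sizes are illustrative assumptions, and nothing is trained: two random dictionaries stand in for the results of two training runs.

```python
import numpy as np

def sae_forward(A, W_enc, b_enc, D):
    """Encode activations A (n x d) into non-negative codes Z (n x k), then decode."""
    Z = np.maximum(A @ W_enc + b_enc, 0.0)  # ReLU encoder: many entries end up exactly 0
    A_hat = Z @ D                           # each reconstruction is a weighted sum of atoms (rows of D)
    return Z, A_hat

def sae_loss(A, Z, A_hat, l1_weight=1e-3):
    """Reconstruction error plus an L1 penalty that pushes the codes toward sparsity."""
    recon = np.mean(np.sum((A - A_hat) ** 2, axis=1))
    sparsity = np.mean(np.sum(np.abs(Z), axis=1))
    return recon + l1_weight * sparsity

def dictionary_stability(D1, D2):
    """Hypothetical stability proxy: mean best-match cosine similarity between the
    atoms of D1 and those of D2. It equals 1.0 when every atom of D1 has an exact
    directional match in D2, regardless of the order in which atoms appear."""
    U1 = D1 / np.linalg.norm(D1, axis=1, keepdims=True)
    U2 = D2 / np.linalg.norm(D2, axis=1, keepdims=True)
    sims = U1 @ U2.T                        # (k1 x k2) matrix of cosine similarities
    return float(np.mean(sims.max(axis=1)))

rng = np.random.default_rng(0)
n, d, k = 256, 16, 64                       # k > d: an overcomplete concept space
A = rng.normal(size=(n, d))                 # stand-in for model activations
W_enc = rng.normal(scale=0.1, size=(d, k))
b_enc = np.zeros(k)
D_run1 = rng.normal(size=(k, d))            # stand-in for the dictionary from run 1
D_run2 = rng.normal(size=(k, d))            # stand-in for the dictionary from run 2

Z, A_hat = sae_forward(A, W_enc, b_enc, D_run1)
print("loss:", sae_loss(A, Z, A_hat))
print("same atoms, shuffled order:", dictionary_stability(D_run1, D_run1[::-1]))
print("two unrelated runs:", dictionary_stability(D_run1, D_run2))
```

Because the score keeps each atom's best match, it ignores atom order, which is arbitrary across runs; a stricter variant would enforce a one-to-one matching between atoms, for example with the Hungarian algorithm.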

New Approaches: A-SAE and RA-SAE

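Researchers from Harvard University, York University, CNRS, and Google DeepMind have introduced two new variants of Sparse Autoencoders: Archetypal-SAE (A-SAE) and Relaxed Archetypal-SAE (RA-SAE). These models improve stability and consistency in concept extraction by imposing geometric constraints on the dictionary atoms: A-SAE requires each atom to be a convex combination of data points, so that every atom lies within the convex hull of the data, while RA-SAE relaxes this constraint slightly and lets atoms deviate from the hull by a small amount.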
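The geometric constraint can be sketched as follows, under our own assumptions: each A-SAE-style atom is parametrized as a softmax-weighted (hence convex) combination of candidate points `C`, which keeps it inside their convex hull, and the RA-SAE-style variant adds an offset whose norm is capped at a small `delta`. The function names, the softmax parametrization, the norm clipping, and the choice of `C` are hypothetical illustrations, not the authors' implementation.

```python
import numpy as np

def softmax_rows(logits):
    """Row-wise softmax: every row becomes non-negative and sums to 1."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)

def archetypal_dictionary(logits, C):
    """A-SAE-style atoms (assumed parametrization): convex combinations of the
    candidate points C (m x d), so every atom lies inside the convex hull of C."""
    W = softmax_rows(logits)   # (k x m) row-stochastic mixing weights
    return W @ C               # (k x d) dictionary atoms

def relaxed_archetypal_dictionary(logits, C, Lambda, delta=0.1):
    """RA-SAE-style atoms (assumed parametrization): the archetypal atom plus an
    offset Lambda whose row norms are clipped to at most delta."""
    norms = np.linalg.norm(Lambda, axis=1, keepdims=True)
    scale = np.minimum(1.0, delta / np.maximum(norms, 1e-12))  # shrink rows longer than delta
    return archetypal_dictionary(logits, C) + Lambda * scale

rng = np.random.default_rng(0)
m, d, k = 100, 8, 32
C = rng.normal(size=(m, d))        # hypothetical candidate points, e.g. a subset of activations
logits = rng.normal(size=(k, m))   # would be learned in a real model
Lambda = rng.normal(size=(k, d))   # would be learned in a real model

D_a = archetypal_dictionary(logits, C)
D_ra = relaxed_archetypal_dictionary(logits, C, Lambda, delta=0.1)
print("max offset from hull-constrained atoms:", np.linalg.norm(D_ra - D_a, axis=1).max())
```

As we understand the intuition, atoms anchored to the data have far fewer degrees of freedom than unconstrained ones, so separate training runs have less room to drift apart; the small relaxation gives back some expressiveness.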

Evaluation of the New Models

The researchers evaluated their methods on five vision models, training the dictionaries on ImageNet. The results showed that RA-SAE outperformed traditional approaches both at recovering the underlying object classes and at uncovering meaningful concepts.

Conclusions and Future Directions

A-SAE and RA-SAE provide a robust framework for dictionary learning and concept extraction in large-scale vision models. They also open avenues for reliable concept discovery in other data modalities and model families, including large language models (LLMs).

Practical Business Solutions

Explore how AI can transform your business operations:

- Identify processes that can be automated to enhance efficiency.

- Pinpoint customer interactions where AI can add significant value.

- Establish key performance indicators (KPIs) to measure the impact of your AI investments.

- Select customizable tools that align with your business objectives.

- Start with a pilot project, assess its effectiveness, and gradually expand your AI initiatives.

Get in Touch

If you need assistance with AI in your business, contact us at hello@itinai.ru or connect with us on Telegram, X, and LinkedIn.