Introduction to DistillKit

DistillKit is an open-source tool from Arcee AI for creating and distributing Small Language Models (SLMs). Its aim is to make advanced AI capabilities more accessible and more efficient to run.

Distillation Methods in DistillKit

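DistillKit supports two distillation methods, logit-based and hidden states-based, for transferring knowledge from a large model to a smaller, more efficient one. Logit-based distillation trains the smaller model to match the larger model's output probabilities, while hidden states-based distillation aligns the smaller model's intermediate representations with those of the larger model. The stated goals are to broaden access to advanced AI and to promote energy efficiency.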
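To make the two methods concrete, here is a minimal sketch of the loss terms they are commonly built on. It is not DistillKit's code or API: the function names, the `temperature` and `alpha` parameters, and the use of KL divergence and mean squared error are all illustrative assumptions taken from standard knowledge-distillation practice, and the sketch operates on plain Python lists for a single token position.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_sum for x in scaled]


def logit_distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The T^2 factor is the usual convention for keeping gradient scale
    comparable across temperatures (an assumption, not a DistillKit detail).
    """
    teacher_logp = log_softmax(teacher_logits, temperature)
    student_logp = log_softmax(student_logits, temperature)
    kl = sum(math.exp(t) * (t - s) for t, s in zip(teacher_logp, student_logp))
    return kl * temperature ** 2


def hidden_state_loss(student_hidden, teacher_hidden):
    """Mean squared error between two hidden vectors of equal width.

    When the widths differ, the student vector would first need to be
    projected to the teacher's width; that step is omitted here.
    """
    if len(student_hidden) != len(teacher_hidden):
        raise ValueError("hidden vectors must have the same width")
    squared = [(s - t) ** 2 for s, t in zip(student_hidden, teacher_hidden)]
    return sum(squared) / len(squared)


def combined_loss(task_loss, distill_loss, alpha=0.5):
    """Blend the ordinary task loss with a distillation loss (hypothetical weighting)."""
    return alpha * distill_loss + (1.0 - alpha) * task_loss
```

In this sketch the logit-based term is zero when student and teacher produce the same distribution and grows as they diverge. The hidden-state term compares internal representations directly and does not look at output probabilities.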

Key Takeaways of DistillKit

DistillKit shows performance gains across a range of datasets and training conditions, delivers domain-specific improvements, supports flexible choices of model architecture, and reduces the computational resources needed for AI deployment.

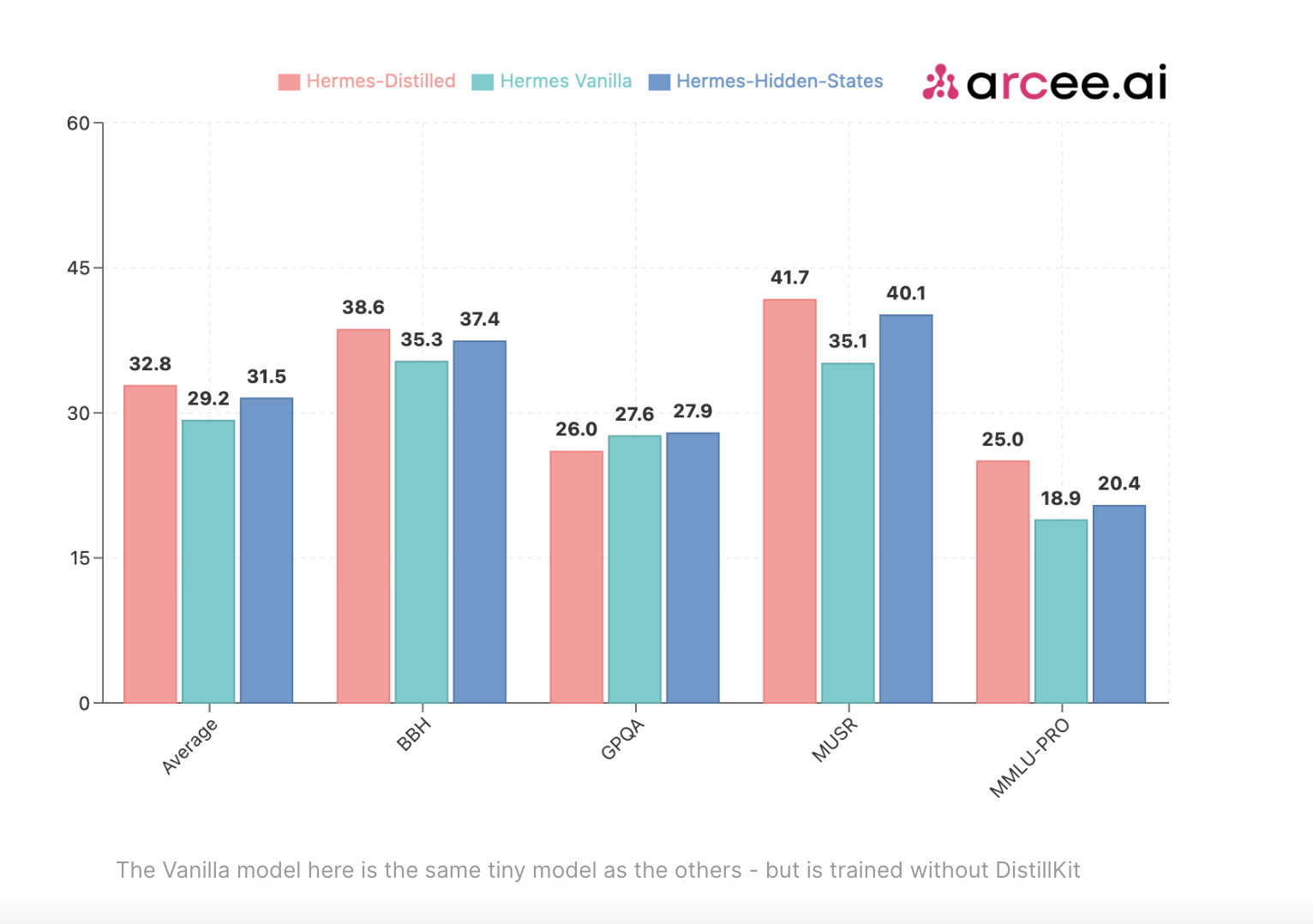

Performance Results

In the reported experiments, distilled models significantly outperform models trained with standard supervised fine-tuning. This indicates that distillation can improve both the efficiency and the accuracy of smaller models.

Impact and Future Directions

DistillKit makes it easier to build efficient models, which reduces energy consumption and operational costs. Future updates are planned to add more advanced distillation techniques and optimizations.

Conclusion

Arcee AI’s DistillKit is a significant step for model distillation: a robust, flexible, and efficient tool for creating SLMs. Arcee AI invites the community to collaborate on its continued development.