Revolutionizing Language Models with Cut Cross-Entropy (CCE)

Overview of Large Language Models (LLMs)

Advancements in large language models (LLMs) have transformed natural language processing. These models are used for tasks like text generation, translation, and summarization. However, they require substantial data and memory, creating challenges in training.

Memory Challenges in Training

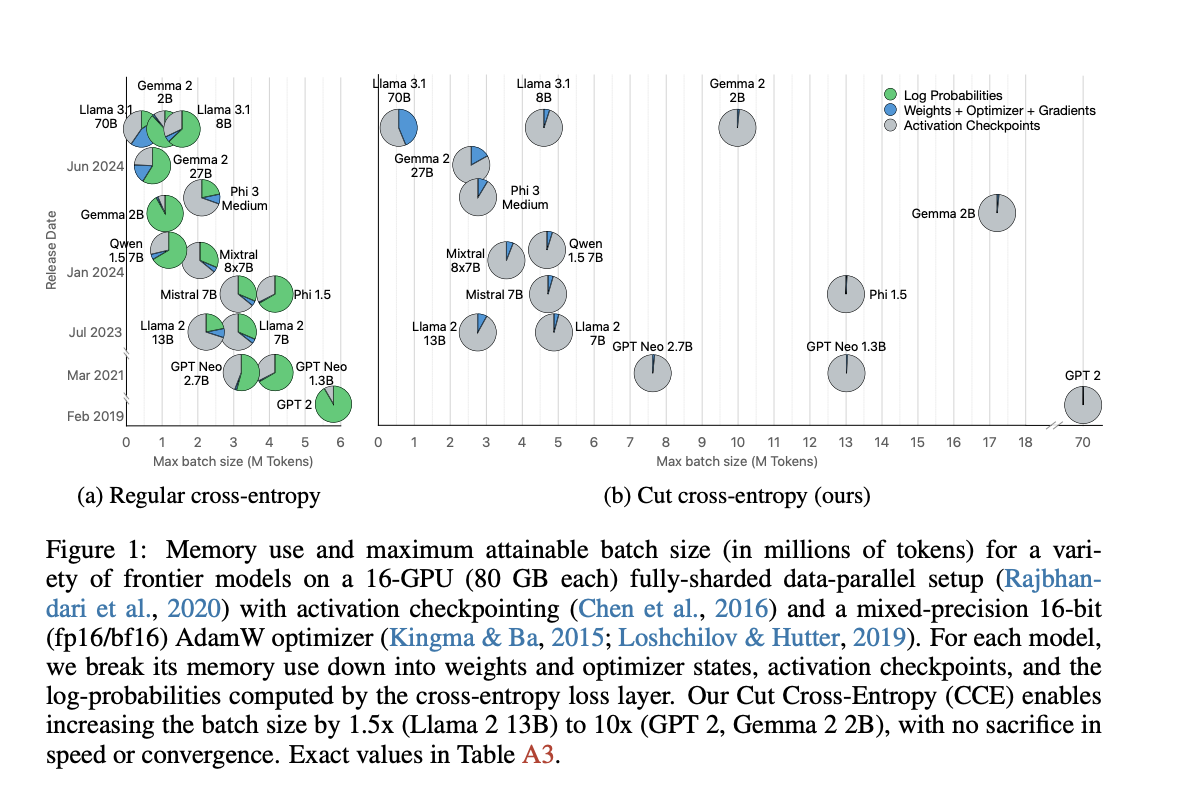

A major issue in training LLMs is the memory needed for cross-entropy loss computation. The loss layer produces a logit for every pairing of input token and vocabulary entry, so its footprint grows with vocabulary size. For Gemma 2 (2B), the loss computation can consume up to 24 GB during training, which limits batch size and scalability.
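To see why the loss layer gets so large, a back-of-the-envelope estimate helps. The sketch below sizes one dense logit matrix; the token count, vocabulary size, and float32 storage are illustrative assumptions, not figures from the research, and `logit_memory_gib` is a hypothetical helper name.

```python
def logit_memory_gib(num_tokens: int, vocab_size: int, bytes_per_value: int = 4) -> float:
    """Size in GiB of one dense (num_tokens x vocab_size) logit matrix."""
    return num_tokens * vocab_size * bytes_per_value / 2**30


# Assumed batch: 8,192 tokens, a 256,000-entry vocabulary, float32 logits.
size = logit_memory_gib(8_192, 256_000)
print(f"{size} GiB for a single copy of the logits")
```

This counts only one copy of the matrix. Training typically keeps further buffers of the same shape, such as the gradient with respect to the logits, so the real footprint is a multiple of this estimate.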

Limitations of Previous Solutions

Earlier memory optimizations such as FlashAttention target other parts of the model and leave the cross-entropy layer's footprint largely untouched. Other methods, such as chunking the loss computation, reduce memory but can slow down training.
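For reference, chunking can be sketched as follows: the loss is computed for a few tokens at a time, so only a small slice of the logit matrix exists at any moment. This is a minimal illustration in plain Python, assuming list-based embeddings and classifier weights; the function names and the default chunk size are hypothetical, and real implementations work on GPU tensors.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def chunked_cross_entropy(embeddings, classifier, targets, chunk_size=2):
    """Mean cross-entropy, holding logits for only `chunk_size` tokens at a time."""
    total = 0.0
    for start in range(0, len(embeddings), chunk_size):
        chunk = embeddings[start:start + chunk_size]
        # Only chunk_size x vocab_size logits are live inside this iteration.
        logits = [[dot(e, c) for c in classifier] for e in chunk]
        for row, target in zip(logits, targets[start:start + chunk_size]):
            m = max(row)  # subtract the max for numerical stability
            lse = m + math.log(sum(math.exp(z - m) for z in row))
            total += lse - row[target]
    return total / len(embeddings)
```

The result does not depend on the chunk size. The trade-off is that smaller chunks mean more, smaller matrix products, which is where the slowdown mentioned above can come from.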

Introducing Cut Cross-Entropy (CCE)

Researchers at Apple have developed the Cut Cross-Entropy (CCE) method. Rather than storing the logits for every token, CCE computes only the logit of the correct token and evaluates the log-sum-exp over the vocabulary on the fly, significantly lowering memory usage. For example, in Gemma 2, memory use for loss computation dropped from 24 GB to just 1 MB.
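The cross-entropy loss for one token needs just two numbers: the logit of the correct token and the log-sum-exp over all logits. The following sketch shows how both can be obtained while visiting the vocabulary in small blocks, so the full logit vector is never stored. It is a plain-Python illustration of the idea under assumed names (`streaming_token_loss`, `block_size`), not the researchers' kernel.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def streaming_token_loss(embedding, classifier, target, block_size=2):
    """Cross-entropy for one token without storing the full logit vector."""
    # The correct token's logit is the only one kept explicitly.
    target_logit = dot(embedding, classifier[target])
    running_max = -math.inf
    running_sum = 0.0  # sum of exp(logit - running_max) over blocks seen so far
    for start in range(0, len(classifier), block_size):
        block = [dot(embedding, c) for c in classifier[start:start + block_size]]
        new_max = max(running_max, max(block))
        # Rescale the old sum to the new reference maximum, then add this block.
        running_sum = running_sum * math.exp(running_max - new_max)
        running_sum += sum(math.exp(z - new_max) for z in block)
        running_max = new_max
    return running_max + math.log(running_sum) - target_logit
```

Each block of logits is discarded as soon as it has been folded into the running maximum and running sum, so the extra memory is proportional to the block size rather than to the vocabulary size.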

How CCE Works

CCE employs custom GPU kernels for efficient processing. It calculates logits on the fly instead of storing them in large memory buffers. The method also uses gradient filtering, which skips parts of the gradient computation whose contribution is negligible, to keep training fast.
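The idea behind gradient filtering can be illustrated in a few lines. The gradient of the loss with respect to the logits is the softmax probability minus 1 at the correct token, and most probabilities are close to zero when the vocabulary is large. The sketch below skips whole blocks whose probabilities all fall under a threshold; the function name, the block size, and the threshold value are assumptions chosen for illustration, not values taken from the research.

```python
import math


def filtered_logit_gradient(logits, target, block_size=4, threshold=1e-4):
    """Gradient of cross-entropy w.r.t. logits, skipping negligible blocks.

    Returns the gradient list and the number of blocks that were skipped.
    """
    m = max(logits)
    denom = sum(math.exp(z - m) for z in logits)
    grad = [0.0] * len(logits)
    skipped = 0
    for start in range(0, len(logits), block_size):
        stop = min(start + block_size, len(logits))
        probs = [math.exp(z - m) / denom for z in logits[start:stop]]
        contains_target = start <= target < stop
        if max(probs) < threshold and not contains_target:
            # Every entry is close to zero: leave this block's gradient at 0.
            skipped += 1
            continue
        grad[start:stop] = probs
    grad[target] -= 1.0  # softmax minus one-hot at the correct token
    return grad, skipped
```

In this toy version the probabilities are still computed in order to decide what to skip. In a GPU kernel, the saving would come from not running the follow-up matrix multiplications for the skipped blocks.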

Benefits of CCE

– **Significant Memory Reduction**: Memory use for cross-entropy loss can be as low as 1 MB for large models.

– **Improved Scalability**: The memory saved can be spent on larger batch sizes, improving resource utilization when training large models.

– **Efficiency Gains**: The method maintains training speed and model convergence despite reduced memory.

– **Practical Applicability**: CCE can be adapted for various scenarios, including image classification.

– **Future Potential**: It supports training larger models with better resource balancing.

Conclusion

The CCE method is a breakthrough in training large language models by minimizing memory usage without sacrificing speed or accuracy. This advancement enhances the efficiency of current models and opens doors for future scalable architectures.
