Recent Developments in AI and Mathematical Reasoning

Understanding LLMs and Their Reasoning Skills

Recent advancements in Large Language Models (LLMs) have sparked interest in their ability to reason mathematically, particularly through the GSM8K benchmark, which tests basic math skills. Despite improvements shown by LLMs, questions still linger about their true reasoning capabilities. Current evaluation methods may not fully reflect their potential. Research indicates that LLMs often rely on pattern matching instead of real logical reasoning, making them sensitive to minor changes in input data.

The Need for Better Evaluation Methods

Logical reasoning is crucial for intelligent systems, but the consistency of LLMs in this area is still uncertain. While some studies show LLMs can perform tasks using pattern matching, they often struggle with formal reasoning. This is evident when small changes in input can lead to vastly different outcomes. More complex tasks require a higher level of expressiveness, which could be enhanced by using external memory tools.

Introducing GSM-Symbolic

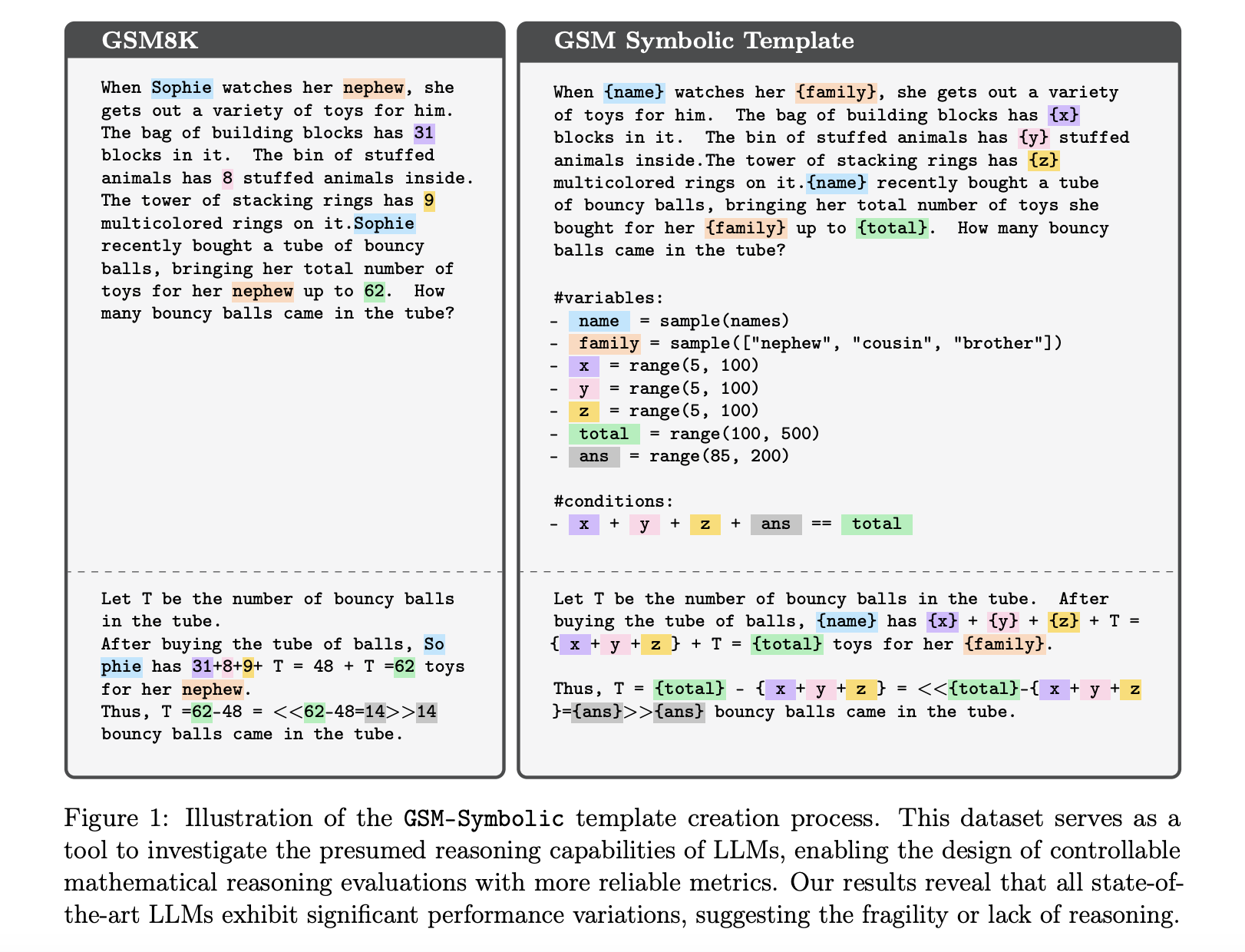

Researchers at Apple have conducted a comprehensive study to assess LLM reasoning with a new benchmark called GSM-Symbolic. This benchmark creates a variety of mathematical problems using symbolic templates, offering more reliable evaluations. The findings indicate that LLM performance decreases significantly when questions become more complex or when irrelevant information is included.

Improving Evaluation with GSM-Symbolic

The GSM8K dataset contains over 8,000 grade-school math questions, but it has limitations, including data contamination and performance inconsistencies. GSM-Symbolic addresses these challenges by generating diverse questions, allowing for a more thorough assessment of LLMs. This benchmark evaluates over 20 models using 5,000 samples, offering valuable insights into the strengths and weaknesses of LLMs in mathematical reasoning.

Key Findings from the Research

Initial tests show significant variability in model performance on GSM-Symbolic, with lower accuracy compared to GSM8K. The study reveals that changing numerical values greatly impacts LLM performance, and as question difficulty increases, accuracy declines. This suggests that LLMs depend more on pattern matching than on true reasoning abilities.

Implications of the Research

The research underscores the limitations of current evaluation techniques for LLMs. The introduction of GSM-Symbolic aims to improve the assessment of mathematical reasoning by providing multiple variations of questions. The results indicate that LLMs struggle with irrelevant information and complex questions, highlighting the need for further advancements to enhance their logical reasoning capabilities.

Take Action with AI Solutions

Transform Your Business with AI

Stay competitive by leveraging AI in your organization. Here’s how:

- Identify Automation Opportunities: Pinpoint areas in customer interactions that can benefit from AI.

- Define KPIs: Ensure your AI initiatives have measurable impacts on business outcomes.

- Select the Right AI Solution: Choose tools that fit your needs and offer customization.

- Implement Gradually: Start with a pilot program, gather data, and expand AI usage wisely.

Stay Connected for More Insights

For expert advice on AI KPI management, reach out to us at hello@itinai.com. For ongoing insights on leveraging AI, connect with us on Telegram or follow us on @itinaicom.

Upcoming Event

RetrieveX – The GenAI Data Retrieval Conference

Join us on Oct 17, 2023, for an exciting exploration of AI-driven data retrieval solutions.

Follow the Research

Check out the full research paper for in-depth insights. All credit goes to the dedicated researchers behind this project. Don’t forget to follow us on Twitter, join our Telegram Channel, and connect with our LinkedIn Group. If you appreciate our work, subscribe to our newsletter and join our community of over 50,000 on our ML SubReddit.