Practical Solutions and Value of ANOLE: An Open, Autoregressive, Native Large Multimodal Model for Interleaved Image-Text Generation

Challenges Addressed

Existing open-source large multimodal models (LMMs) often lack native multimodal integration and rely on adapters, which adds complexity and inefficiency during both training and inference.

Proposed Solution

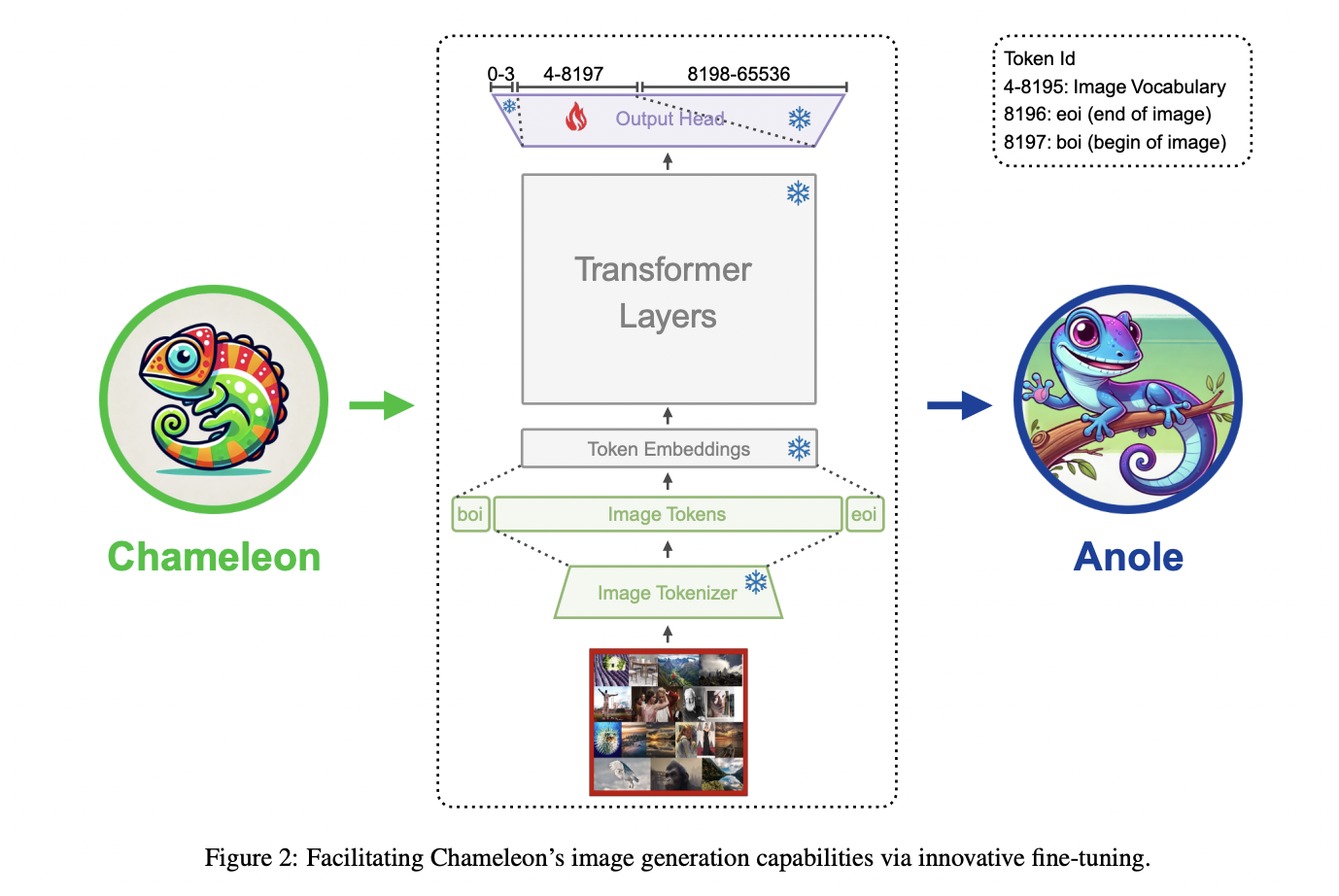

ANOLE is an open, autoregressive, native LMM for interleaved image-text generation that addresses the limitations of previous open-source LMMs. It offers a data- and parameter-efficient route to high-quality multimodal generation.

Key Features

ANOLE adopts an early-fusion, token-based autoregressive approach: it models multimodal sequences with transformers alone, without diffusion models. It demonstrates strong image and multimodal generation capabilities despite limited training data and few trained parameters.
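To make "early-fusion, token-based" concrete, here is a minimal sketch of how interleaved text and images can be flattened into a single token sequence that one autoregressive transformer could model. It assumes images have already been mapped to discrete codes by some image tokenizer. The vocabulary sizes, the `BOI`/`EOI` marker tokens, and the `fuse`/`split` helpers are hypothetical illustrations, not ANOLE's actual configuration or API.

```python
from typing import List, Tuple

# Hypothetical toy sizes, chosen for illustration only (not ANOLE's values).
TEXT_VOCAB_SIZE = 1000
IMAGE_CODEBOOK_SIZE = 256

# Image codes are shifted past the text ids so both share one vocabulary.
BOI = TEXT_VOCAB_SIZE + IMAGE_CODEBOOK_SIZE  # hypothetical begin-of-image marker
EOI = BOI + 1                                # hypothetical end-of-image marker

Segment = Tuple[str, List[int]]  # ("text", token ids) or ("image", codebook ids)


def fuse(segments: List[Segment]) -> List[int]:
    """Flatten interleaved text/image segments into one token sequence."""
    seq: List[int] = []
    for kind, ids in segments:
        if kind == "text":
            if any(not 0 <= i < TEXT_VOCAB_SIZE for i in ids):
                raise ValueError("text id out of range")
            seq.extend(ids)
        elif kind == "image":
            if any(not 0 <= c < IMAGE_CODEBOOK_SIZE for c in ids):
                raise ValueError("image code out of range")
            seq.append(BOI)
            seq.extend(TEXT_VOCAB_SIZE + c for c in ids)
            seq.append(EOI)
        else:
            raise ValueError(f"unknown segment kind: {kind}")
    return seq


def split(seq: List[int]) -> List[Segment]:
    """Invert fuse: recover text and image segments from a flat sequence."""
    segments: List[Segment] = []
    text_buf: List[int] = []
    image_buf = None  # None while outside an image span
    for tok in seq:
        if tok == BOI:
            if image_buf is not None:
                raise ValueError("nested image span")
            if text_buf:
                segments.append(("text", text_buf))
                text_buf = []
            image_buf = []
        elif tok == EOI:
            if image_buf is None:
                raise ValueError("end-of-image without begin-of-image")
            segments.append(("image", image_buf))
            image_buf = None
        elif image_buf is not None:
            if not TEXT_VOCAB_SIZE <= tok < BOI:
                raise ValueError("non-image token inside image span")
            image_buf.append(tok - TEXT_VOCAB_SIZE)
        else:
            if not 0 <= tok < TEXT_VOCAB_SIZE:
                raise ValueError("non-text token outside image span")
            text_buf.append(tok)
    if image_buf is not None:
        raise ValueError("unterminated image span")
    if text_buf:
        segments.append(("text", text_buf))
    return segments
```

Because text and image tokens live in one sequence, a single next-token predictor can in principle emit either modality at any position. In a scheme like this, generation would switch to image output whenever the model produces the begin-of-image marker, and `split` would route the resulting codes to an image decoder.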

Practical Applications

ANOLE can generate diverse and accurate visual outputs from textual descriptions and seamlessly integrate text and images in interleaved sequences. It can be used for generating detailed recipes with corresponding images, producing informative interleaved image-text sequences, and more.

Advantages

ANOLE democratizes access to advanced multimodal AI technologies and paves the way for more inclusive and collaborative research in this field.

AI Implementation Recommendations

To leverage AI for business outcomes, identify automation opportunities, define KPIs, select an AI solution, and implement it gradually.
