The Impact of Flash Attention on Training Stability in Large-Scale Machine Learning Models

Addressing Training Challenges

Training large, sophisticated models is a significant undertaking that requires extensive computational resources and time. Instabilities during a training run can cause costly interruptions, a risk that applies to models such as LLaMA2's 70-billion-parameter model.

Optimizing Attention Mechanisms

Flash Attention is a technique that improves the efficiency of the attention mechanism in transformer models by reducing computational overhead and memory usage. It has shown a 14% training speedup for text-to-image models.
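The gains come from how the computation is organized rather than from approximating attention: FlashAttention processes keys and values in blocks and keeps a running maximum and softmax denominator per query (an "online softmax"), so the full N×N score matrix is never stored. The pure-Python sketch below illustrates only that idea, under stated assumptions: the function names and the `block` parameter are hypothetical, it loops over one query row at a time on the CPU, and it is not the fused GPU kernel, which additionally tiles the queries and keeps each tile in on-chip memory.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def naive_attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Standard softmax(Q K^T / sqrt(d)) V that builds the whole score matrix."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    # Full N x M score matrix is materialized before any softmax is applied.
    S = [[scale * sum(a * b for a, b in zip(q, k)) for k in K] for q in Q]
    out = []
    for row in S:
        m = max(row)  # subtract the row max for a numerically stable softmax
        w = [math.exp(s - m) for s in row]
        total = sum(w)
        out.append([sum(wj * v[c] for wj, v in zip(w, V)) / total
                    for c in range(len(V[0]))])
    return out


def tiled_attention(Q: Matrix, K: Matrix, V: Matrix, block: int = 4) -> Matrix:
    """Hypothetical Flash-Attention-style sketch: visit K/V in blocks and keep
    only a running max, running denominator and running weighted sum per query."""
    scale = 1.0 / math.sqrt(len(Q[0]))
    d_v = len(V[0])
    out = []
    for q in Q:
        m, denom, acc = -math.inf, 0.0, [0.0] * d_v
        for start in range(0, len(K), block):
            kb, vb = K[start:start + block], V[start:start + block]
            # At most `block` scores exist at any time for this query.
            s = [scale * sum(a * b for a, b in zip(q, k)) for k in kb]
            m_new = max(m, max(s))
            # Rescale earlier partial sums to the new max (exp(-inf) == 0.0 on the first block).
            corr = math.exp(m - m_new)
            w = [math.exp(x - m_new) for x in s]
            denom = denom * corr + sum(w)
            acc = [acc[c] * corr + sum(wj * v[c] for wj, v in zip(w, vb))
                   for c in range(d_v)]
            m = m_new
        out.append([a / denom for a in acc])
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    N, d = 10, 8
    Q = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(N)]
    K = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(N)]
    V = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(N)]
    diff = max(abs(a - b)
               for ra, rb in zip(naive_attention(Q, K, V), tiled_attention(Q, K, V))
               for a, b in zip(ra, rb))
    print(f"max |naive - tiled| = {diff:.2e}")
```

Both functions compute the same attention output up to floating-point rounding; the difference is that the naive version holds all N×N scores at once, while the tiled version holds at most `block` scores per query at any moment.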

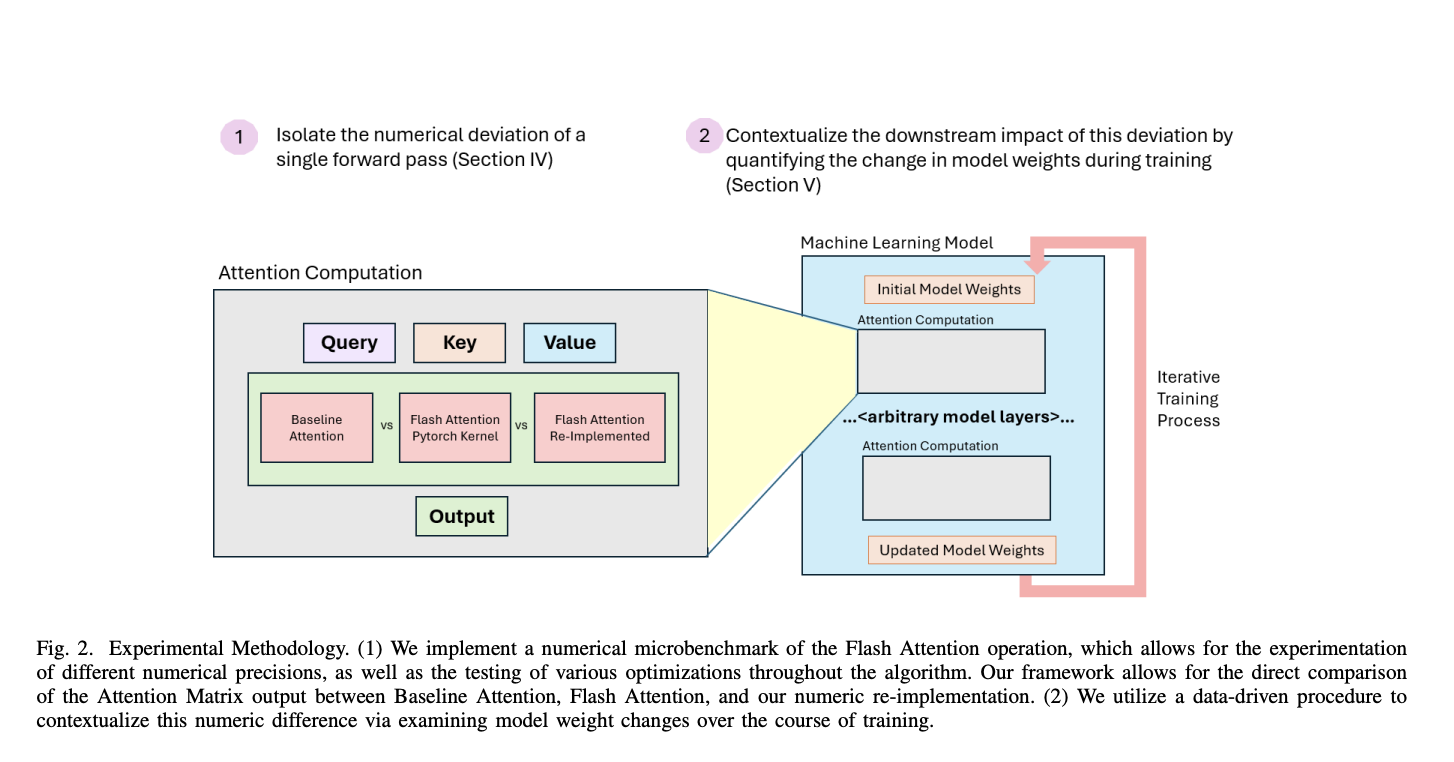

Numeric Deviations and Training Stability

Flash Attention does introduce numeric deviations: because it carries out the attention computation differently, its results differ slightly from those of standard attention. It still improves computational efficiency and memory usage, but the effect of these deviations on training stability requires careful evaluation.
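One way to see where such deviations can come from: a blockwise, rescaled softmax is mathematically identical to the standard one, but it performs the floating-point operations in a different order, and floating-point arithmetic is not associative. The sketch below is an illustrative microbenchmark, not a reproduction of any published measurement. It compares a standard softmax-weighted sum with a hypothetical online, blockwise version for a single query row, first in double precision and then with every intermediate rounded to IEEE half precision. The half-precision rounding is emulated with Python's `struct` `'e'` format as a stand-in for GPU low-precision arithmetic; the helper names and the random inputs are assumptions.

```python
import math
import random
import struct
from typing import Callable, List

Round = Callable[[float], float]


def to_fp16(x: float) -> float:
    """Emulate IEEE 754 half precision by round-tripping through struct's 'e' format."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def full_precision(x: float) -> float:
    return x  # Python floats are already IEEE 754 double precision


def standard_row(scores: List[float], values: List[float], rnd: Round) -> float:
    """softmax(scores) . values, computed over the whole row at once."""
    m = max(scores)
    w = [rnd(math.exp(s - m)) for s in scores]
    num = rnd(sum(wi * vi for wi, vi in zip(w, values)))
    return rnd(num / rnd(sum(w)))


def online_row(scores: List[float], values: List[float], rnd: Round, block: int = 4) -> float:
    """Same quantity computed blockwise with a running max and rescaling."""
    m, denom, num = -math.inf, 0.0, 0.0
    for start in range(0, len(scores), block):
        s, v = scores[start:start + block], values[start:start + block]
        m_new = max(m, max(s))
        corr = rnd(math.exp(m - m_new))  # 0.0 on the first block
        w = [rnd(math.exp(x - m_new)) for x in s]
        denom = rnd(denom * corr + sum(w))
        num = rnd(num * corr + sum(wi * vi for wi, vi in zip(w, v)))
        m = m_new
    return rnd(num / denom)


def max_deviation(rnd: Round, rows: int = 200, n: int = 64, seed: int = 0) -> float:
    """Largest |standard - online| over random rows (hypothetical benchmark setup)."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(rows):
        scores = [rnd(rng.uniform(-4, 4)) for _ in range(n)]
        values = [rnd(rng.uniform(-1, 1)) for _ in range(n)]
        gap = abs(standard_row(scores, values, rnd) - online_row(scores, values, rnd))
        worst = max(worst, gap)
    return worst


if __name__ == "__main__":
    print(f"float64 max deviation: {max_deviation(full_precision):.2e}")
    print(f"float16 max deviation: {max_deviation(to_fp16):.2e}")
```

In double precision the two results differ only at the level of double-precision rounding; with half-precision rounding the gap is typically orders of magnitude larger. Whether gaps of that size matter over a full training run is the stability question raised above, and a sketch like this cannot answer it on its own.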
