The Efficient Deployment of Large Language Models (LLMs)

Practical Solutions and Value

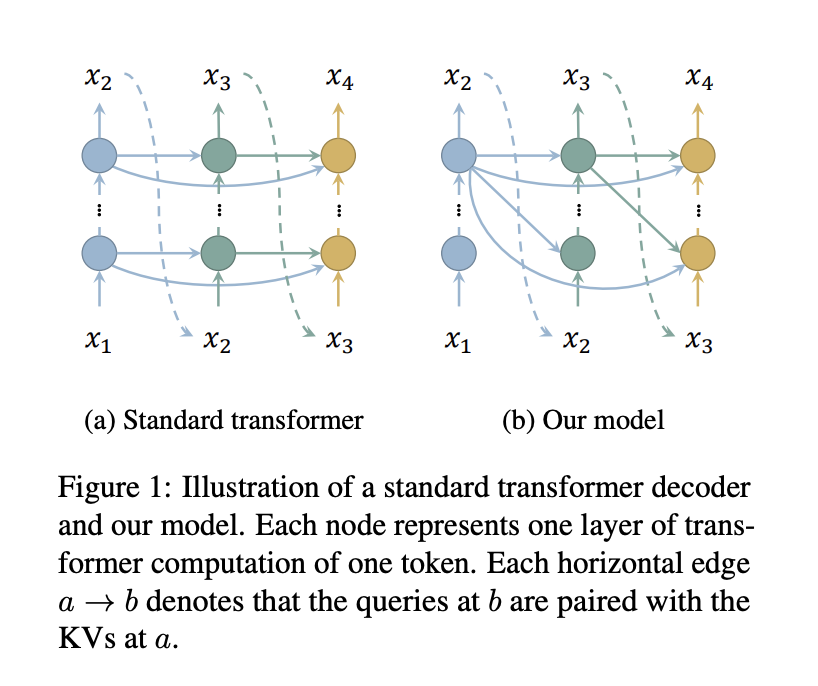

The efficient deployment of large language models (LLMs) requires high throughput and low latency. However, the key-value (KV) cache consumes substantial memory, which limits the achievable batch size and therefore throughput. Approaches such as compressing KV sequences and dynamic cache eviction policies aim to reduce this memory burden.
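To see why the KV cache limits batch size, here is a minimal sketch of the standard size estimate. Every layer stores one key vector and one value vector per head for every token of every sequence. The configuration used below (32 layers, 32 KV heads, head dimension 128, fp16 storage) is an assumed Llama-2-7B-like shape chosen for illustration, not a figure from the study.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """Estimate the KV cache size in bytes for a decoder-only transformer.

    The leading factor of 2 counts keys and values; bytes_per_elem=2 assumes fp16/bf16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


if __name__ == "__main__":
    GIB = 2 ** 30
    # Assumed Llama-2-7B-like shape: 32 layers, 32 KV heads of dimension 128.
    for batch in (1, 8, 32):
        size = kv_cache_bytes(32, 32, 128, seq_len=4096, batch_size=batch)
        print(f"batch={batch:>2}: {size / GIB:.1f} GiB")
```

The cache grows linearly in the number of layers, the sequence length, and the batch size. Under these assumptions each 4,096-token sequence costs 2 GiB, so a batch of 32 needs 64 GiB for the cache alone.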

Researchers from the School of Information Science and Technology, ShanghaiTech University, and the Shanghai Engineering Research Center of Intelligent Vision and Imaging propose an approach that reduces the KV cache memory of transformer decoders by decreasing the number of layers whose KVs are cached. The method saves significant memory without additional computation overhead while maintaining performance competitive with standard models.
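The idea of caching fewer layers can be illustrated with a toy cache in which several layers share the keys and values stored for one designated layer. This is a hypothetical sketch, not the authors' implementation: the class name `SharedLayerKVCache` and the `source_of` mapping are illustrative assumptions, and the attention computation itself is omitted. In the configuration shown, every layer reads from the top layer, which is one possible way to go from 32 cached layers to 1.

```python
class SharedLayerKVCache:
    """Toy KV cache that stores entries for only a subset of layers.

    Hypothetical illustration: source_of[i] is the layer whose cached
    keys/values layer i attends to. Only source layers allocate storage.
    """

    def __init__(self, source_of: dict[int, int]):
        self.source_of = source_of
        self.store: dict[int, list[tuple[list[float], list[float]]]] = {
            src: [] for src in set(source_of.values())
        }

    def append(self, layer: int, key: list[float], value: list[float]) -> None:
        # Non-source layers skip the write entirely, which is where memory is saved.
        if layer in self.store:
            self.store[layer].append((key, value))

    def read(self, layer: int) -> list[tuple[list[float], list[float]]]:
        # Every layer attends to the entries of its designated source layer.
        return self.store[self.source_of[layer]]

    def num_cached_layers(self) -> int:
        return len(self.store)


if __name__ == "__main__":
    num_layers = 32
    cache = SharedLayerKVCache({i: num_layers - 1 for i in range(num_layers)})
    for step in range(4):  # pretend to decode 4 tokens
        for layer in range(num_layers):
            cache.append(layer, key=[0.1 * step], value=[0.2 * step])
    print(cache.num_cached_layers())  # 1: only one layer holds storage instead of 32
    print(len(cache.read(0)))         # 4: layer 0 sees the entries cached for layer 31
```

With one source layer out of 32, cache memory drops to roughly 1/32 of a full cache in this toy model; how the real method chooses which layers to cache, and how it trains the model to use them, is not captured here.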

Empirical Results and Integration

Empirical results show substantial memory reduction and throughput improvement with minimal loss in model quality. The method also integrates readily with other memory-saving techniques such as StreamingLLM; the combination achieves lower latency and memory consumption and can process input streams of effectively unbounded length.
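StreamingLLM bounds cache size by keeping a few initial "attention sink" tokens plus a sliding window of the most recent tokens, evicting everything in between. The sketch below shows only that eviction policy, with one entry per token; the class name and parameter values are illustrative assumptions, and it omits details such as StreamingLLM's use of positions within the cache rather than positions in the original text.

```python
from collections import deque
from typing import Any


class SinkWindowCache:
    """Bounded cache: the first `num_sinks` entries are kept forever, plus a rolling window."""

    def __init__(self, num_sinks: int = 4, window: int = 1020):
        self.num_sinks = num_sinks
        self.sinks: list[Any] = []
        # A deque with maxlen silently drops its oldest item once full.
        self.recent: deque = deque(maxlen=window)

    def append(self, kv: Any) -> None:
        if len(self.sinks) < self.num_sinks:
            self.sinks.append(kv)
        else:
            self.recent.append(kv)

    def contents(self) -> list[Any]:
        # Attention would run over the sink entries followed by the recent window.
        return self.sinks + list(self.recent)


if __name__ == "__main__":
    cache = SinkWindowCache(num_sinks=2, window=3)
    for token_id in range(10):  # stand-ins for per-token KV entries
        cache.append(token_id)
    print(cache.contents())  # [0, 1, 7, 8, 9]
```

Because the cache never holds more than `num_sinks + window` entries, its memory stays constant however long the stream runs. Pairing such a policy with a cache that stores fewer layers would, under these assumptions, shrink that bound further by the ratio of cached layers to total layers.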

Practical Implementation and Evaluation

The researchers evaluated their method on models with 1.1B, 7B, and 30B parameters across different GPUs, including the NVIDIA GeForce RTX 3090 and A100. Evaluation metrics include latency and throughput; across various settings, the method supports significantly larger batch sizes and achieves higher throughput than standard Llama models.
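The link between cache size and batch size can be made concrete with a back-of-the-envelope estimate: divide the memory left for the KV cache by the cache cost of one sequence. The 40 GiB budget, the Llama-2-7B-like per-layer cost, and the choice of 2 cached layers are hypothetical values for illustration, not the paper's measured settings. Real systems also need memory for activations and allocator overhead, so this estimate overstates the achievable batch size.

```python
def max_batch_size(kv_budget_bytes: int, bytes_per_token_per_layer: int,
                   cached_layers: int, seq_len: int) -> int:
    """Largest batch whose KV cache fits in the given budget (ignores all other memory)."""
    per_sequence = bytes_per_token_per_layer * cached_layers * seq_len
    return kv_budget_bytes // per_sequence


def throughput_tokens_per_s(batch_size: int, new_tokens: int, seconds: float) -> float:
    """Generation throughput: total tokens produced across the batch per second."""
    return batch_size * new_tokens / seconds


if __name__ == "__main__":
    GIB = 2 ** 30
    # Assumed: K and V, 32 heads x 128 dims, fp16 -> 2 * 32 * 128 * 2 bytes per token per layer.
    per_token_per_layer = 2 * 32 * 128 * 2
    budget = 40 * GIB  # hypothetical memory left for the cache
    print(max_batch_size(budget, per_token_per_layer, cached_layers=32, seq_len=4096))  # 20
    print(max_batch_size(budget, per_token_per_layer, cached_layers=2, seq_len=4096))   # 320
```

Throughput is then the number of tokens generated across the whole batch per second, as in `throughput_tokens_per_s`; a larger batch raises it only as long as each decoding step does not slow down proportionally.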

AI Solutions for Your Business

If you want to evolve your company with AI, stay competitive, and apply this efficient approach to memory reduction and throughput enhancement in LLMs, consider the following practical steps:

- Identify Automation Opportunities: Locate key customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI endeavors have measurable impacts on business outcomes.

- Select an AI Solution: Choose tools that align with your needs and provide customization.

- Implement Gradually: Start with a pilot, gather data, and expand AI usage judiciously.

Spotlight on a Practical AI Solution

Consider the AI Sales Bot from itinai.com/aisalesbot, designed to automate customer engagement 24/7 and manage interactions across all stages of the customer journey.